How Iñárritu Shoots A Film At 3 Budget Levels

In this video I’ll break down how Alejandro González Iñárritu directed his first low-budget feature, Amores Perros, the mid-budget Birdman and the blockbuster The Revenant.

INTRODUCTION

Gritty, textural, real and raw are how I’d describe the look of Alejandro González Iñárritu’s highly acclaimed movies. From the breakneck success of his low-budget independent debut all the way up to high-budget blockbusters, his movies are the product of a clear directorial voice that goes after difficult, risky stories, which at times interweave non-linear narratives and express the psychological strife of each character.

In this video I’ll break down how Iñárritu created his first low-budget feature, Amores Perros, the mid-budget Birdman and the blockbuster The Revenant.

AMORES PERROS - $2 MILLION

Iñárritu’s success as a feature film director didn’t happen overnight. He began his career in a different field of entertainment, radio, which progressed into a producing job in TV, which led to him creating his own production company, Zeta Films - where he produced commercials, short films and even a TV pilot.

Since Amores Perros was an incredibly ambitious first feature, both logistically and structurally, it helped that he brought some directing experience to it, along with an established relationship with his crew.

“Most of the people that worked with me on this film, almost all of the head of departments from Rodrigo Prieto to Brigitte Broch the production designer, all this team, we had been working together for many years doing commercials - I have a production company.

So, in a way, that complexity, it was a language that we had already established between us. So it was my first film but definitely was not my first time on a set.” - Alejandro González Iñárritu

The story, which he worked on with screenwriter Guillermo Arriaga, was constructed from three interwoven subplots, each featuring a different character’s relationship with a dog, and connected by a common plot point.

This push to make such a tightly packed and difficult first feature on a relatively low budget was partly due to the difficulties involved in producing a movie in Mexico at the time.

“Your first film normally was at the mercy of the government and then you just show it to your friends. Because there was no money, nobody wanted to see any Mexican film at that time.

There was an anxiety that runs that it was your only real opportunity to say something and to express yourself. So, I think it has to do with: you want to include everything you wanted to say.” - Alejandro González Iñárritu

Both the story and the way it was told stylistically through the cinematography leaned into the extreme.

Most of the film was shot with a handheld camera and wide angle lenses, which, combined with Prieto’s dynamic camera operating, injected a gritty, raw realism into the story. This technique of moving with characters on wide angle prime lenses, from 14mm to 40mm, is something that he would continue to use in his later movies.

Shot on a wide angle lens.

This warped, wide angle intensity, used to capture the more intense and chaotic characters, was flipped when photographing the outcast figure Chivo. They instead shot him at much longer, telephoto focal lengths on an Angenieux 25mm-250mm HR zoom lens, which had the effect of further isolating him from the environment.

Shot on a telephoto lens.

Another large part of the extreme, raw look of the movie was created by how the 35mm film was developed in the lab. Skipping the bleach step during processing, a technique called bleach bypass, creates a desaturated, higher contrast look with exaggerated, more pronounced film grain.

In other words, all the vibrancy in the colour gets sucked out, except for a few colours like red which remain bold and punchy; the highlights get brighter and are more prone to blowing out, while the shadows more easily get crushed to pure black with little detail.
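As a rough digital analogy only, the look described above can be approximated per pixel by pulling each colour channel towards its luminance and then stretching contrast around mid-grey, with clipping standing in for blown highlights and crushed shadows. This sketch does not model the actual lab chemistry, the grain, or the way some colours like red survive; the Rec. 709 luma weights and the `desat` and `contrast` defaults are assumptions chosen for illustration.

```python
def bleach_bypass(rgb, desat=0.5, contrast=1.5):
    """Rough bleach bypass emulation for one pixel with channels in 0..1.

    Assumption: a simple digital approximation, not a model of film chemistry.
    """
    r, g, b = rgb
    # Rec. 709 luma weights (they sum to 1.0)
    luma = 0.2126 * r + 0.7152 * g + 0.0722 * b

    def desaturate(c):
        # Pull the channel part of the way towards grey
        return c + (luma - c) * desat

    def stretch(x):
        # Linear contrast around mid-grey; clipping mimics blown
        # highlights and shadows crushed to pure black
        return min(1.0, max(0.0, 0.5 + (x - 0.5) * contrast))

    return tuple(stretch(desaturate(c)) for c in (r, g, b))


print(bleach_bypass((0.9, 0.9, 0.9)))  # bright grey clips to white
print(bleach_bypass((0.1, 0.1, 0.1)))  # dark grey crushes to black
print(bleach_bypass((0.8, 0.2, 0.2)))  # red remains, but less saturated
```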

Iñárritu has stated that this bleach bypass look was a way of emulating and exaggerating the look of Mexico City, which is quite polluted, with particles in the air that make things feel hazy and grey. It also added more contrast to the city light, which could otherwise look quite flat.

Iñárritu’s bold, risky vision, which combined an extreme, raw narrative with an extreme, raw look, went down very well at festivals, where it won the Critics’ Week Grand Prize at Cannes. It inspired him to create a trilogy of like-minded films which he called his Death Trilogy, and it went on to succeed with audiences, making back around 10 times its low budget. In doing so, it jump-started his career as a director.

BIRDMAN - $16 MILLION

“I shot that film in 19 days - less than four weeks - and it was crazy.” - Alejandro González Iñárritu

Birdman came about during a free window in his schedule, while he waited for the right winter season to shoot his next, much higher budget movie, The Revenant. The film’s $16.5 million budget was financed as a co-production between Fox Searchlight, who initially got the script but had a cap on what they could spend, and New Regency, who were also producing The Revenant.

There were some resonances in the script between lead actor Michael Keaton’s own career and that of his character, who was well known for playing a superhero and struggled to be taken seriously as a ‘prestigious’ theatre actor.

Iñárritu pitched a radical idea that the entire film should happen in a single, long take - or more accurately have the appearance of a single take through combining and disguising the cuts from various individual takes.

This long take concept was partially based on the idea of interconnecting various characters - like he’d also done in his prior work - and capturing the intensity and energy of backstage, without giving the audience cutting points or moments to breathe.

Unlike Amores Perros, which creates an extreme intensity through quick cutting and a large number of camera angles, Birdman took the opposite approach, creating intensity by keeping the camera always in motion and never cutting.

This stylistic decision was also one that was formed out of necessity.

“The reason I think I got into this different kind of approach or design of making films was because of need. Sometimes the restrictions and limitations are the best - at least creatively. I didn’t have enough money. I didn’t have enough time.” - Alejandro González Iñárritu

Securing an ensemble cast and constructing the stages meant that the shooting schedule was limited to only 19 days. These choreographed long takes are incredibly difficult to shoot, as they require perfection not only from the actors but also from the crew and camera operators. However, pulling off, for example, a 10 minute long take can knock a considerable number of pages off the schedule in a short amount of time.

Taking this approach meant that the final film had to be fully designed in pre-production, before shooting, rather than discovered or re-constructed in the edit.

The ‘editing’ happened up front, both in cutting the script down from its initial 125 pages to 103, and in the months leading up to the shoot, when cinematographer Emmanuel Lubezki and Iñárritu worked out the blocking with stand-ins, a camera and a mock set in a warehouse, which they mapped out with textiles and C-stands.

The film was shot on Alexa cameras, mainly the Mini, either in a Steadicam build for smoother tracking shots or handheld, operated by Lubezki. Like Amores Perros, it was filmed on wide angle lenses, a combination of Master Primes and Summilux-Cs, which are both very clean, sharp sets of prime lenses.

To keep a naturalistic feel to the lighting, and to be able to practically shoot 360 degrees on the sets, Lubezki designed the lighting around only using practical sources that could be seen within the shot, whether that was overhead stage lighting, bulbs on makeup mirrors or overhead fluorescents in a corridor.

This meant that colour temperatures were mixed and at times cast monochromatic hues over the image. This may have gone against the traditional expectation of maintaining accurate skin tones, but it gave the images the naturalistic, real feel that is present throughout Iñárritu’s movies.

The digital Alexa allowed him to roll for long takes and to expose at a very sensitive 1,200 ASA with the lenses opened up to a T/2 aperture. This allowed them to shoot in low light environments while also preserving a wide dynamic range between highlights and shadows. This helped when shooting a shot that went out into Times Square at night, which wasn’t locked off, so they had no control over the lighting or how it was balanced.
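To put numbers on settings like these, exposure changes are usually counted in stops: doubling the ISO/ASA gives one stop, and because the light reaching the sensor scales with 1/T², each factor of √2 in T-number is also one stop. The sketch below compares a hypothetical baseline of 800 ASA at T2.8 (an assumption for illustration, not a figure from the production) with the 1,200 ASA at T/2 mentioned above.

```python
import math


def stops_gained(iso_from, iso_to, t_from, t_to):
    """Extra exposure, in stops, when changing ISO/ASA and T-stop.

    ISO stops: each doubling of sensitivity is +1 stop.
    Aperture stops: light scales with 1 / T^2, so stops = 2 * log2(T_from / T_to).
    """
    iso_stops = math.log2(iso_to / iso_from)
    aperture_stops = 2 * math.log2(t_from / t_to)
    return iso_stops + aperture_stops


# Hypothetical baseline (800 ASA at T2.8) versus 1,200 ASA at T/2
gain = stops_gained(800, 1200, 2.8, 2.0)
print(f"{gain:.2f} stops brighter")
```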

Although Birdman was shot in a vastly different style - it maintained a feeling of raw realism, chaotic energy and gritty intensity that interconnected different characters in the story - just like he had in his debut feature.

THE REVENANT - $135 MILLION

Following Birdman, Iñárritu leapt into shooting his next, much higher budget feature, which he had been prepping for many years: The Revenant, an action-filled revenge Western set in 1823.

“I prepared that film in 2011. And I started scouting and storyboarding. And I was very excited about the experience to allow myself to go to the nature. And then I realised that there is no that kind of romantic thing of losing yourself in nature. No. It’s a war. You’re at war with nature to make it work - what you need.” - Alejandro González Iñárritu

Again, Iñárritu proposed a radical approach to making the film. He wanted to shoot all the extremely isolated, natural spaces entirely on location, rather than shooting in a studio with bluescreens and locations created by visual effects. He also wanted to shoot the film chronologically.

To envelop audiences in the world and push the realism as far as possible, his DP, Lubezki, also pushed to shoot almost entirely using natural light.

Not only did shooting it for real produce a visual style that is unmatched in realism, but placing the actors in the real environment and shooting chronologically put the actors through real, raw, intense conditions that, probably, accentuated the level of realism in the performances.

“The conditions were massive. The physical scenes that he went through were extremely precise. Actually dangerous. Because if you do a bad move, the choreography with these kinds of stunts with such a speed and camera movements that are so precise, you put yourself at risk.” - Alejandro González Iñárritu

These many stunt sequences were made even more challenging as, in a similar style to Birdman, Iñárritu decided to design many of these sequences as long takes. Throughout the production they used one of three methods of moving the camera: a Steadicam for smoother tracking shots, Lubezki operating a handheld camera, or using a Technocrane for moves at speed, over difficult terrain or for booming overhead camera moves.

A technical challenge that emerged from shooting long takes in uncontrolled natural light was how to balance exposure, so that the image didn’t blow out or get too dark when moving from a dark area to a light area, or vice versa.

Lubezki’s DIT was tasked with pulling the iris. This is where a motor is attached to the aperture ring of a lens and controlled remotely from a handheld unit, which can be turned to change the aperture during a shot, either opening up to make the image brighter, or stopping down to make it darker. This has to be done carefully and gradually so that the changes in exposure aren’t noticeable and distracting.
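One way to think about a gradual iris pull is to spread the change evenly in stops rather than in raw T-numbers, since each stop is a factor of √2 in T-number. The function below is a hypothetical illustration of that idea, generating one T-stop per frame between a start and end value; on set the pull is done by hand on the wheel, not computed like this, and the 24 fps, two second ramp is an assumed example.

```python
import math


def iris_ramp(t_start, t_end, frames):
    """Per-frame T-stops that change by an equal number of stops each frame.

    Hypothetical helper for illustration. Each stop multiplies the
    T-number by sqrt(2), so interpolate linearly in stops (log space).
    """
    if frames < 2:
        raise ValueError("need at least two frames for a ramp")
    total_stops = 2 * math.log2(t_end / t_start)
    return [
        t_start * math.sqrt(2) ** (total_stops * i / (frames - 1))
        for i in range(frames)
    ]


# Stopping down from T2.8 to T8 over two seconds at 24 fps
ramp = iris_ramp(2.8, 8.0, 49)
print([round(t, 2) for t in ramp[::12]])
```

Interpolating in stops means the brightness changes by the same proportion on every frame. A ramp that is linear in T-number would instead change exposure quickly near the wide open end and slowly near the stopped down end, which is more likely to be noticed.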

After initially choosing to shoot the day scenes on 35mm film, to maximise dynamic range, Lubezki decided to switch to a purely digital workflow - again shooting on the Alexa Mini as the A cam, the XT for Steadicam and crane, and the Alexa 65 for vistas or moments where they wanted the greater width and resolution of the large sensor.

Again, they also used wide angle Master Primes and Summilux-Cs - more specifically the 14mm Master Prime and occasionally a 12mm or 16mm. When on the larger field of view Alexa 65 he would often use a 24mm Prime 65 lens.

Like his other films, The Revenant preserved an intense, raw, real and chaotic feeling, but at a higher budget that could afford one of the biggest stars in the world, a very long production schedule and more complicated visual effects shots, in very challenging, slow shooting environments with many complex action and stunt sequences.

5 Reasons You Should Shoot With A Gimbal

Let’s look at five reasons why filmmakers use gimbals in both videography and on high end productions alike.

INTRODUCTION

Gimbals are often associated more with videography or prosumer camera gear than they are with big budget movies. However, this shouldn’t be the case. For years, this method for stabilising cameras and operating them in a handheld configuration has been used on many industry level shows, commercials and movies.

So let’s use this video to look at five reasons why filmmakers use gimbals in both videography and on high end productions alike.

1. MOVEMENT

For a long time in the early days of cinema it wasn’t possible to shoot with a handheld camera that could move with actors and could be operated by a single person.

Instead, filmmakers that wanted to move these heavy cameras needed to do so on a dolly - a platform which could be slid along a track using wheels. This trained the audience's eyes for decades to accept this smooth tracking movement as the cinematic default.

To this day, this language of smooth, flowing, stable camera movement has persisted and is often sought after by directors and cinematographers. Gimbals are able to achieve a similar movement, without needing tracks and a dolly, by using sensors that detect when a camera is off kilter and correcting that by evening it out with motors in real time.

These motors can control three axes of movement, which is why these devices are also called 3-axis gimbals. They can adjust and even out the up and down motion, known as tilt, the side to side motion, known as pan, and rotational motion, known as roll.

Different gimbals can be set to different modes to control the axes of movement that you want. For example you could limit the motion to a pan follow mode, where the motors stabilise and lock the tilt and roll axes and only react and follow when the operator pans the camera horizontally.

Or you could enable pan and tilt follow, where only the roll axis is locked so that as the operator moves the gimbal horizontally or vertically, the gimbal will follow along with the movement of the operator. Gimbals can therefore be quite reactive to the handheld motions the operator makes, so are a useful tool in situations that require floating, smooth moves that need to track the motion of an actor or moving object.
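The follow modes described above can be modelled as a simple lookup of which axes pass the operator’s movement through and which ones the motors hold steady. This is a deliberately simplified, hypothetical sketch: the mode names are made up, and real gimbals smooth and filter the follow response rather than passing the operator’s motion straight through.

```python
from enum import Enum


class Axis(Enum):
    PAN = "pan"    # side to side
    TILT = "tilt"  # up and down
    ROLL = "roll"  # rotation around the lens axis


# Hypothetical mode names: which axes follow the operator.
# Every axis not listed is locked (held steady by its motor).
FOLLOW_AXES = {
    "pan_follow": {Axis.PAN},
    "pan_tilt_follow": {Axis.PAN, Axis.TILT},
}


def camera_motion(mode, operator_motion):
    """Return the camera's rotation (degrees) per axis for a given mode.

    operator_motion maps Axis -> how far the operator's hands rotated.
    Followed axes pass that movement through; locked axes cancel it out.
    """
    follows = FOLLOW_AXES[mode]
    return {
        axis: operator_motion.get(axis, 0.0) if axis in follows else 0.0
        for axis in Axis
    }


hands = {Axis.PAN: 15.0, Axis.TILT: -4.0, Axis.ROLL: 2.5}
print(camera_motion("pan_follow", hands))
print(camera_motion("pan_tilt_follow", hands))
```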

Because they’re operated handheld, gimbals produce a floating, drifting kind of stability, with small, meandering deviations in the moves caused by manual operation. A dolly, by comparison, is super stable, heavy and tethered to a specific line of track, which creates more precise, cleaner moves. Certain filmmakers may want the drifting feeling of motion that a gimbal provides.

2. UNEVEN TERRAIN

One advantage that a gimbal has over alternative grip rigs that also produce smooth camera movement is that it can be more easily set up and operated over uneven terrain or in remote locations.

While it is possible to lay tracks on uneven outdoor locations - by first building a wooden platform to use as a smooth and level base, it is miles easier to operate the camera handheld on a gimbal and use your feet to move over uneven surfaces.

If venturing into very remote locations, it also means that all a production will need to carry is a gimbal camera build, some batteries and maybe a box of lenses, compared to a massive truck and a full grips package, which may not be able to make it up to certain mountain locations.

Filmmakers may also want dynamic movement that squeezes through tight spaces where larger cinema grip rigs would otherwise not be able to fit - like through car doors or inside tight interiors. Or they may need the camera to move up or down a slope, which could also include something like stairs, which dollies can’t do since they need a stable, level platform to lay tracks on.

3. TIME & MONEY SAVER

On top of these advantages around moving the camera, gimbals are also a great tool for productions as they have the potential to save time and money. Paying for a single gimbal operator, or even having DPs operate the gimbals themselves and getting the first ACs to build and balance them, can provide a good saving on the grips budget.

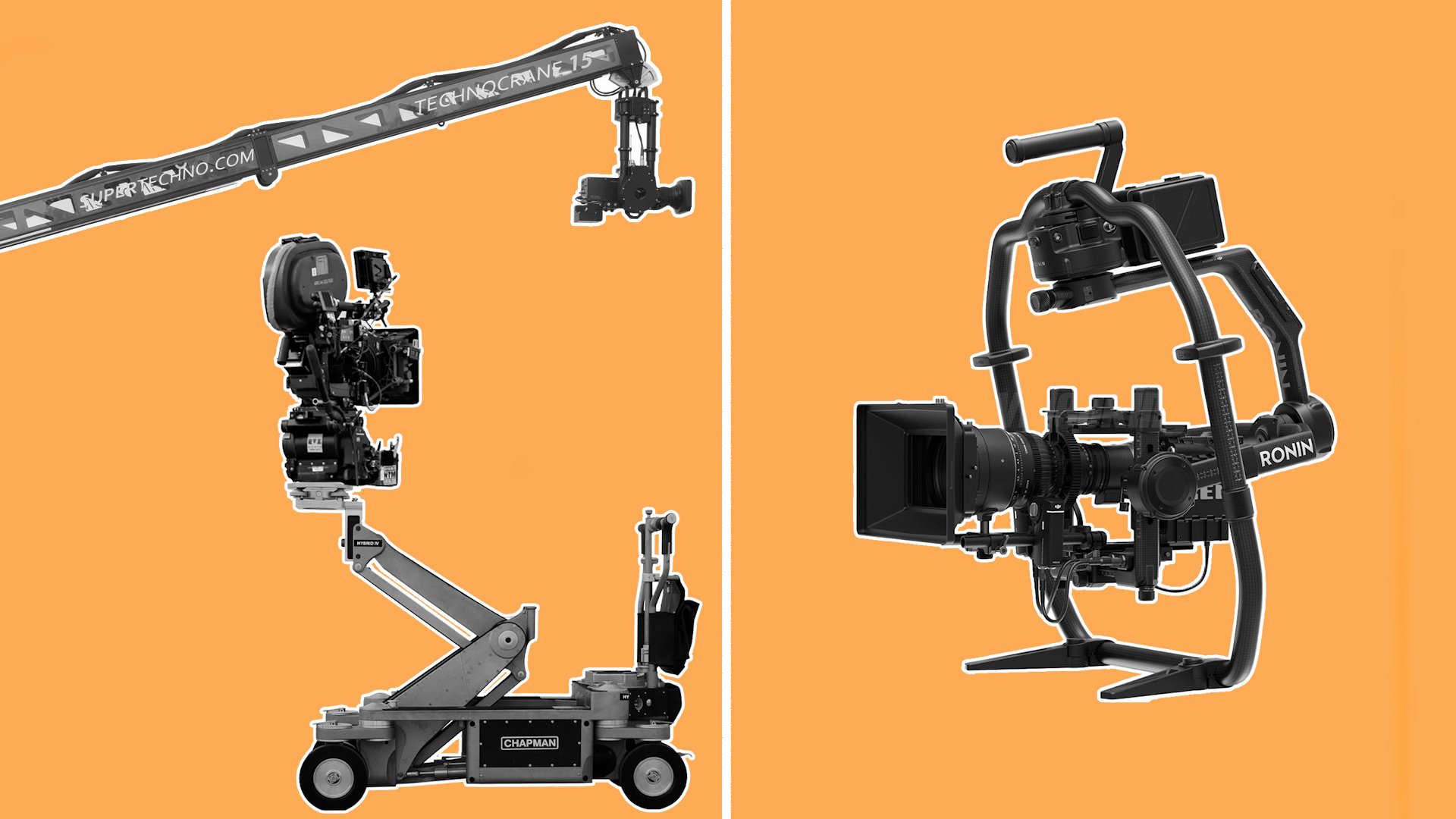

On some shoots, you may be able to get away with using a gimbal for stable motion rather than having a dolly sitting in the truck for smooth moves on stable surfaces and a Technocrane waiting to be set up for moves across uneven terrain.

On top of the gear costs, you also save on crew costs, as choosing to use a dolly or a Technocrane will come with the costs of hiring a larger grip team to set up and run the gear.

While these savings may be less important on some jobs like high end international commercials that have the money to pay for whatever tool is deemed necessary - another type of saving that a gimbal provides that may still be valuable is time.

It’s almost always easier to walk through shots, make adjustments to positioning and do a run through on a handheld gimbal - without needing to get a team to lay tracks, or spend lots of time between setups building and positioning grip rigs.

And on these enormous jobs where the most expensive thing on set may be paying for a celebrity performance or locking off a pricey location - time, as the saying goes, is money. So gimbals may save money not only on the lower rental cost and fewer crew requirements - but also by speeding up the time taken to set up each moving shot.

At this point, some of you may be saying, “Why not just use a Steadicam? It’s a similar cost and gives you a similar feeling of motion.” Well, gimbals actually come with one party trick that Steadicams don’t.

4. REMOTE HEAD

So, what is this extra capability that a gimbal has that a Steadicam doesn’t? Most large production gimbals, like the Movi Pro or Ronin 2, have motors that control three axes of movement and controls, in the form of a joystick or wheels, that let operators wirelessly control how the camera tilts, pans and rolls. This makes the gimbal effectively a remote head.

This means that it can double up, being used in a handheld gimbal configuration for some shots, then rebuilt as a remote head and attached to rigs such as a crane, a car arm or a dolly.

So instead of hiring both a Steadicam to do tracking shots on the ground, and a Libra head that is attached to a crane for an aerial shot - productions can get away with using only one gimbal.

When gimbals are rigged as remote heads, a signal is transmitted from the wheels turned by the operator to the gimbal. There are three wheels, one each for pan, tilt and roll.

So if an operator wants to pan the camera left to right they can roll the side wheel forward. This will then send an instantaneous signal from the wheels to the head - which will pan the camera without the operator needing to be physically near the head.

Gimbals can also be used for ‘transition shots’ that change between two different builds or operating methods during a single take. For example, in this shot the camera, in remote head mode, slides up a storey on a wire rig while being controlled wirelessly by an operator. It is then unclipped from the rig and grabbed by an operator, who uses it to follow the action like a handheld gimbal.

5. FLEXIBILITY

The final reason that many filmmakers opt to use a gimbal is due to the greater flexibility that it provides. This is a matter of taste and feeds into a preference for how directors or DPs like to structure their filming.

Some like to be more traditional, formal and deliberate and move the camera from some form of a solid base like a dolly. This provides a clear move from A to B which can be repeated multiple times, is predictable and relies on actors perfectly hitting their marks by following a prescribed movement based on the pre-established blocking by the director.

However, some other filmmakers like to work in a different way that is more open to experimentation, improvisation and embracing little magical moments that may be discovered.

This may be appropriate for directors who like working with non-professional actors, or in semi-documentary shooting environments - where the ability to change shots on the fly is very important.

It may also be good for directors who like to shoot in long takes - where they can work with actors and give direction as they go, putting the actors in a fully dressed shooting environment and then chasing after them, finding the best angles and moments of performance through shooting.

Having the freedom of a gimbal is great for these longer takes, as the camera can be transported great distances, up stairs, through gaps and over most surfaces as long as the operator's arms can hold it. It also eliminates the need to place tracks - which have a chance of being seen in the shot if shooting in an expansive 360 degree style where we see everything in the location.

Gimbals are great for scenarios where actors aren’t given an exact mark or blocking and are instead encouraged to move around and find the shot. Because its position is not locked onto a track, the camera is free to roam and explore, getting all the benefits that come from operating a handheld camera while preserving some of the traditional cinematic stability that we discussed.

CONCLUSION

As we’ve seen, gimbals offer value not only to lower budget, more improvisational, documentary-style shooting, but also to larger productions that seek stabilised motion in unusual spaces or with dynamic moves, using a rig that saves on setup time, can double up as a remote head and offers the ability to transition between different styles of operating the camera.

As high quality cinema cameras continue to get smaller and smaller and are more easily able to fit and balance on gimbals, this rig will continue to soar in popularity and be an increasingly useful tool for stable, cinematic movement.

The Crop Factor Myth Explained

Let’s go over a more detailed explanation on what ‘crop factor’ is, how it works and a misconception about it.

INTRODUCTION

There’s an idea in photography that cameras with different sized sensors have what we call ‘crop factors’. A large format Alexa 65 has a crop factor of 0.56x compared to a Super 35 camera. A 90mm lens multiplied by 0.56 is about 50mm. Therefore, many people say that using a 90mm lens on an Alexa 65 is going to look exactly the same as using a 50mm lens on a Super 35 camera.

The truth is that this isn’t exactly 100% correct - for quite an important reason. So, let’s go over a more detailed explanation on what ‘crop factor’ is, how it works and the big misconception about it.

WHAT IS CROP FACTOR?

As photochemical film photography emerged and cinema cameras were created, there was a push to create film with a standardised size - that could be used across different cameras from different manufacturers and be developed by different laboratories around the world. That film had a total width of approximately 35mm and therefore was called 35mm.

When digital cinema cameras started getting manufactured, they replaced film with photosensitive sensors that stuck to the approximate size of film’s 35mm 4-perf capture area.

However, along the way some other, more niche formats emerged: from smaller 16mm film, which was a cheaper alternative, to large format 65mm, which maximised the resolution and quality of movies at a higher cost, to tiny ⅔” video chips in early camcorders and Micro Four Thirds photography sensors.

The issue is that when you put the same lens on two cameras with different sensor sizes they will have a field of view that is different, where one image looks wider and one looks tighter.

So, for prospective camera buyers or renters to get a sense of the field of view each camera would have, many manufacturers started to publish what they called a ‘crop factor’ to determine this.

This means you take your lens’ focal length - for example a 35mm lens - and multiply it by the crop factor of the camera - such as 2x - to arrive at a new focal length number, 70mm. This means that on this smaller sensor your 35mm lens will have approximately the same field of view or magnification as a 70mm lens on a Super35 sensor.
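The arithmetic is a single multiplication. A minimal sketch, using the 2x example above and the Alexa 65’s 0.56x crop factor from the introduction; the function name is just illustrative.

```python
def super35_equivalent(focal_length_mm, crop_factor):
    """Focal length giving roughly the same field of view on Super 35.

    Only the field of view matches; depth of field, compression and
    distortion still belong to the lens that is actually used.
    """
    return focal_length_mm * crop_factor


print(super35_equivalent(35, 2.0))   # 35mm lens on a 2x crop sensor
print(super35_equivalent(90, 0.56))  # 90mm lens on an Alexa 65 (0.56x)
```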

Since Super 35 sensors are considered the standard size in cinema, they have a crop factor of 1x. Camera sensors larger than Super 35 have a crop factor of less than 1x, and sensors that are smaller than Super 35 have a crop factor of more than 1x.

THE CROP FACTOR MYTH

So where does the myth part come in? Well, the issue is that many people interpret crop factors as saying that shooting with a 70mm lens on a Super 35 sensor is exactly the same as shooting with a 35mm lens on a smaller sensor with a 2x crop.

What’s important to note is that while the level of magnification of the image may be the same, there are still a bunch of other characteristics that lenses have that will make images different depending on what focal length is chosen.

So what we should say is that a 70mm lens on a Super 35 sensor has approximately the same field of view as a 35mm lens on a smaller sensor with a 2x crop. We shouldn’t say that the two are exactly the same in every way, as different focal lengths come with other secondary characteristics beyond just their field of view.

Rather than thinking of different sensors as magnifying or zooming out on what we see, it’s better to think about it in different terms. If you put the same lens on two cameras, one with a larger sensor and one with a smaller sensor, the way that light enters the lens and forms an image will be the same.

The only difference is that the camera with the smaller sensor has less surface area to capture the image with. This makes it feel like the image is ‘cropped in’ in comparison to the larger sensor which can capture more of the surface area and therefore produce an image which feels wider.

Calculating the crop factor and then swapping to a different focal length may make the width of the images match, but it will also change the very nature of the image by altering the depth of field, the compression and the distortion.
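A standard way to see why only the framing changes is the horizontal angle of view for a lens focused at infinity, 2·atan(w / 2f), where w is the sensor width and f is the focal length. It depends only on the ratio w/f, so halving the sensor width has the same effect on framing as doubling the focal length. The roughly 24.9mm Super 35 width is an approximation of the 4-perf frame, and the 12.45mm sensor is hypothetical.

```python
import math


def horizontal_fov_deg(focal_length_mm, sensor_width_mm):
    """Horizontal angle of view for a lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


SUPER35_WIDTH = 24.9  # approximate 4-perf Super 35 width in mm
HALF_WIDTH = 12.45    # hypothetical sensor with a 2x crop

print(horizontal_fov_deg(35, SUPER35_WIDTH))  # 35mm on Super 35: wider
print(horizontal_fov_deg(35, HALF_WIDTH))     # same lens, smaller sensor: tighter
print(horizontal_fov_deg(70, SUPER35_WIDTH))  # 70mm on Super 35 matches the line above
```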

THE EFFECTS OF FOCAL LENGTHS

The smaller the sensor is, the more cropped in the image will be and therefore the wider focal lengths you will need to use. Whereas the larger the sensor is, the wider the shot will appear which means cinematographers will often choose longer, more telephoto lenses.

One of the secondary effects from using longer focal lengths is that it will create a shallower depth of field. This means that the area that is in focus will be much narrower on a telephoto lens, which means the background will be softer with more bokeh.
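The effect of focal length on depth of field can be checked with the standard thin-lens approximation based on the hyperfocal distance. The sketch keeps the sensor, f-stop and subject distance fixed and only changes the lens; the 0.025mm circle of confusion is an assumed value often used for Super 35, and all distances are in millimetres.

```python
def depth_of_field_mm(focal_mm, f_number, subject_mm, coc_mm=0.025):
    """Total depth of field using the hyperfocal-distance approximation.

    coc_mm is the circle of confusion, an assumed value for Super 35.
    Returns float("inf") when the far limit reaches infinity.
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    if subject_mm >= hyperfocal:
        return float("inf")
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return far - near


# Same sensor, same f/2.8, subject 3 m away: only the focal length changes
print(round(depth_of_field_mm(35, 2.8, 3000)))  # wider lens
print(round(depth_of_field_mm(70, 2.8, 3000)))  # longer lens: much shallower
```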

This is why movies shot on cameras with large format sensors bigger than Super 35, like the Alexa 65, which cinematographers pair with longer focal length lenses, will have a much shallower depth of field, with soft, out of focus backgrounds.

It is a misconception that larger sensors create this effect. In fact, it is the longer focal length lenses that do this.

Another effect that focal lengths have is on how compressed the image is. Wider focal lengths expand the background and make objects behind characters appear further away.

Telephoto lenses compress the background and have the effect of bringing different planes closer to the character.
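One common way to model this assumes the subject is kept the same size in frame: a longer lens then forces the camera further back, and an object’s size in the image is proportional to focal length divided by its distance. The focal length cancels when comparing background to subject, leaving only the distances. The 2 m and 4 m camera positions and the 10 m gap to the background are hypothetical numbers, and the subject and background object are assumed to be the same real height.

```python
def background_scale(camera_to_subject_m, subject_to_background_m):
    """Apparent size of a background object relative to the subject.

    Image size is proportional to focal_length * height / distance, so for
    two objects of equal real height the focal length cancels out.
    """
    return camera_to_subject_m / (camera_to_subject_m + subject_to_background_m)


# Same framing of the subject: 25mm lens at 2 m, 50mm lens at 4 m
wide = background_scale(2.0, 10.0)
tele = background_scale(4.0, 10.0)
print(f"25mm: {wide:.2f}, 50mm: {tele:.2f}, background grows {tele / wide:.2f}x")
```

Under these assumptions, matching the framing on the longer lens makes the background loom larger behind the subject, which is the ‘compressed’ look described above.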

For this reason, cameras with smaller sensors, which need to use wide lenses, may produce images that appear a bit ‘flatter’, without much depth, especially in wide shots. Large format cameras, with their longer lenses, compress the background to create a more layered sense of dimensionality.

Wider lenses also have a tendency to distort the image more. So, shooting a close up of an actor on a Super 35 camera with a wider focal length will expand their face and make their features unnaturally larger, while using a longer focal length on a large format camera with the same equivalent field of view will compress the faces of actors a bit more which many say is a bit more flattering.

CROP FACTORS OF DIFFERENT SENSORS

Although modern digital cinema camera sensors come in many shapes and sizes, in general they conform to a few approximate dimensions.

Some cameras come with the option to shoot a very small section of the sensor that is equivalent to 16mm film. This has an approximate crop factor of 2x compared to Super35.

This little format will usually be paired with wider lenses designed for 16mm - such as the Ultra 16 Primes which range from 6mm up to 50mm focal lengths, which with a crop factor applied produces a field of view of around 12mm-100mm when adjusted for Super35. As we discussed, this 6mm will produce an image with extremely limited bokeh and a deep depth of field that feels quite dimensionally flat.

Next we have Super35 sensors, which are usually considered the standard, such as we find on an Alexa 35 or Red Helium. Each manufacturer produces sensors with subtly different dimensions, but most will be the approximate size of 4-perf 35mm film and produce the standard field of view, where an 18-24mm focal length feels wide, a 35-50mm lens is about a medium, and anything longer, at around 85mm, starts to have a compressed, telephoto feel.

Anything bigger than Super35 size is usually considered to be ‘large format’. This includes ‘full frame’ sensors modelled on still cameras that are approximately 36x24mm. Some examples are the Arri Alexa Mini LF, the Sony Venice 6K or the Sony FX9.

These cameras will have a crop factor of somewhere around 0.67x, which bumps a wider perspective up to around 32mm, a medium feel to around 65mm and a telephoto lens to about 110mm.

65mm cameras like the Alexa 65 push this even further with their approximate 0.56x crop factor, which makes a 45mm lens a wide, a 90mm lens a medium and a 150mm lens a telephoto. As we discussed, shooting a wide field of view with a 45mm will produce much more compression, bokeh and dimensionality than using a 12mm lens on a 16mm camera, even though they’ll produce a similar field of view.
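Going the other way, dividing a Super 35 focal length by a format’s crop factor gives the lens needed on that format for a similar field of view. The sketch uses the approximate crop factors quoted in this section and assumes a 25mm lens as the Super 35 wide, chosen to mirror the 45mm versus 12mm comparison above; real sensors vary by model, so treat the results as rough guides.

```python
# Approximate crop factors relative to Super 35, as quoted in this section
CROP_FACTORS = {"16mm": 2.0, "Super 35": 1.0, "65mm": 0.56}


def lens_for_format(super35_focal_mm, crop_factor):
    """Focal length giving roughly the same field of view as on Super 35."""
    return super35_focal_mm / crop_factor


for name, crop in CROP_FACTORS.items():
    print(f"{name}: ~{lens_for_format(25, crop):.1f}mm for a 25mm Super 35 wide")
```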

It’s important to note that these crop factor numbers are all relative to whichever sensor size is considered the ‘standard’. For example, in still photography a full frame sensor is usually considered normal, with a 1x crop factor, which means smaller APS-C sensors, which are roughly close to Super 35, will have around a 1.5x or 1.6x crop factor.
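Crop factors are commonly calculated as the ratio of sensor diagonals, with the ‘standard’ sensor on top. This sketch uses a 36 x 24mm full frame sensor as the reference, as in still photography, and two approximate APS-C sizes (about 23.5 x 15.6mm and 22.3 x 14.9mm); exact APS-C dimensions vary by manufacturer, so these figures are assumptions.

```python
import math


def crop_factor(sensor_w_mm, sensor_h_mm, ref_w_mm=36.0, ref_h_mm=24.0):
    """Crop factor as reference diagonal / sensor diagonal.

    Defaults to a 36 x 24mm full frame reference, the stills convention.
    """
    return math.hypot(ref_w_mm, ref_h_mm) / math.hypot(sensor_w_mm, sensor_h_mm)


print(round(crop_factor(23.5, 15.6), 2))  # a larger APS-C sensor
print(round(crop_factor(22.3, 14.9), 2))  # a smaller APS-C sensor
```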

What is much more important than getting super technical about these crop factor numbers is understanding how larger or smaller sensor sizes affect the field of view and understanding all the secondary effects that using different focal lengths will have on the image.

Why The Book Is Often Better Than The Movie

What are some of the reasons that make books difficult to adapt into movies?

WHY ADAPT BOOKS?

Cinema has a long history of transforming literary works into movies. This makes sense for a few reasons.

Firstly, a large proportion of audiences will already be aware of the story and characters. Therefore, it’s easier to market the movies and get the existing fanbase into seats without needing to sell a completely new concept, world or story to audiences with promotional materials.

Secondly, some of the best, most inventive and iconic stories which build their own worlds were written as novels. So, there is great subject matter to choose from. However, adapting an existing story to the screen also comes with some baggage.

DIFFERENT IMAGINATIONS

When reading novels, your brain uses the descriptors written by the author and your imagination fills in the image - kind of like how AI can create images from prompts.

Depending on what AI you use, or what prompt you give, you’ll get different variations of images and different interpretations. The same is true of humans. Different directors and audience members will interpret texts differently, not only visually, but also thematically.

This is especially true of texts where the descriptions are a bit vague. Here’s an example. When I say Gollum from The Lord of the Rings, anyone who has seen the movies will immediately picture the character looking like this.

However, illustrator Tove Jansson imagined and represented Gollum like this, based on Tolkien’s description of a ‘slimy creature’, ‘as dark as darkness’ with ‘big, round pale eyes in his thin face’.

This disparity in how the character was imagined led Tolkien to add an extra adjective, ‘small’, to the description in later editions. The point is, different people will imagine things differently.

This applies to the landscapes the stories take place in, how the characters look, which actors are cast, and how key props or objects are rendered. If these representations go against how most of the audience imagined them, they may not be well received.

If directors manage to get past this first hurdle and present a visual world that is palatable to the majority of the audience and aligns with the mainstream imagination, they are faced with another hurdle.

EXPRESSING INTERNAL THOUGHTS

How do you express internal monologues, omniscient narration and characters’ emotions, which are easily conveyed in literary form?

One technique filmmakers have is to use voice over, either from the character themself or from a narrator. However, in many contexts this technique can quickly get overused and disrupt the flow of the movie. Novels, by contrast, can easily break down thoughts, emotions and internal explanations through text at any point they wish.

Other methods that have been used to provide information and context to audiences include: dream sequences, flashbacks and one character telling another a story or explaining something on screen.

These attempts to express internal thoughts about the plot in the form of dialogue can often come out as clunky exposition, which is another reason why translating the thoughts of characters in books to the screen is a challenge.

Good adaptations focus on the characters and allow the story to be told through their actions, rather than lining up plot points and then manipulating the characters to reach them.

SHOW DON’T TELL

Two good rules to overcome exposition are: show rather than tell and delay giving expositional information for as long as possible.

This is usually reliant on great performances from actors who can project their internal emotions and thoughts externally. Likewise, the language of the camera can also be used to express information.

Take this scene from No Country For Old Men - which conveys a huge amount of information without any dialogue. It’s shot from the perspective of Chigurh, so we’re seeing things unfold at the same time he is - delaying revealing expositional information.

He opens the door with a cattle gun. There’s a lot of information here. Firstly, he’s clearly in a rural, farming area that would have such a tool. Secondly, he can clearly adapt to his surroundings using whatever he finds. Thirdly, he makes noise and is confident enough in his capacity for violence that he doesn’t seem to care about people noticing him.

He finds unopened letters, so we know that whoever he’s looking for has been gone a while. There’s an unmade bed, hangers from hastily packed clothes and an open window, so we know the person has left in a hurry. He grabs some milk from the fridge and drinks it. The milk is still good, so whoever he’s after couldn’t have left more than a couple of days ago.

The camera pushes in, getting inside his head. His thoughtful, slow, calculating, psychopathically calm behaviour as an intruder is deeply unsettling.

Then after this mountain of information has been revealed entirely visually - it’s confirmed through a later dialogue exchange with a woman.

This is how good adaptations of books reveal information - by leaning on cinema’s visual tools, and controlling the flow of information to the audience, rather than by overusing expositional dialogue.

STORY STRUCTURE

Another structural difference between books and movies is their length and how they are designed to be consumed.

Novels are by their very nature intended to be read over an extended period of time, in different sittings. Authors can delve into extreme detail like describing the world, adding backstory, getting inside the heads of characters, and they can elongate plots.

Movies are designed to be consumed in a single sitting of one and a half to three hours. This means the plot of an adapted work often needs to be enormously condensed, simplified, restructured or reinvented to make sense within the more limited time frame.

This can be at odds with what fans want, since they are used to the greater plot nuance and depth of the original work.

One solution has been to create a series of instalments, breaking the story into multiple movies. This hasn’t always been successful.

Pacing an adaptation through writing and editing needs to strike a balance between doing justice to the original story and plot, rewriting or removing excessive side storylines, and not overstretching the existing material.

The way that Peter Jackson ends The Return Of The King is a good example. After the ring is destroyed in the film’s climax and the characters return to the Shire, Jackson cuts out the entire ‘Scouring of the Shire’ storyline from the book - where the hobbits retake the Shire through another battle to end Saruman’s rule.

Including this would have both extended the movie’s run time too much and gone against the classic three-act structure of movies, by introducing a second, inferior climax after the true climax of destroying the ring.

CREATING TONE

One of the most challenging parts of adapting an existing work to the screen is finding the correct tone that pays homage to the story’s intention: whether that’s creating a feeling of wonder, an uneasy suspense, or action.

A number of filmmaking tools can be used to achieve this feeling: from the score to the set design to the lighting. An example of visually creating different tones can be seen in how cinematographer Andrew Lesnie, Peter Jackson and the rest of the crew created a unique look for each ‘realm’ or location - which also expressed an emotional tone.

The Shire is green, lush and characters are backlit with golden sunlight that is comforting, homely and natural.

Bree needed to feel a bit more aggressive with a sense of foreboding. So they pushed a yellow-green tint in the grade that made skin tones a bit more sickly and lit it with hard light sources with jagged shadows.

For the magical safe haven of Rivendell they pushed a comforting, warm, autumnal look in post production. They lit scenes with more diffused, softer lighting with less intense shadows. They also introduced digital diffusion into the image, creating a blooming, smudgy halation effect in the highlights, similar to what a strong Pro-Mist diffusion filter produces.

This, again, contrasted heavily with the scenes in Mordor, which tried to suck all life and vibrancy out of the image with an almost monochromatic, neutral palette lit by constantly gloomy, cloudy light.

Each region carried its unique emotional tone not only through the visuals but also through the music.

CONCLUSION

Adapting fiction to the screen is beset by challenges: bringing imagined imagery to life, expressing the internal thoughts of characters, restructuring and shortening the storyline, and creating a tone that aligns with the original source material.

Truly doing stories justice requires directors to have a clear vision, which they refine and structure with careful pre-production planning, unhindered by ulterior financial motives, which is then supported and executed by a superb cast and technical crew.

The 2 Ways To Shoot Car Scenes

There are two main ways of pulling off driving shots: with a process trailer, or with a poor man’s process trailer. Let’s break down how these two techniques work, the gear involved, and some reasons why filmmakers may choose one method over the other in different situations.

INTRODUCTION

A general rule in cinematography is the more variables a scene in a script has, the more difficult it is to film.

Car scenes come with a lot of moving parts…literally. This presents some challenges. However, countless scenes have been written, set and filmed inside moving cars over the years, so some standardised cinematographic methods have emerged to handle these situations.

In fact there are two main ways of pulling off these shots: one method is done practically with real locations and a rig called a process trailer, and the other way is achieved through a bit of filmmaking trickery and is called a ‘poor man’s’ process trailer.

So, let’s break down how these two techniques work, the gear involved, and some reasons why filmmakers may choose one method over the other in different situations.

PROCESS TRAILER

To be able to cut together a dialogue scene filmmakers need to shoot multiple angles of the scene being performed, multiple times. Therefore, it’s important that there is a high level of consistency among all of the different takes, so that when shots filmed at different times are placed next to each other there is an illusion that the scene is continuous and unfolding in real time.

This is why cars present a bit of a snag. Consistency over a long shooting period can be difficult when traffic is unpredictable, the background outside the window changes, the driver alters how fast or slow they are accelerating, and the lighting conditions morph as they drive past different areas that may cause shadows or different angles of light.

Also, asking an actor to drive and perform dialogue at the same time can be a bit too much multitasking and diminish the performance, or even be dangerous as their attention to their driving will be compromised.

For this reason, car dialogue scenes shot while driving on roads are almost always done with a rig called a process trailer. Sometimes also called a low loader, this is a trailer with wheels and a platform big enough to fit a car on - which the actors sit inside - that can be towed by another vehicle. The car that is shown on camera is referred to as the ‘picture vehicle’.

Process trailers need to sit very low to the ground so as to give the illusion that the picture car is driving. If it is too raised then the perspective will be off.

Most low loaders are designed with a front cab section that the driver sits in and a rear section behind the cab with mounting points for lights, a director’s monitor, space for essential crew to sit, gear to get stored, and generators to be mounted that can run power.

This front section then tows the process trailer which the picture car sits on.

The actors sit inside the picture vehicle and the camera operator is placed on the trailer, usually outside the vehicle. The operator films the scene as the actors play out each take and pretend to drive. Meanwhile, the actual driving is done inside the front cab by a professional low loader driver.

Any camera operators, focus pullers or other essential crew that need to be on the trailer section have to be harnessed in for safety and contained by a barrier of mounted poles that grips build.

The route that will be driven by the low loader will be carefully planned ahead of production and will almost always involve getting permission and paying for permits from the local government. They will often insist that a police escort is used to drive in front of or near the process trailer and may even require that some roads need to be closed or blocked off during shooting for the safety of the public.

To provide a consistent background and limit blocking off roads to a small area, the driving route will usually either be looped, or it will be a route that has a turning point at the end of it - which can be driven each time for multiple takes.

This turning point will have to be scouted in advance by the driver to ensure there is enough space to perform a u-turn with the elongated, low clearance vehicle.

There are a number of different shot options that can be used on a process trailer.

Often, cinematographers will shoot from a stabilised platform like a tripod or car mount which the grips can secure on the process trailer, outside the picture car.

Common angles are a front-on two shot through the windscreen, followed by close ups on each actor through their respective side windows.

The camera could also be rigged or shot handheld from inside the picture vehicle.

I’ve also witnessed some DPs who like to operate a handheld camera on an Easyrig, and position themselves just outside the open side windows - especially for car commercials.

If shooting through windows, DPs will almost always use a rota pola filter. As the name suggests, this polariser glass can be rotated by turning a wheel. This positions the filter so that the polarising effect minimises reflections from the glass, allowing the camera to see the actors inside the car without being blocked by reflections.

Some complex camera moves beyond locked off frames can also be achieved, such as this one. It was done by shooting off a Scorpio 10, a small telescoping crane arm that is rigged to the process trailer and moved by a grip. The arm has a mini Libra head attached to it, which means the camera can be tilted, panned or rolled remotely by an operator using wheels.

In this case they achieved these tricky moves by removing the car’s side doors and shooting through the passenger side of the vehicle. They shot all the moves practically then later inserted the window’s glass and reflections with VFX.

Cinematographers will often get their team to rig a fill light on the process trailer. Usually this is quite a soft light with some spread, such as SkyPanels or an HMI with diffusion. This lifts the level of ambient light inside the vehicle, which will be much darker than the bright natural ambience outside. Because the fill stays soft and not too directional, it masks the fact that the shot is being lit at all.

It’s also possible to use a car mount, where grips attach the camera directly to the car. The mount is usually secured by poles with suction cups that stick onto the car’s body, or by a rig called a hostess tray.

In this case, some actors may be able to do their own stunt driving without a process trailer for scenes without dialogue. It may also be possible to use a professional driver who stands in as a double for the actor. This requires shooting at an angle that doesn’t reveal too much of the driver’s identity.

POOR MAN’S PROCESS TRAILER

As nice as it is to shoot driving scenes for real by using a process trailer, this method does come with a number of disadvantages. For this reason, filmmakers also came up with a second, artificial method for capturing these shots - which is referred to as the ‘poor man’s process trailer’.

There are a few different methods of doing this, but basically it involves placing the stationary car in a low light environment, like a studio, then using lighting, giving the car a shake, and creating a simulated background to give the illusion that the actors are in a moving car.

There are four main ways that this can be done: with projection, with lighting, with a green screen or with an LED volume made of giant LED screens.

Although there are different nuances to each of these methods, they are set up in a similar way. First, a background is placed behind the area where the shot will take place. So if the shot is a close up of a driver, then a projection surface will be set up behind the picture vehicle in the same directional line that the camera is pointing.

A projector will then hit that surface with a pre-recorded clip shot out of a moving vehicle which plays out for at least the length of a full take. It’s, of course, important that the video loop in the background is shot at the same angle as the shot which you line up and is moving in the right direction.

The alternative to this is to replace the projection surface with an illuminated green or blue screen. Then in post production key the green and replace it with the video clip of the moving background.

A higher budget version of these two methods is to use an LED volume, which is basically a set of gigantic LED video panels with a much brighter output. Again, clips can be played on these video walls. The walls are usually controlled by software that can also do things like defocus the video and shift its perspective.

Because these panels put out more light, you also get more realistic interactive light. Any highlights in the video clip will produce brighter areas or reflections in the lighting, and any changes in colour will change the colour of the light that hits the subject.

Once you’ve set your background, then it’s time for cinematographers to work on the lighting. How this is done will depend on whether it’s a day or night scene and is based on the discretion of the cinematographer. But, usually it will involve adding some kind of ambience to the scene, like a bounced source that softens and spreads the light while raising the general exposure levels.

Then you’ll usually want to set up some moving light sources to simulate that the car is in motion. This can be done by loosening the lock off on a stand and swivelling a light around. Or, what I’ve found works quite well, is to get a few people to swing around some handheld LED tube sources.

To make the motion feel realistic, I’ve also found that shooting with a handheld camera, combined with getting people to randomly shake the picture vehicle, tends to give a more convincing feeling of movement than shooting a locked off frame.

ADVANTAGES AND DISADVANTAGES OF THE PROCESS TRAILER

One of the biggest reasons why filmmakers choose a poor man’s process trailer over a real process trailer is budget. Shooting with a full police escort and closing off roads can become incredibly pricey.

The cost of renting out a studio, or even shooting outside in a parking lot, is going to be far cheaper than renting a low loader and paying for road closures and permits.

Having said that, shooting with a large LED volume in a big film studio quickly stops being a ‘poor man’s’ method. It will probably cost about as much as shooting with a real process trailer.

So, taking budget out of the equation, what are some of the advantages and tradeoffs of each method?

Basically, shooting with a process trailer offers a realistic look, with little vibrations and real world, interactive lighting, that is difficult to match, while shooting with a ‘poor man’s process trailer’ offers much more ease and convenience.

It’s much easier to record clean dialogue when working in a soundproof studio environment than when working out on real roads.

It’s also significantly faster and easier to change between setups when working in a studio, than when working from a low loader - which often requires driving the vehicle to a stopping point, then getting a full grips team to re-rig the camera so that it is safe and secured.

It’s also far more convenient for the director. When shooting in a studio they can clearly watch every shot on a monitor, and stroll over and give notes or have conversations with actors between takes. Whereas shooting on a process trailer usually involves more staggered communication and direction over a radio.

Studio environments also give cinematographers far more visual control. For example, when shooting outdoors the sun position will change over time, the light may go in and out of clouds during takes changing its exposure and quality, you may get unwanted reflections off the glass or hit a bumpy section of road that moves the camera too much.

In a studio, lights can be set to a consistent intensity and position. Lens changes and camera moves are also easy, since you work off a flat, unmoving surface. This ensures that take after take can be visually repeated in the same way.

Also any technical glitches are easy to fix right away, whereas if a focus motor slips or a monitor loses a transmitted video feed on a process trailer, you’ll have to bring the whole moving circus to a safe stop to fix the problem - which is time consuming.

A final disadvantage to working with a process trailer is that it forces you to face the camera towards the windscreen of the picture vehicle or shoot side on.

Shooting from behind and looking out of the front windscreen requires renting a niche vehicle where the driver’s controls are placed behind the picture vehicle - which can be a lot of effort for a single shot, compared to just turning the car around inside a studio against a moving background.

CONCLUSION

Despite the logistical challenges and inconveniences, some filmmakers with a budget still opt to shoot the real thing, prioritising the realistic visual nuances that come straight out of the box when working with a process trailer.

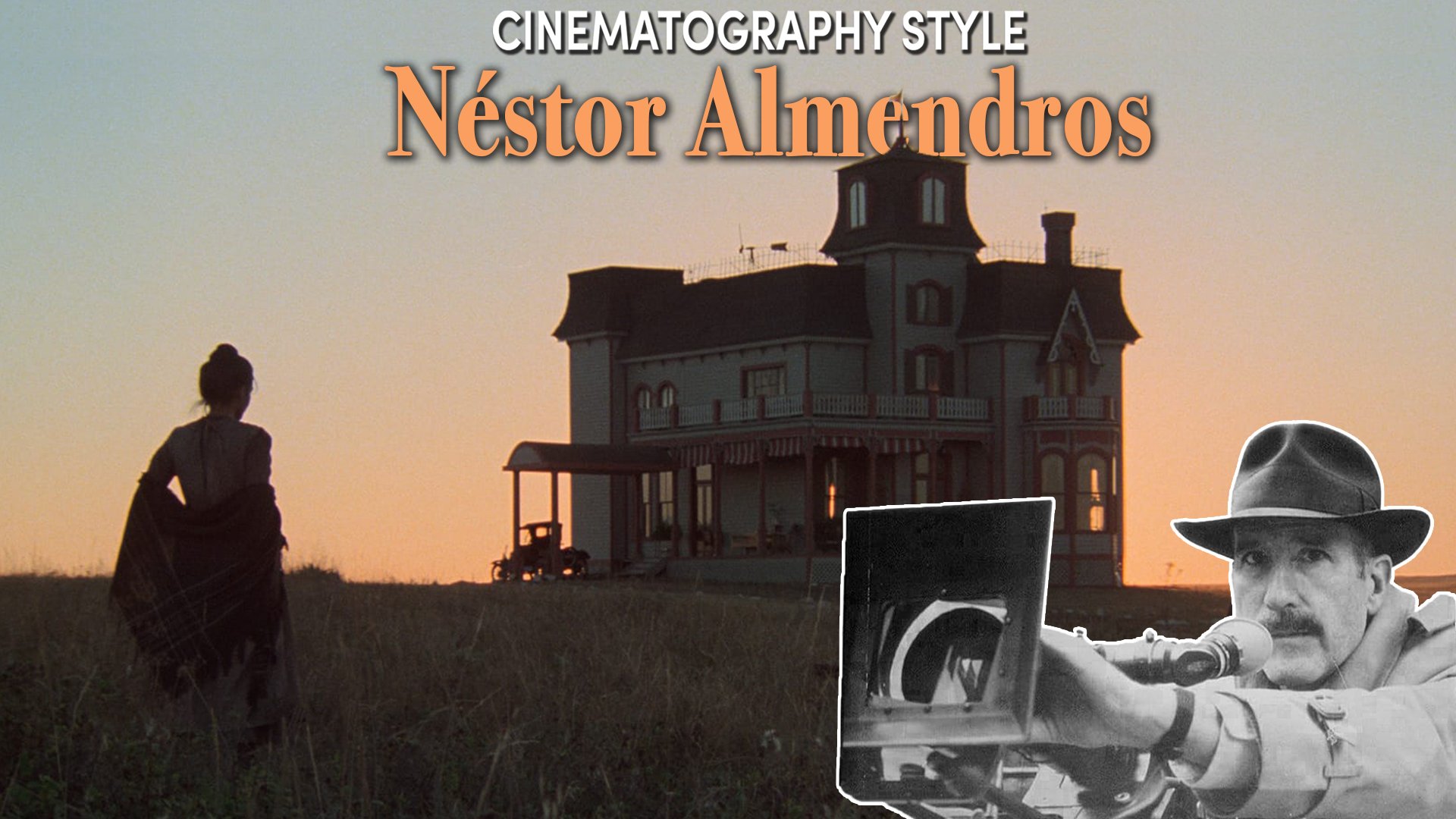

Cinematography Style: Néstor Almendros

Let's take a look at the influential work of cinematographer Néstor Almendros; specifically, his use of natural lighting, his taste for cinematic simplicity and focus on providing directors with his creative insights and knowledge of cinema.

INTRODUCTION

Although cinematographer Néstor Almendros did most of his work in France, often for groundbreaking French New Wave directors, like Éric Rohmer and François Truffaut, he is probably best known for his collaborations with Hollywood directors on some iconic movies in the 70s and 80s.

Directors were often drawn to his pioneering way of working with natural light, his taste for cinematic simplicity and a focus on providing them with his creative insights, beyond just his technical expertise.

In this episode let’s break down how his thoughts and philosophies on cinematography influenced his photographic style, and also take a look at some of the gear and techniques that he used to pull off some breathtaking images.

PHILOSOPHY

Many of the filmmaking techniques that Almendros would later use on larger feature productions were actually gleaned from his earliest explorations into cinematography.

Three of these concepts which appear as threads throughout his filmography are: his knowledge of movies, his focus on natural lighting and his push for cinematic simplicity.

He developed an early love for cinema, so much so that he eventually became a bit of a cinephile and started writing movie reviews. He cites this as one of the best educational resources for DPs. He argues that the technical side of photography can always be learnt, or executed by the film technicians and crew you work with. What is incredibly important is a solid foundation and understanding of the films that came before, and of the current trends in cinema and photography.

In his early years he wanted to be a director. This made having a perspective on the narrative a must. It’s the job of the cinematographer to be sensitive to the needs of the story and have the necessary cultural background to draw from.

An example of a movie that he was influenced by was the early Italian neorealist film La Terra Trema - shot by cinematographer G. R. Aldo. He was blown away by how Aldo used naturalistic lighting in a way that was very different from the other much more stylised and overlit movies of the time - which blasted hard, frontal key light at actors.

The norm was to shoot on sets in film studios and shine hard, spotlit key light, fill light and backlight at actors. Instead, many of these Italian neorealist films used available, naturalistic light in real locations. That light might be hard, with unflattering shadows under direct sun, soft and gentle under cloudy conditions, or the last remnants of dusk light left in the sky after sunset.

His appreciation for naturalistic light may also have come from his roots in shooting documentaries in Cuba. There he could only work with a camera and available light, as there was not enough budget to hire lights or a team of electricians.

To overcome the low levels of light inside some of the houses they would shoot in, they came up with the idea of using mirrors to bounce the sunlight that was outside into the house through windows then bouncing it off the ceiling.

This technique of softening light by bouncing it became important later, but so too did the function of mimicking the direction of the natural sunlight by angling it through windows and increasing its strength.

In other words, taking an existing source of natural light and strengthening it by artificial means.

Almendros inspired a major transition in thinking about lighting. Rather than being bound to film school concepts like three-point lighting, he wanted the lighting in his films to be motivated by what natural light sources, like the sun, do in real life, even when he was using artificial film lights.

He talks about this in his autobiography:

“When it comes to lighting, one of my basic principles is that the light sources must be justified. I believe that what is functional is beautiful, that functional light is beautiful light. I try to make sure that my light is logical rather than aesthetic.”

Another aspect to Almendros’ work is an appreciation for simplicity both in practical, technical terms as well as aesthetic terms. Again, this may perhaps have evolved from the beginning of his career in documentary and on low budget films, where he became accustomed to making do with a lack of resources.

A great example of his economical way of working, was on his first narrative feature: La Collectionneuse which he shot for French New Wave director Éric Rohmer. Because of an extremely limited budget they were faced with a choice early on: either shoot in the less expensive 16mm or shoot extremely economically on 35mm.

They went with 35mm.

A shooting ratio refers to how much footage was shot in relation to the length of the finished movie: so if 20 hours of footage was shot to complete a 2 hour movie then the production had a shooting ratio of 10:1. La Collectionneuse had an insanely low shooting ratio of 1.5:1. This meant that they shot only 1 take for most shots.
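The arithmetic behind a shooting ratio is a single division. Here is a minimal Python sketch. The 90-minute running time in the second example is hypothetical and is not La Collectionneuse’s actual length.

```python
def shooting_ratio(footage_minutes: float, final_runtime_minutes: float) -> float:
    """Minutes of footage shot per minute of finished film (the N in N:1)."""
    return footage_minutes / final_runtime_minutes


def footage_for_ratio(ratio: float, final_runtime_minutes: float) -> float:
    """Total footage implied by a given shooting ratio and finished running time."""
    return ratio * final_runtime_minutes


if __name__ == "__main__":
    # The example from the text: 20 hours shot for a 2 hour film.
    print(shooting_ratio(20 * 60, 2 * 60))  # 10.0
    # Hypothetical 90-minute film at a 1.5:1 ratio (runtime chosen for illustration only).
    print(footage_for_ratio(1.5, 90))  # 135.0
```

Put another way, a 1.5:1 ratio means every minute on screen was backed by just 90 seconds of exposed film. That leaves almost no room for alternate takes.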

Working in such an economical way has a few advantages: it forces directors to think about exactly what they want and have a refined vision. As Almendros says: “the problem is that when there are many options there is a tendency to use them all.”

Even later on in his career when he was working with larger budgets on Hollywood productions, he always gravitated to finding the simplest method using the tools that would most easily produce an image with functional, realistic light that told the story.

For example, he would forgo a big truck full of lights and a large team of electricians if he could get a more authentic image from natural light alone.

Visually, many of the films he shot also have a certain stylistic simplicity to them. Although of course it depended on which director he worked with, he often shot quite deliberately composed, static frames without many dynamic camera moves. This was especially true when working with Rohmer who liked shooting stationary frames from a tripod head.

So, Almendros can be characterised by his cinematic knowledge, naturalistic lighting and simplicity. But how did he translate that into the techniques and gear that he used?

GEAR

Nowadays the default for most cinematographers is to base the placement and quality of their lights on the real-life sources that exist in the location.

As I mentioned before, that wasn’t always the case. The prior standard was that actors should almost always be well illuminated in clear, strong pockets of light. These were usually placed in front of the talent, from above, and shined directly at them. This clearly illuminates the face without shadow, but isn’t what light does in real life.

Compare this shot of how actors used to be lit when placed next to a window, to how Almendros did it in Days Of Heaven. There are two big takeaways.

Firstly, Almendros places the light source outside the window, shining in, mimicking the direction the sunlight would come from in real life. The other shot, by contrast, keys the actor with a high, frontal source of light that doesn’t make sense in the real world.

Secondly, the quality of the light is different. Almendros uses a much more diffused light that is far softer, with a natural, gentle transition from shadow to brightness. The other example has a very clear, crisp shadow caused by very strong, undiffused artificial light.

A technique he often used to get this soft quality of light when shooting interiors was to bounce lights, often from outside a window, into the ceiling. This reflected the source around the room, decreasing the intensity of the light, but lifting the overall ambience in the room in a natural way.

He liked using strong sources with high output to create his artificial sunlight for interiors on location or in studio sets, such as tungsten minibrutes, old carbon arc lights, or, later on, HMIs.

Although he is known for his use of naturalistic soft light in movies like Days Of Heaven, he also did use hard light at times when it was functional and could be justified by a realistic source.

He also often favoured lighting with a single source, meaning one lighting fixture pushing light in a single direction. He often did this by using practical light fixtures, like shaded lamps with tungsten bulbs, and not adding any extra fill light to lift the exposure levels in the space.

In Days Of Heaven he even carried this idea over to the oil lamp props. He had them fitted with electric quartz bulbs shining through orange-tinted glass, wired under the actors’ shirts to a battery belt they could wear.

These innovative solutions led the way to what is nowadays easily done with battery powered LEDs.

He paired this warm practical light with another lighting technique he would master - exposing for very low levels of ambient dusk light in the sky.

Days of Heaven is probably best known for using this dusk light known as “magic hour”, but it’s actually something that he’d been doing since his first feature.

This was especially difficult because, for most of his career, he worked with a Kodak film stock that had a very low ASA rating by today’s standards. Kodak 5247 is a tungsten balanced film that was rated at only 125 EI. That is almost 5 stops slower than a modern digital cinema camera like the Sony Venice 2, which can shoot at 3,200 EI.

To expose at these extremely low levels of natural light he would rate the 125 ASA film at 200 ASA on his light meter, underexposing by 2/3 of a stop. As it got darker he would then remove the 85 filter. This filter converts tungsten-balanced film for shooting in daylight, but it also costs about two-thirds of a stop of light.

Then as it got progressively darker he’d also change to lenses with a faster aperture that let in more light, ending wide open on a Panavision Super Speed T1.1 55mm - poor focus puller.
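Each step in that sequence can be counted in stops. ISO ratings are compared using the base-2 logarithm of their ratio. T-stops use twice that logarithm, because the light coming through the lens falls with the square of the T-number. Here is a minimal Python sketch. The 2/3-stop filter factor is the figure usually quoted for an 85 filter, and the T2.8 starting aperture is purely hypothetical.

```python
import math

# Assumption: the filter factor usually quoted for a Wratten 85 conversion filter.
FILTER_85_STOPS = 2 / 3


def stops_between_iso(slower: float, faster: float) -> float:
    """Stops of sensitivity separating two ISO/ASA/EI ratings (each doubling = 1 stop)."""
    return math.log2(faster / slower)


def stops_between_t_stops(slower_t: float, faster_t: float) -> float:
    """Stops of extra light from opening up from slower_t to faster_t.

    Light through the lens scales with 1 / T^2, so one stop is a factor of sqrt(2) in T.
    """
    return 2 * math.log2(slower_t / faster_t)


if __name__ == "__main__":
    print(f"125 EI film vs 3,200 EI digital: {stops_between_iso(125, 3200):.2f} stops")

    # Hypothetical dusk sequence; the T2.8 starting aperture is an assumption.
    steps = {
        "rate 125 ASA film at 200 on the meter": stops_between_iso(125, 200),
        "remove the 85 filter": FILTER_85_STOPS,
        "open up from T2.8 to T1.1": stops_between_t_stops(2.8, 1.1),
    }
    for label, stops in steps.items():
        print(f"{label}: +{stops:.2f} stops")
    print(f"total extra low-light headroom: +{sum(steps.values()):.2f} stops")
```

The first line works out to about 4.7 stops, which is where the roughly five-stop comparison comes from. Rating 125 ASA film at 200 comes to about 0.68 of a stop, matching the 2/3-stop underexposure described above.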

If they needed to push even further into low light, he would sometimes shoot at 12 or 18 frames per second and lengthen the shutter speed from 1/50 to 1/16 of a second. In these cases they’d also ask the actors to move more slowly than usual, to mask the sped-up motion that would otherwise appear when the footage was played back at normal speed - reaping the final moments of available natural light before everything went dark.
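With a rotary shutter, each frame’s exposure time is the shutter angle divided by 360, divided by the frame rate. The sketch below shows how much extra exposure the move from 1/50 to 1/16 of a second buys, and why motion looks faster on playback. The 270-degree shutter and the 24 fps playback rate are illustrative assumptions, not details from the text:

```python
import math

def exposure_time(fps: float, shutter_angle: float) -> float:
    """Seconds each frame is exposed with a rotary shutter."""
    return (shutter_angle / 360.0) / fps

def stops_from_time(t_from: float, t_to: float) -> float:
    """Extra stops gained by lengthening the exposure from t_from to t_to."""
    return math.log2(t_to / t_from)

def playback_speedup(capture_fps: float, playback_fps: float = 24.0) -> float:
    """How much faster motion appears when played back at playback_fps."""
    return playback_fps / capture_fps

print(f"1/50 s -> 1/16 s gains {stops_from_time(1 / 50, 1 / 16):.2f} stops")
print(f"12 fps with a 270-degree shutter exposes for {exposure_time(12, 270)} s")
print(f"Speed-up on playback: {playback_speedup(12):.0f}x at 12 fps, "
      f"{playback_speedup(18):.2f}x at 18 fps")
```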

However, when he wasn’t working with the smallest amounts of available light, Almendros actually preferred not to shoot with the aperture wide open. He felt the best depth of field came from stopping down slightly, so that the background wasn’t a complete blur and could still be made out, yet was soft enough to separate the characters from it and make them stand out.
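This preference can be illustrated with the standard thin-lens depth-of-field approximation. The sketch assumes a 0.025 mm circle of confusion (a common figure for 35mm), a subject at a hypothetical 3 m, and treats T-stops as f-numbers, which is only approximate since depth of field depends on the f-number rather than transmission:

```python
def depth_of_field(focal_mm, f_number, subject_mm, coc_mm=0.025):
    """Near and far limits of acceptable focus in mm (thin-lens approximation)."""
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        far = float("inf")  # everything out to infinity is acceptably sharp
    else:
        far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far

# Hypothetical example: a 55mm lens focused on an actor 3 m away
for stop in (1.1, 2.8):
    near, far = depth_of_field(55, stop, 3000)
    print(f"f/{stop}: sharp from {near / 1000:.2f} m to {far / 1000:.2f} m "
          f"({far - near:.0f} mm deep)")
```

Stopping down from f/1.1 to f/2.8 roughly multiplies the zone of sharp focus by 2.5 in this example, enough for the background to start becoming legible while still falling off.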

He also devised other DIY tools to simulate naturalistic lighting. For example, he used flame jets fed by gas tanks, which could be easily handled and had a controllable flame. These could be brought close to actors to light them naturalistically in scenes involving fire, rather than relying on electric lights - which had been standard practice.

To achieve a wide shot of locusts taking flight in-camera, without post-production visual effects, Almendros again drew on his knowledge of cinema - this time a technique from a movie called The Good Earth. Helicopters hovered just above the shot and released seeds and peanut shells, and to get the effect of the insects taking off, the actors performed their actions in reverse.

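This was shot on an old Arriflex that could run film backwards, so that when the footage was played forwards the recorded action appeared reversed: the falling seeds and shells seemed to rise into the air, while the actors’ backwards movements looked natural.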

This innovation also extended into camera movement. Days Of Heaven was the first film to use the Panaglide - Panavision’s lightweight alternative to the Steadicam, which could be used to get sweeping, tracking shots with actors over uneven natural terrain.

CONCLUSION

Much of what we take for granted in cinematography today, like shooting in low light, using practical sources, and motivating lighting from natural sources rather than relying on three-point lighting, was pushed forward by Almendros’ work.

He was able to use his knowledge of cinema to inform his taste and storytelling techniques, then pushed established technical boundaries and ways of thinking to make his cinematography extremely beautiful but also extremely influential.

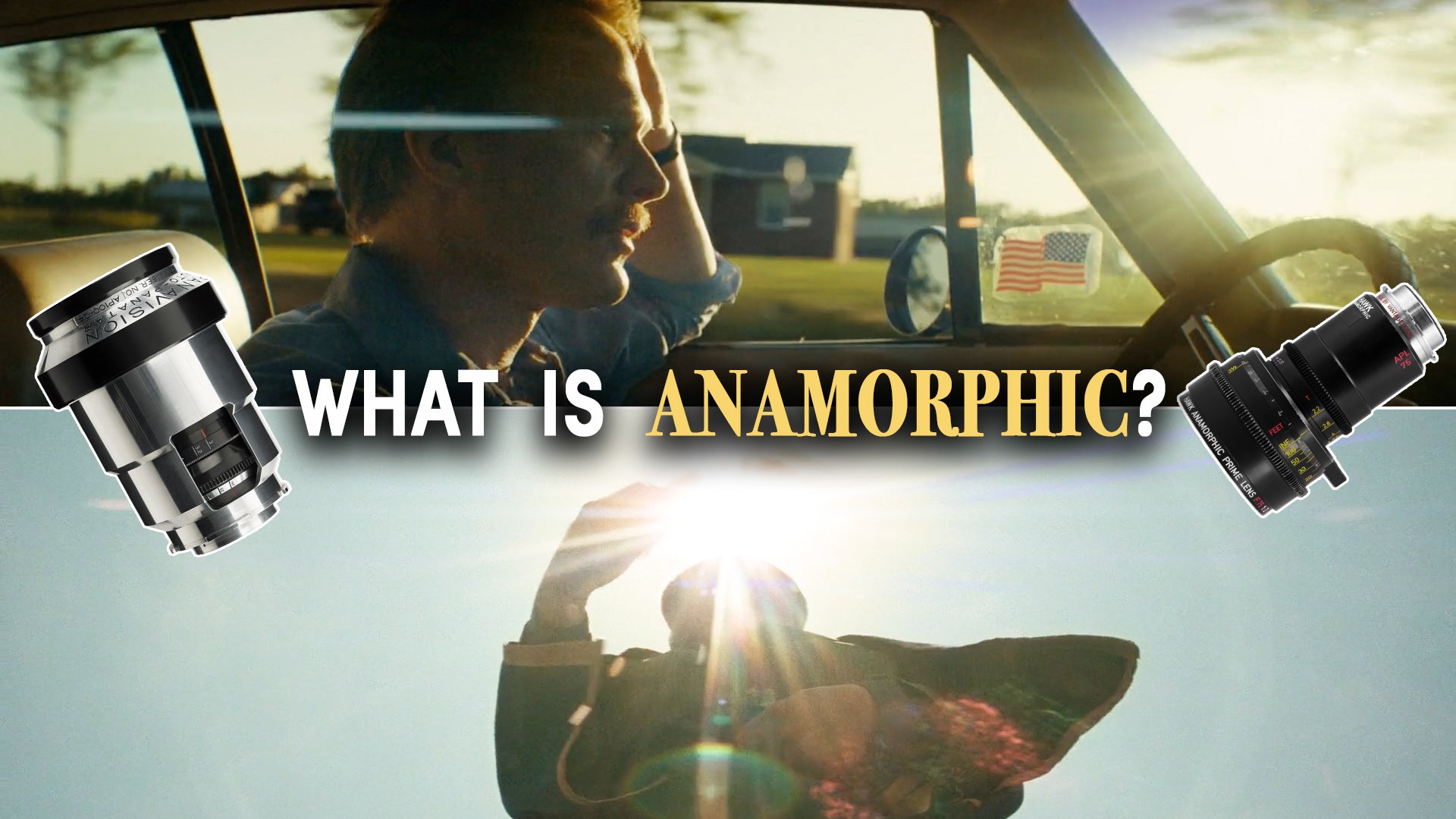

What Makes Anamorphic Lenses Different?

Let's dive a bit deeper into how anamorphic lenses work and what makes them different from regular spherical glass.

INTRODUCTION

Lenses are one of the tools in a cinematographer’s toolbox that can influence both how stories are visually presented and how they are practically shot.

Anamorphic glass is different to normal spherical lenses in many ways, so much so that shooting anamorphic is considered a different format altogether.

But what is it that makes these lenses different? Let’s do a bit of a deep dive.

WHAT ARE ANAMORPHIC LENSES?

When light passes through an ordinary lens, it forms an image which is correctly proportioned and can be used straight away. Anamorphic lenses are a bit funky. Because they use cylindrical glass elements, light passing through them gets squeezed horizontally, producing an image which is compressed.

Kind of like how fairground mirrors can squeeze reflections to make you look long and lanky.

This contorted format was invented for two reasons: to create a widescreen aspect ratio, while at the same time maximising the quality or detail in the image. To understand how this works we must quickly dive into the origins of how old 35mm film cameras worked.

35mm film runs vertically through the camera, which exposes the full width of the negative at a height of four of these little perforations. This is all well and good when shooting in the old, taller Academy aspect ratio, but what if you wanted to film and present images in a widescreen format?

Well, you could take that taller frame and chop off the top and bottom with a mask. But that meant wasting a lot of expensive film, both when capturing and when projecting, on areas that were simply blacked out. And because the full height of the negative was cropped, the recording area was smaller, which decreased the clarity and quality of the image and increased the amount of visible film grain.
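To put rough numbers on that waste, the sketch below compares masking a 4-perf frame to 2.39:1 with squeezing a 2x anamorphic image onto the full negative height. The gate dimensions are approximate and simplified (no soundtrack area is reserved), so treat the percentages as ballpark figures:

```python
def fraction_used_by_crop(gate_w, gate_h, target_aspect):
    """Fraction of the gate area kept when masking the top and bottom to a wider ratio."""
    cropped_h = gate_w / target_aspect  # full width is kept, so only height shrinks
    return min(cropped_h / gate_h, 1.0)

def fraction_used_by_squeeze(gate_w, gate_h, target_aspect, squeeze):
    """Fraction of the gate area used when a lens squeezes the image horizontally."""
    needed_aspect = target_aspect / squeeze  # aspect ratio of the image on the negative
    used_w = min(gate_h * needed_aspect, gate_w)
    used_h = used_w / needed_aspect
    return (used_w * used_h) / (gate_w * gate_h)

GATE_W, GATE_H = 24.89, 18.66  # assumed approximate 4-perf gate size in mm

print(f"Masked to 2.39:1: {fraction_used_by_crop(GATE_W, GATE_H, 2.39):.0%} of the frame")
print(f"2x anamorphic:    {fraction_used_by_squeeze(GATE_W, GATE_H, 2.39, 2.0):.0%} of the frame")
```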

SQUEEZE FACTOR

Anamorphic lenses fixed this by recording the full height and width of each four-perf frame, squeezing the image so that it covered the entire negative. When the film was screened in a cinema, an extra anamorphic lens attached to the projector desqueezed the image, reverting it to its correct proportions by stretching it out by the same amount that the camera lens had originally squeezed it.

The amount that the lenses compress the image is called the squeeze factor. This refers to the ratio of horizontal to vertical information captured by an anamorphic lens. Regular spherical lenses, which capture normal-looking images, have a factor of 1x, where horizontal and vertical information are recorded at the same scale. Anamorphic lenses usually have a squeeze factor of 2x, which means the image is compressed horizontally to half its width, so twice as much horizontal information is packed into the frame relative to the vertical.
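Desqueezing multiplies the horizontal dimension by the squeeze factor, so the final aspect ratio is simply the captured aspect ratio times the factor. A minimal sketch, with an illustrative captured ratio:

```python
def desqueezed_aspect(captured_w, captured_h, squeeze):
    """Aspect ratio after desqueezing: only the horizontal size is scaled."""
    return (captured_w / captured_h) * squeeze

# An image recorded at roughly 1.195:1 on the negative through a 2x lens
print(round(desqueezed_aspect(1.195, 1.0, 2.0), 2))  # 2.39
# A spherical (1x) lens leaves the proportions unchanged
print(desqueezed_aspect(4, 3, 1.0))
```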

Although a 2x factor is the norm for anamorphic glass, there are also some lenses with different squeeze factors out there - which we’ll get to a bit later.

DESQUEEZE

Now that all post production, and almost all cinema projection, happens digitally rather than with film - the method for desqueezing footage has also changed.

You can now import files shot with anamorphic lenses into editing software and apply settings that desqueeze the footage digitally, for example by a factor of two, restoring its correct proportions in a native widescreen aspect ratio.
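Conceptually, a digital desqueeze stretches each row of pixels horizontally by the squeeze factor. Editing software typically handles this through pixel aspect ratio settings or higher-quality resampling; the nearest-neighbour version below, working on a toy image stored as a list of rows, is only meant to show the idea:

```python
def desqueeze(image, factor):
    """Stretch an image horizontally by `factor` using nearest-neighbour sampling.

    `image` is a list of rows, each row a list of pixel values.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    new_width = round(width * factor)
    return [
        # each output column samples the source column it maps back to
        [row[min(int(x / factor), width - 1)] for x in range(new_width)]
        for row in image
    ]

squeezed = [[1, 2, 3], [4, 5, 6]]
print(desqueeze(squeezed, 2))  # [[1, 1, 2, 2, 3, 3], [4, 4, 5, 5, 6, 6]]
```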

ASPECT RATIO

Although the exact aspect ratio, or width, for anamorphic capture and projection may vary ever so slightly depending on a few factors, it will usually be either 2.35:1 or, nowadays, 2.39:1 - which is commonly rounded up and referred to as 2.40:1.

SUPER 35

Earlier I mentioned that an alternative way to get to this widescreen aspect ratio is to shoot with spherical lenses, which have a 1x factor, and crop off the top and bottom of the frame.

Although this method uses less recording area and yields slightly diminished quality and resolution of detail, it is still very commonly chosen over anamorphic for a number of reasons.

Because widescreen Super 35 records extra information on the top and bottom of the frame, this can be useful in post for things like CGI, stabilising the image with software, or cropping out unwanted things by reframing up or down.
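How much room there is to reframe depends on how much taller the captured frame is than the widescreen extraction. This sketch assumes a hypothetical 4096 x 3072 (4:3) capture with a 2.39:1 extraction starting from the centre:

```python
def reframe_headroom(frame_w, frame_h, target_aspect):
    """Vertical room left when extracting a wider aspect ratio from a taller frame.

    Returns (extraction_height, max_shift): max_shift is how many pixels the
    extraction window can move up or down from centre.
    """
    extraction_h = round(frame_w / target_aspect)
    spare = max(frame_h - extraction_h, 0)
    return extraction_h, spare // 2

print(reframe_headroom(4096, 3072, 2.39))  # (1714, 679)
```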

SPHERICAL VS ANAMORPHIC CHARACTERISTICS

Spherical lenses are also usually sharper across the width of the frame, meaning that details on the edges of the shot that are in focus will remain sharp. Anamorphic lenses have a sweet spot in the middle of the frame that will be sharp when in focus, while the edges of the frame will resolve detail less sharply.

Another difference between spherical and anamorphic lenses is how they render bokeh - the out-of-focus areas of an image. Because the glass elements in 1x spherical lenses are rounded, they produce round balls of bokeh.

However, anamorphic lenses, with their cylindrical elements that squeeze the image, create bokeh which takes on more of an oval shape. This shape is also affected by where the cylindrical glass element is placed within the lens.

Most true anamorphic lenses place the cylindrical element at the front of the lens, with regular circular elements behind it. These are called front anamorphics and produce that classic oval bokeh in the background.

There are also rear anamorphic lenses, which instead place the cylindrical element at the back of the lens, with the rest of the circular elements in front of it. This is often done to create Frankenstein anamorphised zooms, which take an existing spherical zoom lens and add a rear anamorphic element to the back of it.

This has the same effect of squeezing the image, however rear anamorphics often lose the oval bokeh shape, which becomes a bit more rounded or even, in some cases, rectangular.

Probably the most defining characteristic of anamorphics is their flare. When direct, hard light enters these lenses it produces a horizontal flare across the width of the image - which is usually quite pronounced.

Spherical flares tend to be tamer and subtler, and flare in a more circular way rather than horizontally.

ANAMORPHIC IN A DIGITAL WORLD

Many digital cinema cameras used a sensor that approximately modelled the size of a Super 35 negative, with a 4:3 ratio. This meant that most existing anamorphic lenses designed for four-perf film could cover the width of these digital sensors without vignetting.

Like on film, these 2x anamorphic lenses could cover the full height and most of the width of the sensor, filling a greater overall surface area than shooting a cropped Super 35 image with spherical lenses.

However, not all digital sensors used such a tall 4:3 ratio. Some sensors were designed to be more of a 16:9 size. There are some anamorphic lenses with a different 1.3x squeeze factor, instead of the standard 2x squeeze, that cover these wider sensors and still produce a widescreen image with a 2.40 aspect ratio.
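Multiplying a sensor’s aspect ratio by the squeeze factor shows where each combination lands before the final crop to 2.39:1. This is a simplified sketch using nominal 4:3 and 16:9 ratios; real sensor dimensions and lens coverage vary:

```python
def crop_to_target(sensor_w, sensor_h, squeeze, target=2.39):
    """Desqueeze a sensor frame and report which dimension to trim to reach `target`.

    Returns (desqueezed_aspect, edge_to_trim, fraction_trimmed).
    """
    w = sensor_w * squeeze  # desqueezed width
    h = sensor_h
    aspect = w / h
    if aspect > target:
        # Too wide: keep the full height, trim the sides.
        return aspect, "sides", 1 - (h * target) / w
    # Too tall (or exact): keep the full width, trim top and bottom.
    return aspect, "top/bottom", 1 - (w / target) / h

for name, (w, h), squeeze in [("4:3 sensor", (4, 3), 2.0), ("16:9 sensor", (16, 9), 1.3)]:
    aspect, edge, fraction = crop_to_target(w, h, squeeze)
    print(f"{name} with {squeeze}x: {aspect:.2f}:1, trim {edge} by {fraction:.0%}")
```

In this simplified model a 4:3 sensor with 2x glass lands wider than 2.39:1 and is trimmed at the sides, while a 16:9 sensor with 1.3x lands at about 2.31:1, close enough that only a small top-and-bottom trim is needed.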

In recent years, full frame and large format digital cameras have surged in popularity. Because these sensors are significantly larger than Super 35, most 2x anamorphic lenses don’t have glass elements wide enough to cover them: the sensor sees the inside of the lens and the image vignettes.

Anamorphic lenses with various squeeze factors have been designed to cover these format sizes, from 1.3x to 1.8x.

LIMITATIONS

A limitation of shooting with large format or full frame anamorphic lenses is that there is a smaller selection to choose from, and this glass is typically more expensive than comparable spherical options.

Spherical prime sets also usually come in far more focal lengths. For example, a modern set of spherical primes like the Master Primes comes in 16 focal lengths, whereas a modern set of anamorphic primes like the G-Series comes in eight.

This sometimes means that cinematographers like to pair a set of anamorphic primes with a longer zoom - which may either be an anamorphised rear zoom, like we mentioned before, or a front anamorphic zoom like the Panavision 70-200mm.

Another potential limitation of anamorphics, especially front anamorphic zooms, is that their more complex design and greater number of glass elements usually give them a slower stop than their spherical peers. This renders a tad less bokeh and makes it more difficult to shoot in very low light conditions.

They are also, on average, physically larger and heavier than spherical lenses, with the gigantic Primos being a great example of just how hefty a prime anamorphic lens can get, although many lighter alternatives do exist.

Close focus can also be an issue. The extra glass in anamorphic lenses means that the MOD (minimum object distance), the closest point at which the lens can render an object in sharp focus, is usually relatively far away. 1x spherical glass is normally far better at this. So, if filmmakers want to shoot an extreme close-up on an anamorphic lens, they will need to add a diopter filter, which lets the lens focus closer for more of a macro look.
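A close-up diopter adds optical power, and in a simple thin-lens approximation the powers add: the new focus distance in metres is one over the diopter strength plus one over the distance the lens is focused at. This sketch ignores the spacing between diopter and lens, and the 0.9 m MOD is a hypothetical figure:

```python
def focus_with_diopter(lens_focus_m, diopter):
    """Approximate focus distance (m) after adding a close-up diopter.

    Thin-lens approximation: 1 / d_new = diopter + 1 / d_lens.
    A lens set to infinity (float('inf')) then focuses at 1 / diopter.
    """
    return 1.0 / (diopter + 1.0 / lens_focus_m)

print(focus_with_diopter(float("inf"), 1))   # 1.0
print(round(focus_with_diopter(0.9, 1), 2))  # 0.47, with a hypothetical 0.9 m MOD
```

With a +1 diopter, a lens that could only focus down to 0.9 m can reach roughly half that distance, at the cost of no longer being able to focus far away.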

Visually, anamorphics produce more distortion, with the wider focal lengths, around 40mm and wider, bending the edges of the frame - which is especially noticeable when shooting something with a straight line like a door frame.

WHY CHOOSE ANAMORPHIC LENSES?

So, broadly, spherical lenses offer a greater practical flexibility to cinematographers, while anamorphic lenses offer a specific look, in exchange for a few practical tradeoffs.

Overall, DPs who like a clean look to their footage, which is sharp across the frame and free of aberrations or distortion often like to go with spherical glass and crop to get a wide aspect ratio. Whereas those looking to add a touch more visual character to the footage to make it a little less perfect, which is often done to counteract the sharpness of modern high res digital cameras, may prefer the look of older anamorphic lenses.

Having said that, there are exceptions to this. Old, vintage spherical lenses exist which offer a lot of imperfections, as well as modern anamorphic lenses which are very sharp and clean.

In the end, anamorphic lenses can give projects a look that has long been considered classically cinematic, with their oval bokeh, lateral flares, falloff and native widescreen ratio. However, this does come with a few practical tradeoffs which may need to be considered by filmmakers.

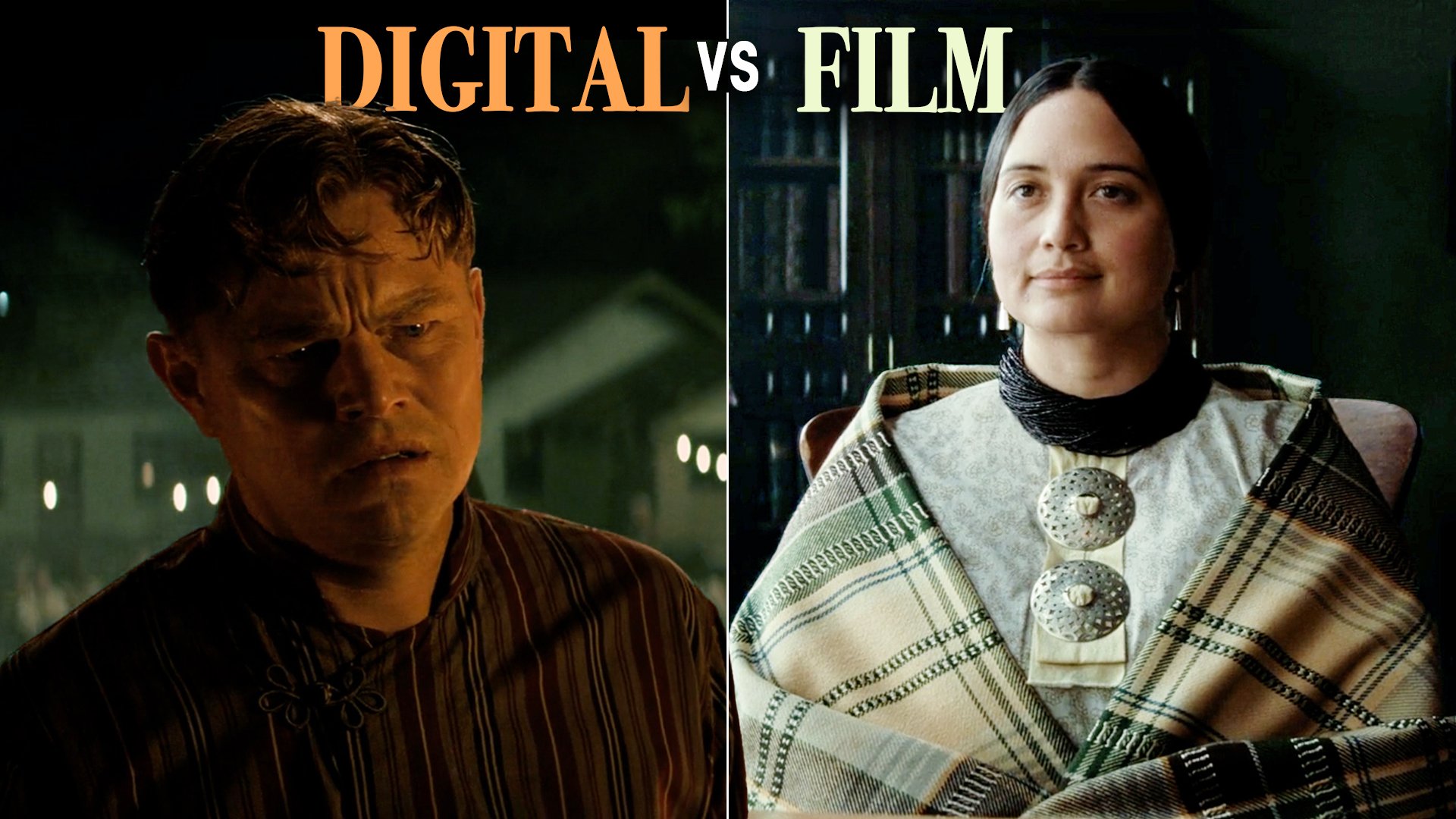

Mixing Film And Digital Footage: Killers Of The Flower Moon

Let's break down the cinematography - specifically the use of colour and LUTs - in Killers Of The Flower Moon.

INTRODUCTION

Colour is a tool that plays a crucial role in cinematography and can be manipulated to craft a bunch of different looks.

Some of these looks can be pretty heavy-handed, like the quote-unquote ‘Mexican filter’ seen in films like Traffic (2000), which punches up the warmth every time the world of the story moves south of the US border.

But other applications of colour, like those in Killers Of The Flower Moon, are a bit more subtle, yet have an unconscious effect on how audiences take in the story.

The workflow behind this cinematography combines old school thinking with new school technology. This comes from the choice to shoot on both film and digital cameras in different situations, and by thinking about LUTs and digital colour correction in terms of old photochemical techniques.

So, let’s explain how these principles work by breaking down the cinematography in Killers Of The Flower Moon.

MIXING FORMATS