The Last Colour Negative Motion Picture Film In The World: Kodak Vision 3

Let’s use this video to examine the last remaining range of colour negative film stocks, go over how to choose the right film, how to identify each stock’s specifications based on the label of their film can, and talk about the visual characteristics that contribute to the ‘shot on Kodak’ look.

INTRODUCTION

Shooting on film, in both photography and in the world of cinema, has seen a bit of a resurgence in recent times. After the release of capable, high end digital cinema cameras - like the Arri Alexa in 2010 - many may have thought that the era of shooting movies photochemically was done and dusted. However, over a decade later, motion picture film still exists.

But, unlike in photography where there are still quite a few different films to choose from, in the world of motion picture film there is only one commercially mass produced category of colour negative film that remains. From one company. Kodak Vision 3.

So, let’s use this video to examine the last remaining range of film stocks, go over how to choose the right film, how to identify each stock’s specifications based on the label of their film can, and talk about the visual characteristics that contribute to the ‘shot on Kodak’ look.

CHOOSING THE RIGHT FILM

When cinematographers shoot on film there are three basic criteria that will inform what film stock they choose to shoot on: the gauge, the speed and the colour balance.

First, you need to decide what gauge or size of film you will shoot on. This may be determined on the basis of budget, or due to a stylistic choice based on the look of the format.

The four standardised film sizes to choose from are: 8mm, 16mm, 35mm and 65mm.

The narrower the film, the less of it you need to use and the cheaper it will be, but the image will have less sharpness and clarity and more grain. The wider the film, the more of it you need, the more expensive it'll be, and the higher the fidelity and the less grain it will have.

Next, you’ll need to decide on what film speed you want to shoot at. This is a measurement of how sensitive the film is to light and is comparable to EI or ISO on a digital camera.

Basically, the more light you’re shooting in, the lower the film speed needs to be. So bright, sunny exteriors can be shot on a 50 speed film, while dark interiors usually call for a 500 speed film.
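Because film speed works on a doubling scale, the gap between two stocks can be expressed in stops. Here's a minimal Python sketch, assuming the usual convention that each doubling of EI equals one stop; the function name is hypothetical and the table just lists the Vision 3 box speeds covered in this video.

```python
import math


def stops_between(slower_ei: float, faster_ei: float) -> float:
    """Return how many stops more sensitive `faster_ei` is than `slower_ei`.

    Each doubling of EI is one stop, so the gap is log2 of the ratio.
    """
    return math.log2(faster_ei / slower_ei)


# Box speeds of the four Kodak Vision 3 stocks
VISION3_BOX_SPEEDS = {"50D": 50, "200T": 200, "250D": 250, "500T": 500}

for stock, ei in VISION3_BOX_SPEEDS.items():
    gap = stops_between(VISION3_BOX_SPEEDS["50D"], ei)
    print(f"{stock}: {gap:.2f} stops faster than 50D")
```

So moving from 50D to 500T gains roughly 3.3 stops of sensitivity, which is why the two sit at opposite ends of the lighting spectrum.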

Finally, films come in two colour balances: daylight and tungsten. This refers to the colour temperature of the light source that they are designed to be shot in. So when shooting under natural sunlight or with film lights like HMIs that have a colour temperature of approximately 5,500K it’s recommended to use a daylight stock. When shooting with warmer tungsten light sources, a tungsten balanced film should be used to get the correct colour balance.

As a side note, it is still possible to shoot a tungsten film, like 500T, in cooler sunlight.

Kodak recommends using a warm 85 filter and exposing the film at 320 instead of 500. However, some cinematographers, like Sayombhu Mukdeeprom, prefer to shoot tungsten stocks in daylight without an 85 filter and then warm up the processed and scanned images to the correct colour balance in the colour grade.

HOW TO READ A FILM LABEL

Within the Kodak Vision 3 range there are 4 remaining film stocks in production. Two daylight balanced stocks - 50D and 250D - and two tungsten stocks - 200T and 500T.

One of the best ways to further unpack the technical side of what makes up a film is to look at the information on the label that comes with every can.

The biggest and boldest font is how we identify what kind of film it is. This is broken into two parts. 50 refers to the film speed or EI that it should be metered at. So cinematographers shooting a 50 EI film will set the ISO on their light meter to 50 to achieve an even or ‘box speed’ exposure of the image.

‘D’ refers to daylight. So this is a daylight balanced film.

The second part, 5203, is a code to identify what type of film it is. Every motion picture film has a different series of numbers that is used to identify it. So 35mm Kodak Vision 3 50D is 5203. 8622 is 16mm Fujifilm Super-F 64D. 7219 is 16mm Kodak Vision 3 500T.

It’s crucial that all cans of film that are shot are labelled with this code when sent to the film lab for development, so that the lab can identify the stock and develop it correctly: at box speed, unless a push or pull has been requested.
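Kodak's numbering follows a pattern that can be decoded mechanically. A minimal sketch, assuming Kodak's convention that the first two digits give the gauge family (52 for 35mm, a prefix 65mm stock also carries, and 72 for 16mm, which Super 8 also uses) and the last two identify the emulsion. The function name and tables are hypothetical and only cover the four Vision 3 stocks; Fujifilm codes like 8622 follow their own scheme and aren't handled here.

```python
# Hypothetical decoder for Kodak Vision 3 product codes.
# Assumption: first two digits = gauge family, last two = emulsion.
GAUGE_PREFIX = {"52": "35mm/65mm", "72": "16mm/Super 8"}
VISION3_EMULSION = {"03": "50D", "07": "250D", "13": "200T", "19": "500T"}


def decode_kodak_code(code: str) -> tuple[str, str]:
    """Split a four-digit Kodak code such as '5203' into (gauge, stock)."""
    if len(code) != 4 or not code.isdigit():
        raise ValueError(f"expected a four-digit code, got {code!r}")
    prefix, emulsion = code[:2], code[2:]
    try:
        return GAUGE_PREFIX[prefix], VISION3_EMULSION[emulsion]
    except KeyError:
        raise ValueError(f"{code} is not a Kodak Vision 3 code in this table") from None


print(decode_kodak_code("5203"))  # ('35mm/65mm', '50D')
print(decode_kodak_code("7219"))  # ('16mm/Super 8', '500T')
```

Anything outside the table raises an error rather than guessing, since a wrong stock on a lab report is worse than no stock at all.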

This brings us to the next text, develop ECN-2. This refers to how the film needs to be developed. ECN-2 works on the same principle as C-41, the process used for colour negative film in photography: the film is passed through a series of chemical baths. The chemistry of the two processes differs, though.

However, it also includes an extra step where the remjet layer on the Vision 3 film is removed. Remjet is used to minimise the halation of highlights and decrease static from the film quickly passing through the camera at 24 frames per second.

Next, we have a table that indicates how the film should be exposed in different lighting conditions. Under daylight no extra filters are required and the film can be exposed with an EI or ISO of 50.

When shooting with a 3,200K tungsten light source Kodak recommends using a cooling 80A filter - which changes the light from 3,200K to 5,500K or daylight. Adding this filter lets through less light, so in this situation Kodak recommends exposing the film with an EI of 12.
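Both of Kodak's filter recommendations come from the same arithmetic: divide the box speed by the light the filter absorbs, then round to the nearest standard meter setting. A sketch, assuming approximate losses of 2/3 of a stop for an 85 and 2 stops for an 80A; the function name is hypothetical.

```python
import math

# Standard one-third-stop EI series (the values a light meter steps through)
THIRD_STOP_EIS = [6, 8, 10, 12, 16, 20, 25, 32, 40, 50, 64, 80, 100, 125, 160,
                  200, 250, 320, 400, 500, 640, 800, 1000]


def filtered_ei(box_ei: float, filter_loss_stops: float) -> int:
    """Reduce an EI by a filter's light loss and snap to the nearest 1/3-stop value."""
    exact = box_ei / 2 ** filter_loss_stops
    # Compare on a log scale, because stops are logarithmic
    return min(THIRD_STOP_EIS, key=lambda ei: abs(math.log2(ei / exact)))


# Assumed filter losses: 85 is about 2/3 stop, 80A about 2 stops
print(filtered_ei(500, 2 / 3))  # 320: 500T in daylight with an 85
print(filtered_ei(50, 2))       # 12: 50D under tungsten with an 80A
```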

The 35 indicates that the film comes in the 35mm gauge, while the numbers next to it refer to the type of perforations along the edges of the film.

And, the final important number refers to how many feet of film the roll contains.

When shooting on 35mm the most common roll length is 400ft - which is used for lighter camera builds. But 1000ft rolls can also be used in larger studio magazines that allow filmmakers to roll the camera for longer before needing to reload.
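Roll length translates directly into running time. A sketch, assuming 4-perf 35mm (16 frames per foot) and standard 16mm (40 frames per foot) shot at 24 frames per second; other perforation formats would change the numbers, and the function name is hypothetical.

```python
# Frames per foot for the formats assumed here
FRAMES_PER_FOOT = {"35mm 4-perf": 16, "16mm": 40}


def running_time_minutes(feet: float, fmt: str, fps: float = 24) -> float:
    """How long a roll lasts, in minutes, when run continuously at `fps`."""
    frames = feet * FRAMES_PER_FOOT[fmt]
    return frames / fps / 60


for fmt in FRAMES_PER_FOOT:
    for feet in (400, 1000):
        minutes = running_time_minutes(feet, fmt)
        print(f"{feet}ft of {fmt} at 24 fps: {minutes:.1f} minutes")
```

So a 400ft 35mm magazine lasts under four and a half minutes, while a 1000ft roll runs a little over eleven, which is the practical case for bigger studio magazines.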

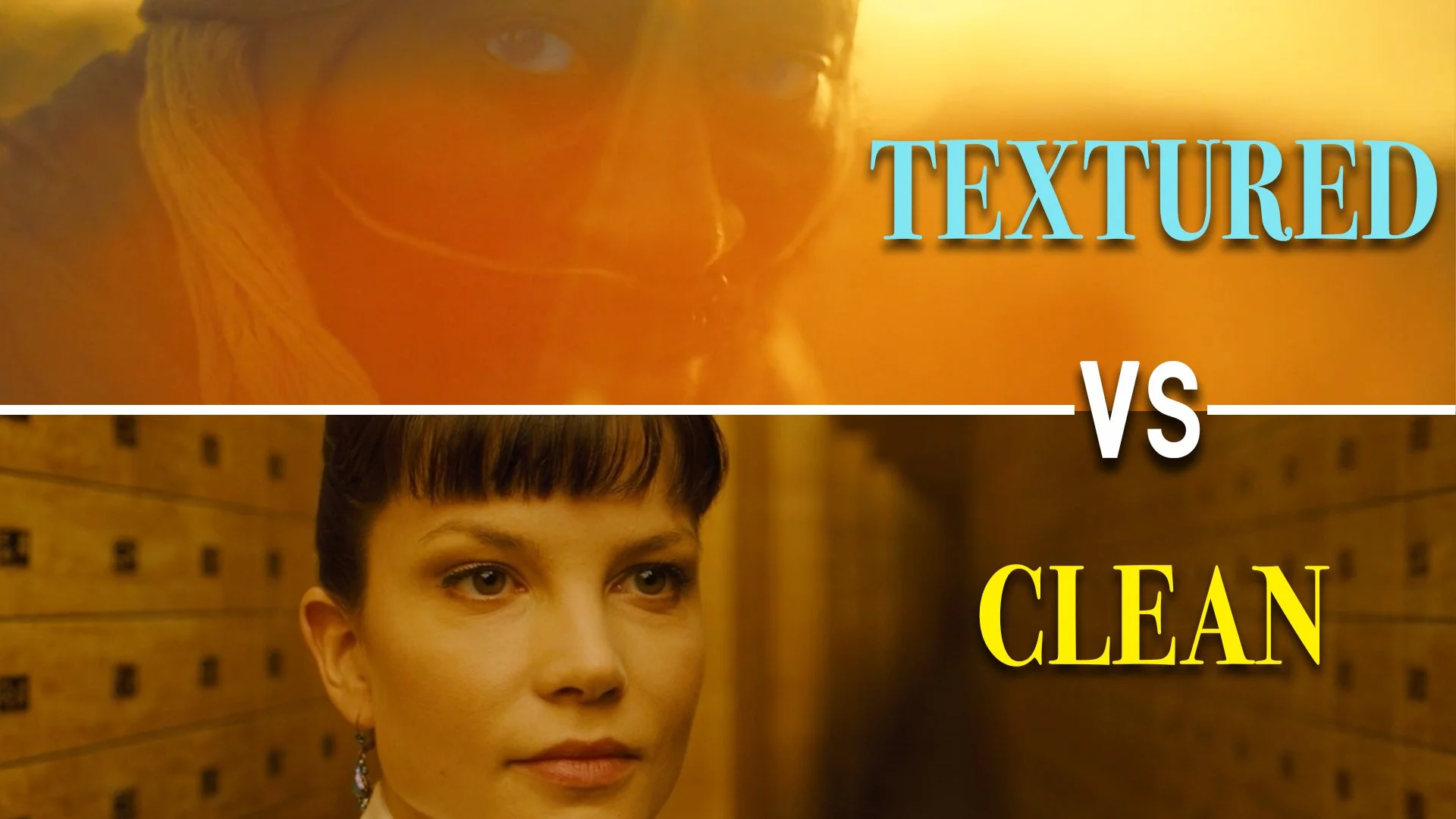

KODAK VISION 3 CHARACTERISTICS

There’s a good reason why many DPs who shoot on digital cinema cameras still try to create a Kodak ‘look’ for footage using a LUT or in the colour grade.

Whether it’s the result of the long legacy of shooting movies on film, or whether it’s just that filmic colour is actually more visually appealing, the film look remains sought after. However, it’s important to remember that the look of film has changed over the years as manufacturers have changed their emulsions.

For example, many iconic Hollywood films from the 70s that were shot with the famous 5254 have a more neutral, crushed, grainy look than modern Vision 3.

Also, keep in mind that modern productions shot on film are all scanned and then graded in post. So the colour in the final file may be different depending on how much the colourist remained true to, or strayed from, the original colour in the negative.

Kodak film has always been considered rich, with good contrast and warmer than Fujifilm - which has more pronounced blues and greens.

As it’s the most modern, the Vision 3 range is the cleanest looking motion picture film stock produced. The most sensitive of the bunch, 500T, has very low grain - even when push processed.

For this reason, filmmakers who seek a deliberately high grain, textured image these days regularly opt to shoot in 16mm, rather than the lower grain 35mm.

The colour produced is on the warmer side - which helps to create beautiful, rich looking skin tones that are more saturated than Kodak’s older Vision 2 stock.

Vision 3 film also has a big dynamic range of approximately 14 stops - which is more than older films. This means that when it’s scanned and converted to a digital file, the colourist is able to do more with it, such as use power windows to recover highlights from over exposed areas.
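Since each stop doubles the amount of light, a dynamic range quoted in stops can be turned into a brightness ratio. A quick sketch of that arithmetic, taking the approximate 14 stop figure at face value; the 12 stop comparison is a hypothetical value for illustration only.

```python
def stops_to_contrast_ratio(stops: float) -> float:
    """n stops of latitude span a brightness ratio of 2**n to 1."""
    return 2.0 ** stops


# 12 stops is a hypothetical comparison point, not a measured stock
for stops in (12, 14):
    print(f"{stops} stops -> {stops_to_contrast_ratio(stops):,.0f}:1")
```

Every two extra stops quadruple the ratio, which is where the colourist's extra room to recover highlights comes from.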

“As a colourist my job is to try to build a good contrast level and keep the detail in the lowlights. I find that the 5219 stock was designed so that I can have that contrast and the detail as well without having to do anything extra like power windows to pull the detail out.” - Mike Sowa, Colourist

What I especially love about the film is how it renders the highlights with a subtly blooming halation effect and how it renders detail in a way that is not overly sharp.

With modern post production colour, it’s possible to almost exactly replicate this look with digital footage. You can get almost identical colours, and you can add scans of film grain on top of the image. But, to me, what still can’t be recreated in post is the organic way that film renders detail.

CONCLUSION

So that brings us to the end of this video. As always, a final thanks to all of the kind Patrons who keep the channel going with their support and receive these videos early and free of ads. Otherwise, until next time, thanks for watching and goodbye.

How Jordan Peele Shoots A Film At 3 Budget Levels

Jordan Peele is a director who possesses a true love of genre - especially the horror genre. His films have used genre as a structural framework, which are filled in with satirical stories that explore wider themes, ideas and issues in society, told through the eyes of his protagonists. In this video I’ll explore the work of Jordan Peele by looking at three films that he has directed at three increasing budget levels: Get Out, Us, and Nope.

INTRODUCTION

Jordan Peele is a director who possesses a true love of genre - especially the horror genre. His films have used genre as a structural framework, which are filled in with satirical stories that explore wider themes, ideas and issues in society, told through the eyes of his protagonists.

Telling stories in such a bold, direct manner, which at times challenges and pokes at the audience’s own insecurities and deep-seated fears, has meant that his films have sometimes received polarised reactions.

In this video I’ll explore the work of Jordan Peele by looking at three films that he has directed at three increasing budget levels: the low budget Get Out, the medium budget Us, and the high budget Nope to unpack the methodology behind his filmmaking and his career.

GET OUT - $4.5 MILLION

Coming from a background in sketch comedy, Peele turned to another genre for his debut feature film.

“I think horror and comedy are very similar. Just in one you’re trying to get a laugh and in one you’re trying to get a scare.” - Jordan Peele

Both genres rely on careful pacing, writing, reveals and filmmaking gags that are used to evoke an emotional response from the audience. He also brought his appreciation for direct satire and social commentary from sketches into the horror screenplay.

In fact, some of the films that inspired him were adaptations of novels by Ira Levin, like The Stepford Wives and Rosemary’s Baby, which are built around the horror genre and underpinned with a satirical commentary on society.

“Those movies were both extremely inspiring because what they did within the thriller genre was this very delicate tightrope walk. Every step into ‘weird town’ that those movies make, there’s an equal effort to justify why the character doesn’t run screaming. That sort of dance between showing something sort of weird and over the top and then showing how easily it can be placed with how weird reality is. That’s the technique I brought to Get Out.” - Jordan Peele

Justifying the actions of the characters so that the audience does not question the decisions that they make is particularly important in the horror genre or any genre that incorporates elements of the supernatural into a story.

Slowly backing the characters up into a corner until they have no escape is what creates the necessary suspenseful environment.

He pitched the script to Blumhouse Productions, who have a track record of producing low budget horror films, under the $6 million mark, and then securing wide studio releases for them that catapult them to financial success, thanks to the broad commercial audience for horror.

It was through Blumhouse that he was connected with DP Toby Oliver who had previously shot other films for the production company.

“It began as the fun of a horror story. In the middle of the process it turned into something more important. The power of story is that it’s one of the few ways that we can really feel empathy and encourage empathy. When you have a protagonist, the whole trick is to bring the audience into the protagonist’s eyes.” - Jordan Peele

Peele puts us in the character’s shoes through the way that he structures almost all of his stories around a central protagonist. He also uses the placement of the camera, how it moves and the overall cinematography to make us see the world from the point of view of the main character.

Oliver lit most of the film in a natural way, presenting the world to the audience in the same way that the protagonist would see it.

“My pitch to him was that I thought the movie should have really quite a naturalistic feel. Not too crazy with the sort of horror conventions in terms of the way it looks. Maybe not until the very end of the movie where we go towards that territory a little bit more. With the more stylised lighting and camera angles.” - Toby Oliver

Rather than leaning on stylised horror camerawork, the camera often tracked with the movement of the protagonist, or stayed still when he was still.

They also shot some POV shots, as if the camera was literally capturing what the character was seeing, or used over the shoulder shots that angled the frame to closely approximate the actor’s point of view.

This framing technique, combined with a widescreen aspect ratio, also stacks the image so that there are different planes within the frame.

“What I love to do as a DP is to have story elements in the foreground, midground and background. When you’re looking through the frame there’s depth that’s telling you something more about the characters and story as you look through it.” - Toby Oliver

One of the challenges that came with the film’s low budget was an incredibly tight 23 day shooting schedule. To counter this they did a lot of planning about how the film would be covered before production started - which included Peele drawing up storyboards for any complicated scenes and walking through the scenes in the house location and taking still photos of each shot they needed to get, which Oliver then converted into a written shot list.

They shot Get Out on two Alexa Minis in 3.2K ProRes to speed up the coverage of scenes, and used Angenieux Optimo zoom lenses instead of primes, which also helped with the quick framing and set up time that was needed.

Overall, Get Out was crafted in its writing as a contained, satirical horror film, shot with limited locations, fairly uncomplicated, considered cinematography through the eyes of its protagonist, and pulled off on the low budget by shooting on a compressed schedule with pre-planned lighting diagrams and shot lists.

US - $20 MILLION

“It really is a movie that was made with a fairly clear social statement in mind, but it's also a movie that I think is best when it's personalised. It’s a movie about the duality of mankind and it’s a movie about our fear of invasion, of the other, of the outsider and the revelation that we are our own worst enemy.” - Jordan Peele

Building on the massive financial success of Get Out, Peele’s follow up film took on a larger scope story that demanded an increased budget. Again, Blumhouse Productions came on board to produce, this time with an estimated budget of $20 million.

Like Get Out, Us was also written as a genre film, this time leaning more into the slasher sub-genre of horror.

“I think what people are going to find in Us is that, much like in Get Out, I’m inspired by many different subgenres of horror. I really tried to make something that incorporates what I love about those and sort of steps into its own, new subgenre.” - Jordan Peele

This time Peele hired Michael Gioulakis to shoot the project, a cinematographer who’d worked in the horror and thriller genre for directors such as M. Night Shyamalan and David Robert Mitchell.

One of the early challenges that they faced in pre-production was a scheduling one. Because they had four leads, who each had a doppelganger in the movie, and changing between shots with those doppelgangers required hours of hair and make-up work, they needed to precisely plan each shot.

“Because you could never shoot a scene like you normally would where you shoot this side and then shoot the other side, we ended up actually boarding pretty much the whole movie. Which helped us to isolate who would be seen in which shot in which scene and then we could move that around and structure our day accordingly with costume and make up changes.” - Michael Gioulakis

The actors would arrive on set and block the scene from both sides of the character. When shooting, they then used a variety of doubles and stand-ins, who would take up one of the doppelgangers’ positions so that the actor had an eyeline to play to. They would shoot the scene from one of the characters’ perspectives and then usually come back the next day and do the other side of the scene.

For some wider two shots they left the camera in the same position and shot one take with one character, one empty plate shot without the characters, and one take with the actor in the other character’s make-up. Or they would shoot the scene with a double and do a face replacement in post production.

Not only was continuity very important for this, but also the lighting setups had to remain consistent between shots.

“I kind of like the idea of heightened realism in lighting. Like a raw, naturalistic look, just a little bit of a slightly surreal look to the light.” - Michael Gioulakis

A great example of this idea can be seen in the opening sequence inside the hall of mirrors where he used soft, low level LED LightBlade 1Ks with a full grid Chimera diffusion to cast a cyan light to give a more surreal feeling to what should be darkness.

Across his work, Peele’s cinematographers often play with the contrast between warm and cool light, and the connotations that warm light during the day is comforting and safe, while bluer light at night is colder, scarier and more dangerous.

This isn’t always the case, but generally in his films, Peele paces moments of comforting characterisation during the day with moments of darker terror at night.

One of the trickier sequences involved shooting on a lake at night. Instead of going the usual route of mimicking moonlight, the DP created a nondescript tungsten key source, punctuated by some lights off in the background to break up the darkness.

His gaffer put a 150 foot condor on either side of the lake, with three 24-light Dinos on each condor to key the scene. They then put up a row of 1Ks and sodium vapour lights as practicals in the background.

The film was shot with an Alexa and Master Primes, using the 27mm and 32mm for about 90% of the film. He exposed everything using a single LUT that had no colour cast at the low end, which kept darker skin tones more neutral.

In the end, Us was shot over a longer production schedule that accommodated double-shooting scenes with the leads, stunt scenes, bigger set design builds, and digital post production work by Industrial Light & Magic.

NOPE - $68 MILLION

“First and foremost I wanted to make a UFO horror film. Of course, it’s like where is the iconic, black, UFO film. Whenever I feel like there’s my favourite movie out there that hasn’t been made, that’s the void I’m trying to fill with my films. It’s like trying to make the film that I wish someone would make for me.” - Jordan Peele

For his next, larger budget endeavour he turned to the UFO subgenre with a screenplay that was larger in scope than his previous films, due to its large action and stunt set pieces and increased visual effects work.

Even though it was a bigger movie, the way in which he told and structured the story is comparable to his other work in a few ways. One - it was written as a genre film, based on horror with offshoots of other subgenres. Two - it was told over a compressed time period using relatively few locations. Three - it featured a small lead cast and told the story directly through the eyes of his protagonist.

With a larger story and a larger budget came the decision, from esteemed high budget cinematographer Hoyte van Hoytema, to shoot the film in a larger format.

“So I talked to Hoyte. Obviously scope was a big thing and I wanted to push myself and I asked him, ‘How would you capture an actual UFO? What camera would you use?’ And that’s what we should use in the movie. Both in the movie and in the meta way. And he said the Imax camera.” - Jordan Peele

So the decision was made that to create an immersive, otherworldly, large scope cinema experience they would shoot on a combination of 15-perf, large format IMAX on Hasselblad lenses and 5-perf 65mm with Panavision Sphero 65 glass.

They stuck to IMAX as much as they could, but had to use Panavision’s System 65 for any intimate dialogue scenes, because the IMAX camera’s noisy film transport makes recording clean sync sound impossible.

They shot the daytime scenes on 65mm Kodak 250D and the dark interiors and night scenes on Kodak 500T. They also used Kodak 50D to capture the aerial footage. The film was processed at box speed, without pushing or pulling, to achieve maximum colour depth and contrast range without any exaggerated film grain.

The most challenging scene for any cinematographer to light is a night exterior in a location which doesn’t have any practical lights to motivate lighting from.

Unlike the night exteriors in Us, which were keyed with tungsten units from an imagined practical source, van Hoytema chose to instead try to simulate the look of moonlight. There are two ways that this is conventionally done.

The first is shooting day for night, where the scene is captured during the day under direct sunlight and then made to look like moonlight, usually by underexposing, cooling the colour and darkening the sky, either in camera or in the grade.

The second way is to shoot at night and use a large, high output source rigged up in the air to illuminate a part of the exterior set. However the larger the area that requires light, the more difficult this becomes.

Van Hoytema came up with an innovative third method that he had previously used to photograph the large exterior lunar sequences on Ad Astra.

He used a decommissioned 3D rig that allowed two cameras to be mounted and customised it so that both cameras were perfectly aligned and shot the same image.

He then attached a custom Arri Alexa 65 which had an infrared sensor that captured skies shot in daylight as dark. A Panavision 65 camera was mounted to capture the same image but in full colour.

In that way they shot two images during the day that they could combine, using the digital infrared footage from the Alexa 65 to produce dark looking skies and pull the colour from the film negative of the Panavision 65.

This gave the night sequences a filmic colour combined with a background which looked like it was lit with moonlight and allowed the audience to ‘see in the dark’.
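The combining step is essentially a luma/chroma merge: brightness from one image, colour from another. The sketch below illustrates that general idea only, not the production's actual pipeline, which isn't described here. It assumes both images are already aligned pixel for pixel (which is what the 3D rig was for) and uses BT.601 coefficients; all function names are hypothetical.

```python
def _clamp(value: float) -> float:
    """Keep a channel value inside the 0..1 range."""
    return max(0.0, min(1.0, value))


def rgb_to_ycbcr(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Split RGB (0..1) into BT.601 luma (Y) and colour differences (Cb, Cr)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    return y, 0.564 * (b - y), 0.713 * (r - y)


def ycbcr_to_rgb(y: float, cb: float, cr: float) -> tuple[float, float, float]:
    """Invert rgb_to_ycbcr, clamping the result to 0..1."""
    r = y + cr / 0.713
    b = y + cb / 0.564
    g = (y - 0.299 * r - 0.114 * b) / 0.587
    return _clamp(r), _clamp(g), _clamp(b)


def merge_pixel(film_rgb: tuple[float, float, float], ir_luma: float) -> tuple[float, float, float]:
    """Keep the film pixel's colour but take its brightness from the infrared plate."""
    _, cb, cr = rgb_to_ycbcr(*film_rgb)
    return ycbcr_to_rgb(ir_luma, cb, cr)


# A bright blue daylight sky on the film scan, which reads as dark in infrared
sky_on_film = (0.55, 0.70, 0.95)
print(merge_pixel(sky_on_film, ir_luma=0.05))  # a dark blue
```

Clamping means strongly saturated colours at very low brightness lose some accuracy, one reason a real composite would need far more care than this per-pixel swap.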

“Shooting on Imax brings its whole own set of challenges to the set. So for somebody that hasn’t shot on Imax you definitely bump yourself out of your comfort zone. By doing tests it became very evident, very early, that the advantages by far outweighed the disadvantages or the nuisances.” - Hoyte van Hoytema

While maintaining many of the story and filmmaking principles from his prior films, Nope was pulled off on a much larger budget that allowed them to shoot in the more expensive large format, with more money dedicated to post production, stunts and large action sequences that the bigger scope script required.

CONCLUSION

Jordan Peele’s filmic sensibilities have remained the same throughout his career as a writer and director: a love of genre, stories that carry broader social commentary, a limited cast in limited locations, and a story told through the sympathetic eyes of a central protagonist.

What has changed is the scope of the stories he tells. Each new film he’s made has seen increasingly bigger set pieces, more complex action scenes and larger set builds which are captured by more expensive filmmaking techniques.

This increase in scope is what has driven each bump up in budget - all the way from his beginnings as a low budget horror filmmaker to directing a massive Hollywood blockbuster.

Why Top Gun: Maverick Action Scenes Feel Unbelievably Real

The runaway financial success of Top Gun: Maverick that makes it, at the time of this video, the sixth highest grossing movie in US box office history can be boiled down to numerous factors. This video will look at one of those factors: its aerial action scenes.

INTRODUCTION

The runaway financial success of Top Gun: Maverick that makes it, at the time of this video, the sixth highest grossing movie in US box office history - coming out ahead of even an Avengers movie - can be boiled down to numerous factors.

It was built on existing intellectual property and boosted by the success of the original Top Gun. It starred Tom Cruise. It pulled at the sentimental strings of a huge audience that missed the big Hollywood blockbusters of old, while still revitalising that kind of film with something fresh. It was directed with a deft handling of emotion. And - what we’ll talk about in this video - it was executed with amazingly filmed aerial action sequences that kept audiences right on the edge of their seats.

IN-CAMERA VS VFX

But what is it that differentiates these moments of action from many of the other blockbuster set pieces that we’ve become used to? I’d pinpoint it to an effective use of ‘in-camera’ photography. In other words, favouring real, practical effects over visual effects.

“I think when you see the film you really feel what it’s like to be a Top Gun pilot. You can’t fake that.” - Joseph Kosinski

Much of the appeal of what makes up a blockbuster comes from the sequences which feel ‘larger than life’ and offer a spectacle. Whether that means large choreographed dance routines, car chases, bank heists or displays of superpowers.

Every scene like this requires a filmmaking solution beyond the realms of just shooting two actors talking.

On the one end we have practical or in-camera effects. This is where real world filmmaking, engineering solutions and optical trickery are mixed - such as shooting miniatures or using forced perspective.

At the other end we have CGI, where computer software is used to manipulate and create those images.

Almost every big budget movie nowadays, including Top Gun: Maverick, uses a combination of both practical photography and computer-generated imagery. However some films, like Maverick, prioritise in-camera effects in order to achieve shots with a greater tie to reality.

“You can’t fake the G-forces, you can’t fake the vibrations, you can’t fake what it looks like to be in one of these fighter jets. We wanted to capture every bit of that and shooting it for real allowed us to do that.” - Joseph Kosinski

Once director Joseph Kosinski and cinematographer Claudio Miranda had the shooting script in their hands they had to start making decisions about how they would translate the words on the page into awe inspiring aerial action set pieces.

Shooting aerial sequences is a large practical challenge.

First, they broke the aerial shots that they needed into three types of shots: one, on the ground shots, two, air to air shots, and three, on board shots.

1 - ON THE GROUND

To execute the many aerial sequences in the movie they turned to David Nowell, a camera operator and specialist aerial director of photography who had worked on the original Top Gun film.

“If you analyse the first Top Gun about 75% of all the aerials we actually did from the mountain top because you can get stuff on a 1,000mm lens that you just can’t quite get when you’re filming air to air. And I brought that forward to Joe Kosinski, saying, ‘You have to do this on this movie. This is the difference it makes.’ And so, we did. We spent almost a week on the new Top Gun just on the mountain top getting all the different shots that they needed.” - David Nowell

Cinematographer Claudio Miranda selected the Sony Venice as the best camera for this shoot - for reasons we’ll get to later. This digital footage was warmed up a lot, given deep shadows and had artificial 35mm film grain added to it in the grade to give the footage a similar feeling to the original - with its warm, bronzed skin tones.

To further enhance the original Top Gun look, Miranda consulted with Jeffrey Kimball, the cinematographer on the 1986 film, who passed on information about the graduated filters that he shot with.

Grads, or graduated filters, are strongest at the top and fade towards the bottom, either softly or with a hard edge. A graduated ND darkens part of the frame without changing its colour, while a coloured grad also adds a tint. Usually grads are used to shoot landscapes or skies: when the denser part of the filter is placed over the sky it produces a more dramatic look, and a tinted one with a coloured grad.
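ND strength is usually quoted as optical density, where 0.3 is roughly one stop. This sketch models a hypothetical grad across the height of the frame, assuming a linear fade for the soft edge; real filters vary in how they transition, and the function names and the fade width are illustrative assumptions.

```python
import math


def density_to_stops(density: float) -> float:
    """An ND of optical density d passes 10**-d of the light; ND 0.3 is about 1 stop."""
    return density * math.log2(10)


def grad_density(y: float, max_density: float, transition: float,
                 soft: bool = True, band: float = 0.2) -> float:
    """Density of a hypothetical grad at height y (0 = top of frame, 1 = bottom).

    Above the transition the filter is at full strength; below it, clear.
    A soft edge fades linearly across `band` (a fraction of frame height)
    centred on the transition; a hard edge switches abruptly.
    """
    if not soft:
        return max_density if y < transition else 0.0
    start, end = transition - band / 2, transition + band / 2
    if y <= start:
        return max_density
    if y >= end:
        return 0.0
    return max_density * (end - y) / band


# An ND 0.6 soft grad with its transition on a horizon halfway down the frame
for y in (0.0, 0.45, 0.5, 0.55, 1.0):
    stops = density_to_stops(grad_density(y, max_density=0.6, transition=0.5))
    print(f"y={y:.2f}: {stops:.2f} stops darker")
```

The sky at the top loses about two stops while the foreground stays untouched, which is what lets a bright sky hold colour and drama in the same exposure.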

Capturing all the angles that they needed for these scenes required a massive camera package. Six cameras could be used for the on-board action, four cameras could be mounted to the plane's exterior at a time, the air-to-air shooting used another camera and a few cameras were needed for the ground to air unit.

Like the original, they decided to shoot on spherical lenses and crop to a 2.39:1 aspect ratio. Spherical lenses have better close focus abilities and are smaller than anamorphic lenses, which allowed them to be placed in tight plane interiors.
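Cropping spherical footage to 2.39:1 simply means keeping the full width and discarding the top and bottom of the frame. A sketch of that calculation; the 6048 x 4032 resolution is a hypothetical 3:2 frame for illustration, not the production's recording format, and the function name is made up.

```python
def centre_crop(width_px: int, height_px: int, aspect: float = 2.39) -> tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of a full-width, centred crop at `aspect`."""
    crop_h = round(width_px / aspect)
    if crop_h > height_px:
        raise ValueError("frame is too short for this aspect ratio at full width")
    top = (height_px - crop_h) // 2
    return 0, top, width_px, top + crop_h


# Hypothetical 3:2 recording resolution, for illustration only
box = centre_crop(6048, 4032)
print(box)  # (0, 750, 6048, 3281)
print(f"{(box[3] - box[1]) / 4032:.0%} of the frame height is used")
```

The trade-off is that a good share of the captured height is thrown away, which spherical shooters accept in exchange for smaller, closer-focusing lenses.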

To get shots of the planes from the ground, a camera unit was equipped with a Fujinon Premier 24-180mm and a 75-400mm zoom. They also carried two long Canon still lenses that were rehoused for cinema use: a 150-600mm zoom and a 1,000mm lens.

When this wasn’t long enough they used a doubler from IBE Optics. This 2x extender attaches to the back of the lens via a PL mount and doubles the focal length range. So a 75-400mm zoom effectively becomes a 150-800mm lens.
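A rear extender multiplies the focal length, but by the same factor it also multiplies the T-stop, so a 2x doubler costs two stops of light. A sketch of both effects; the T2.8 starting stop is an assumed example value and the function name is hypothetical.

```python
import math


def with_extender(zoom_mm: tuple[float, float], t_stop: float, factor: float = 2.0):
    """Return the new focal range, T-stop and light loss after adding a rear extender.

    The extender multiplies the focal lengths and the T-stop by `factor`.
    Exposure falls with the square of the f-number, so the loss in stops
    is 2 * log2(factor).
    """
    wide, tele = zoom_mm
    loss_stops = 2 * math.log2(factor)
    return (wide * factor, tele * factor), t_stop * factor, loss_stops


# 75-400mm zoom with a 2x doubler; T2.8 is an assumed starting stop
focal, t_stop, loss = with_extender((75, 400), t_stop=2.8)
print(f"{focal[0]:.0f}-{focal[1]:.0f}mm at T{t_stop:.1f}, {loss:.0f} stops darker")
```

In bright daylight exteriors that two stop loss is usually affordable, which makes a doubler a practical way to reach for distant planes.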

Tracking fast moving objects so far away is very difficult, so the operators ended up using modified rifle scopes mounted on top of the camera to help them sight the planes.

The ground-based unit also filmed F/A-18s that were digitally re-skinned to look like an F-14 Tomcat. This is a great example of the kind of intersection between practical photography and digital effects which I talked about earlier.

2 - AIR TO AIR

Although very useful, ground-based cameras are unable to physically move to track the aircraft beyond panning and tilting. For dynamic, in-the-air motion and a raised point of view, the camera team shot air to air footage.

This required shooting with a CineJet - an agile Aero L-39 Albatros jet that has a Shotover F1 stabilised head, which houses the camera, custom built onto the front of the nose.

While the camera is operated, an experienced pilot adjusts the plane’s position relative to the other planes being filmed.

Since the Shotover is primarily designed to be used from a slower moving helicopter, and on Maverick they were shooting a fast moving Boeing F/A-18F Super Hornet fighter jet, they needed to come up with a technical solution.

“The one big change for Top Gun is that the Shotover systems that we’ve used for years…was never fast enough to go any faster than what a helicopter would do. But then Shotover…they updated the motors that would take the high torque needed to pan and tilt while flying 350 knots, that’s close to 400 miles per hour.” - David Nowell

For certain sequences that required a shot looking back on aircraft, they used an Embraer Phenom 300 camera jet that had both front and back mounted Shotovers.

The Venice that was mounted on the Shotover was paired with a Fujinon zoom, either a 20-120mm or an 85-300mm. Some helicopter work was also done with the larger Shotover K1, which had an extended case that could house Fujinon’s larger 25-300mm zoom.

3 - ON BOARD

Arguably the most engaging and jaw dropping footage in the film comes from the cameras that are hard mounted onto the plane itself.

There are two ways that this kind of footage can be shot. The most common technique involves placing actors in a stationary car, spaceship, plane or whatever kind of moving vehicle it is, on a studio soundstage.

Outside the windows of said vehicle the technical crew will place a large bluescreen, greenscreen or nowadays, a section of LED wall. The actors then pretend the vehicle is moving, do their scene and the crew may give the vehicle a shake to simulate movement.

In post production this bluescreen outside the windows is replaced with either footage of the background space they want to put the vehicle in, such as highway footage, or with an artificial, computer generated background.

The two main reasons for shooting this way are that, one, it is usually cheaper and, two, it offers a far greater degree of control. For example, it allows the actors to easily repeat the scene, the director can monitor their live performances and talk to them between takes, the sound person can get clean dialogue and the DP can carefully light the scene so that it is exposed to their liking.

Instead of taking this more conventional approach, Top Gun’s creative team made the radical decision to shoot this footage practically - in real life.

To prepare, the actors underwent three months of training, designed by Tom Cruise, so that they could withstand the extreme forces they would experience during filming.

Along with the difficulties involved in the actors giving complex emotional performances while flying at extremely high speeds, rigging the on board cameras to capture these performances was no easy feat.

The main reason that Miranda went with the Sony Venice was its Rialto system. This effectively allows the camera to be broken in two: one small sliver that holds the sensor and takes the lens, and another that holds the rest of the camera body and the required battery power. The two units are tethered by a cable.

1st AC Dan Ming, along with a team of engineers, came up with a plan to mount six cameras inside the F-18.

They custom machined plates that could be screwed into the plane, and mounted the cameras to these. Three Venice bodies and a fourth Venice sensor block were mounted in front of the actors in the back seat of the jet. These were tethered to a body block and battery rack that they placed near the front seat where the real pilot was.

Two additional sensor blocks were also rigged on either side of the actor to get over the shoulder shots. Again, they were tethered to body blocks at the front of the plane.

As I mentioned, fitting that many cameras into such a tight space meant that the lenses needed to be spherical, have good close focus and be as low profile as possible. Miranda went with a combination of 10-15mm compact Voigtländer Heliar wide-angle prime lenses and Zeiss Loxia primes.

Earlier I mentioned that this method of hard mounting the cameras came with a lack of control. This is perhaps best seen by the fact that once the plane took off, not only were the actors responsible for their own performances but they even had to trigger the camera to roll and stop when they were up in the air.

“Ultimately when they’re up there it’s up to them to turn the camera on and play the scene. I mean, the biggest challenge is not being there to give feedback. So you’re putting a lot of responsibility and trust in our cast. So, that was a unique way of directing the film for those particular scenes but it’s the only way to capture what we were able to get.” - Joseph Kosinski

Filming in this way meant that they’d do a run, come back and sometimes find that parts of the footage weren’t usable because of the lighting, because an actor’s eyeline was in the wrong place, or even because an actor hadn’t properly triggered the camera to record.

However, the footage that did work looked incredible and gave a feeling of being in a real cockpit, complete with all the vibrations, natural variations in light, and realistic, adrenaline filled performances from the actors. These images wouldn’t have been the same had they shot these scenes in a studio.

Four cameras were also hard mounted directly onto the exterior of the jet. Again, they used the Rialto system with wide angle Voigtländer primes. Another advantage of the Venice is its wide selection of internal ND filters.

This meant that they didn’t need to attach a mattebox with external NDs to cut down the exposure. A mattebox would have made the camera’s profile too big for the interior shots, and would probably have been impossible to rig safely on the exterior cameras at the jet’s extreme speeds.
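ND filters are labelled by optical density, and each 0.3 of density cuts roughly one stop of light. The minimal Python sketch below shows that conversion. The densities it lists are generic examples in 0.3 steps. They are not a record of which ND positions were used on Maverick.

```python
import math

def nd_transmission(density: float) -> float:
    """Fraction of light an ND filter of the given optical density lets through."""
    return 10 ** (-density)

def nd_stops(density: float) -> float:
    """Exposure reduction in stops: log2(1 / transmission) = density * log2(10)."""
    return density * math.log2(10)

for d in (0.3, 0.6, 0.9, 1.2, 1.5, 1.8, 2.1, 2.4):
    print(f"ND {d:.1f}: {nd_transmission(d):.2%} transmission, {nd_stops(d):.2f} stops")
```

Because density is logarithmic, stacking densities simply adds their stops, which is why NDs are sold in 0.3 increments.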

CONCLUSION

Top Gun: Maverick brings us back to an era of filmmaking where real effects are used to tell stories and the CGI that is used is done subtly and largely goes unnoticed by the audience.

For years now, by and large, I’ve been underwhelmed watching most action in films. The overabundance of CGI effects triggers something in my brain that tells me that what I’m watching isn’t real, which makes the action feel less exciting.

By putting us in an environment where every manoeuvre is physical, real and visceral, the film makes the stakes real. This leads to a genuine emotional connection and immersion in the story.

There’s a reason why you often hear some auteurs sing the praises of in-camera effects and disparage the overuse of CGI. Maverick uses the best of both worlds. The crew executed most of the action with bold, practical photography, which was safe and innovative.

Subtle digital effects were then brought in later when necessary to make up for those shots which were practically impossible.

I can only hope that Hollywood executives take this away as one of the reasons for the film’s financial success and encourage these kinds of filmmaking decisions going forward. There’s always a time and a place for great VFX in cinema, but sometimes shooting things practically is the best way to go.

Cinematography Style: Matthew Libatique

In this edition of Cinematography Style, we’ll unpack Matthew Libatique’s cinematography.

INTRODUCTION

“There’s an abundance of ways to shoot a film. In this world because we have so many people who are trying to make films, being original is also really difficult. You really have to go with a kind of abandon when you’re trying to create something special.”

From gritty, low budget movies to the biggest blockbuster superhero flicks in the world, Matthew Libatique’s cinematography has covered them all. Directors are drawn to his appetite for creative risk taking and his bold, subjective, in your face close ups, combined with deep experience and on set knowledge that covers a broad array of technical shooting setups.

In this edition of Cinematography Style, we’ll unpack Matthew Libatique’s photography by unveiling some of his philosophical thoughts and insights about working as a DP, as well as breaking down some of the gear and technical setups he has used to achieve his vision.

BACKGROUND

“I started becoming interested in films because of the camera. In undergraduate school I saw Do The Right Thing. It was like a mind explosion of possibility. It was the first time I ever saw a film that made it feel like it was possible for a person like me to make films.”

Even though Libatique majored in sociology and communication during his undergraduate studies, he was still strongly drawn to the camera. This led him to enrol in an MFA in cinematography at the AFI. It was there that he met a director who would become one of his most important and long running collaborators: Darren Aronofsky.

He shot Aronofsky’s early short film Protozoa, and when it came to working on their debut feature film, Pi, Libatique got called up to shoot it.

“The director gives you a motivation, an idea, a concept. And then you can build off of that. And the more they give you the more you can do.”

After the success of Aronofsky’s early films, Libatique began working as a feature film DP with other A-list directors, like Spike Lee, Jon Favreau and Bradley Cooper.

PHILOSOPHY

“When I was becoming interested in filmmaking in undergrad I didn’t study film. It was in sociology and communications. The one camera they had was an Arri S and it had a variable speed motor on it. The variable speed motor was set to reverse. So when I got the footage back I had double exposed everything. And I looked at it and it was a complete and utter f— up on my part. But then I was sort of inspired by the mistake. I always look back on that moment and I’ve kinda made a career on those mistakes working out.”

I’d say that Libatique’s appetite for visual risk taking, including what may be seen as ‘mistakes’ or ‘happy accidents’, is a large part of what informs his photography.

What I mean by visual risk taking is that the films that he shoots often carry a visual language which doesn’t conform to what is seen as mainstream, Hollywood, cinematic conventions - such as steady, flowing camera moves, neutral colour palettes and more restrained contrast levels with highlights that don’t blow out and turn to pure white.

At times, his camera movement and lighting deliberately distort and challenge what is seen as a perfect, clean image, by finding beauty in imperfections.

For example, his debut film Pi was shot on black and white reversal film. This film has an exposure latitude that is far more limited than traditional colour negative film. Visually, this means there is only a narrow range between the brightest and darkest parts of the image: areas of overexposure quickly blow out, while shadowy areas of underexposure are quickly crushed to pure black.

This resulted in an extremely high contrast black and white film, the complete opposite of Hollywood’s more traditionally accepted colour images that have gently graduated areas of light and shadow.

Another example of visual risk taking is using body mount rigs on Aronofsky movies like Requiem For A Dream where he strapped the camera directly onto actors for a highly subjective, actor focused point of view.

Even in his recent, big budget work on a superhero movie like Venom, he often directed light straight into anamorphic lenses, deliberately producing excessive horizontal flares that dirtied up the image.

Often these stylistic ideas will come from the director, especially when working with a director who is more hands on about the cinematography, like Aronofsky. But other times, visual ideas evolve from a combination of discussions and real world tests prior to shooting.

When prepping for A Star Is Born, Libatique brought a camera to director and actor Bradley Cooper’s house to shoot some camera tests with him while Cooper was working on the music. A lot of ideas came out of these tests that informed the language of the film. This included a red light that Bradley Cooper had in his kitchen, which inspired the use of magenta stage lighting for many of the performances in the film.

A final stylistic philosophy which I’d attribute to Libatique is his continual awareness of the point of view of the camera and whether the placement of the camera takes on a subjective or an objective perspective.

In many of his films, particularly in his work with Aronofsky, he’s known for using a highly subjective camera that is one with the subject or character of the film. He does this by shooting them almost front on, in tight close ups that isolate the character in the frame.

This is also paired with a handheld camera that he operates himself. Moving with the characters reactively, as if tethered to them, makes the shots even more character focused and subjective.

This isn’t to say that he always does this. He has shot some other stories in a wider, more detached, objective style. But whatever the movie, he’s always acutely aware of where he places the camera and the effect that it has on the audience.

GEAR

Earlier I mentioned that he shot Pi on black and white reversal film, 16mm Eastman Tri-X 200 and Plus-X 50 to be precise. Unlike modern digital cinema cameras that have something like 17 stops of dynamic range, the reversal film he shot on only had about 3 stops of range between the darkest shadows and brightest highlights.

This required his exposure metering to be very precise. If he let the highlights sit 4 stops brighter than the shadows, they would blow out to white and lose all information. One way he made his exposure more precise was with reflective metering.
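Each stop is a doubling of light, so the gap between 3 and 17 stops is bigger than it sounds. A range of n stops means a brightness ratio of 2 to the power of n. The small Python sketch below uses the round figures from the text: about 3 stops for the reversal stock and about 17 for a modern digital camera. The scene values in the last line are hypothetical.

```python
import math

def contrast_ratio(stops: float) -> float:
    """Brightness ratio spanned by a given number of stops (each stop doubles the light)."""
    return 2 ** stops

def scene_range_stops(shadow: float, highlight: float) -> float:
    """Stops between the darkest and brightest parts of a scene (any consistent luminance unit)."""
    return math.log2(highlight / shadow)

def fits_latitude(shadow: float, highlight: float, latitude_stops: float) -> bool:
    """True if the scene's brightness range fits inside the medium's usable range."""
    return scene_range_stops(shadow, highlight) <= latitude_stops

print(contrast_ratio(3))   # 8       -> roughly 8:1 for the reversal stock
print(contrast_ratio(17))  # 131072  -> roughly 131,072:1 for a modern digital camera
# Hypothetical scene: highlights 16x brighter than the shadows, i.e. 4 stops.
print(fits_latitude(1.0, 16.0, latitude_stops=3))  # False: the highlights blow out
```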

“The thing that has really stuck with me throughout my career is the impact of reflective lighting.”

There are two approaches to metering, or measuring how much light there is. One is called incident metering. This is where the light meter is placed at the subject, between it and the light source (such as in front of an actor’s face), facing the light, to determine how much light is directly hitting them.

Another way to meter light, which Libatique uses, is reflective metering. Instead of pointing the meter towards the light, he points it at the subject from the direction of the camera. This way the meter measures the light that hits the subject and bounces back off it - hence reflective metering.

“I’ve been using a reflective meter my entire career until this digital revolution. And even so I use a waveform that gives me a reflective reading of somebody’s skin tone because that’s the only way that I know how to expose anything.”
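Behind a reflected reading is the standard reflected-light exposure equation, N²/t = L·S/K. Here L is the metered luminance in cd/m², S is the ISO speed, t is the exposure time and K is the meter’s calibration constant. The minimal Python sketch below solves that equation for the aperture N. It assumes K = 12.5 (a common manufacturer value), a 180° shutter (exposure time 1/(2 × fps)) and a made-up luminance reading. It also ignores the difference between f-stops and T-stops. A reflective meter always recommends the stop that would render whatever it reads as middle grey. That is why a DP reading skin tone still has to interpret the number rather than simply follow it.

```python
import math

def reflected_stop(luminance_cd_m2: float, iso: float, fps: float = 24.0,
                   shutter_angle: float = 180.0, k: float = 12.5) -> float:
    """Aperture a reflected-light meter would suggest for the metered surface.

    Solves N^2 / t = L * S / K for N. The result renders the metered surface
    as middle grey. K = 12.5 is an assumed calibration constant; real meters
    vary slightly, and lens transmission losses are ignored.
    """
    exposure_time = (shutter_angle / 360.0) / fps  # 180 degrees at 24 fps -> 1/48 s
    return math.sqrt(luminance_cd_m2 * iso * exposure_time / k)

# Hypothetical reading: a face measuring 50 cd/m^2, rated at 800 ISO, 24 fps, 180-degree shutter.
print(round(reflected_stop(50, 800), 1))  # 8.2, i.e. a stop of roughly f/8
```

Quadrupling the luminance doubles the recommended f-number, which is the same two-stop change the meter would report.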

He mixes up his choice of format, camera and lenses a lot depending on the story and practical needs. For example, he shot some of Aronofsky’s films on 16mm, using Fuji Eterna stock on some and Kodak Vision stock on others.

Much of the rest of his work prior to digital cinematography taking over was shot on 35mm - again alternating between Fujifilm and Kodak stocks for different projects.

Since digital has taken over, he mainly uses different versions of the Arri Alexa, especially the Alexa Mini, but does occasionally use Red cameras.

He even famously used a Canon 7D DSLR with a 24mm L series lens to shoot the subway scenes in Black Swan, which he shot at 1,600 ISO at a deep stop of T8 ½. He did it in a documentary style, even pulling his own focus on the barrel of the lens. His colourist Charlie Hertzfeld later manipulated the 7D footage, especially the highlights, until it could be cut with the rest of the grainy 16mm footage.

His selection of lenses is as varied as his selection of cameras. He switches between using spherical and anamorphic lenses. Some examples of lenses he’s used include Panavision Ultra Speeds, Cooke Anamorphics, Zeiss Ultra Primes, Panavision Primos and Cooke S4s.

On A Star Is Born, he carried two different anamorphic lens sets - the more modern, cleaner Cooke Anamorphics, and the super vintage Kowas - and switched between them depending on the feeling he wanted.

He used the Kowas, with their excessive flaring, hazing and optical imperfections, for more subjective close up moments on stage. Then for the more objective off-stage work he switched to the cleaner Cookes.

Overall, the lighting in his films tends to gravitate towards the naturalistic side. But, within that, he introduces subtle changes depending on the nature and tone of the story.

For the more comedic Ruby Sparks, a lot of his lighting, although naturalistic, was very soft and diffused on the actors’ faces. Straight Outta Compton, which tips more into a tense, dramatic tone, had harder shadows, less diffusion and a lower overall exposure, while still feeling naturalistic.

So, while his lighting is always motivated by reality, its texture, quality, direction and colour change depending on how he wants the image to feel.

Since the rise of LED lighting, he often uses fixtures like LiteGear LiteMats, Astera Tubes and of course Arri Skypanels. When he can, he likes rigging them to a board so that he can precisely set levels and sometimes even make subtle changes as the camera roams around in a space.

Although he has used every kind of rig to move the camera, from a MOVI to a Steadicam to a dolly, he is partial to operating the camera handheld on the shoulder. I think in some contexts this can be seen as one of those creative risks that we talked about earlier.

For example, even on the large budget, traditional blockbuster - Iron Man - which you would expect to only have perfectly smooth dolly, crane and Steadicam motion - he threw the camera on his shoulder and gave us some of those on the ground, handheld close ups which he does so well.

CONCLUSION

Although he uses a lot of different tools to capture his images, he doesn’t do so haphazardly. Being a good cinematographer is more than just knowing every piece of gear available. It’s about knowing how you can use that gear to produce a tangible effect.

Sometimes that effect should be a bit more subtle, but certain stories call for boldness.

His images may take large creative risks that go against conventions and expectations, but those risks are only taken when they are justified by the story.

5 Reasons Why Zoom Lenses Are Better Than Primes

In this video let’s flip things in favour of our variable focal length friends by unpacking five reasons why zoom lenses are better than primes.

INTRODUCTION

As we saw in a previous video, there are many reasons why in some photographic situations prime lenses are a better choice than zoom lenses. The fixed focal length, or magnification, of prime lenses provides a more considered perspective when choosing a focal length, better overall optical quality, a larger aperture, a smaller size and better close focusing capabilities.

In this video let’s flip things in favour of our variable focal length friends by unpacking five reasons why zoom lenses are better than primes.

1 - ZOOM MOVEMENT

The first reason for choosing to use a zoom lens goes without saying: it allows you to do a zoom move in a shot. Most shots in cinema and other kinds of film content are shot at a fixed level of magnification and do not zoom during the shot.

I think this is in part due to the traditional language of cinema built by a legacy of many older films which were photographed with prime lenses, before usable cinema zooms were widely manufactured and prime lenses were the de facto choice.

However, during the 1970s and 1980s, using in-camera zooms to push into a shot or pull out wider without moving the camera became more popular amongst filmmakers.

There are many stylistic motivations behind using zoom movement. It can be used to slowly pull out and reveal more information in a shot until we see the full scope of the landscape. It can be used as a slightly kitsch, crash zoom - where the camera rapidly punches in to reveal a character, to emphasise a certain line, or land a comedic punchline.

Because of their flexibility and ease of use, which we’ll come to later, zooms have also been widely used when shooting documentaries - particularly fly on the wall type doccies. In some films this type of zoom movement is extrapolated from these documentary conventions in order to lend a visual style of realism associated with the documentary look, or even to mock this look for comedic emphasis.

The list of reasons to zoom within a shot goes on, and each has a different stylistic or emotional impact depending on the context in which it is used. It should be noted, though, that most filmmakers are careful not to overuse zooms, as they can easily become tired, distracting and cliched, unless they form part of an overall considered visual style.

2 - PRECISE FRAMING

Of course pushing in with a lens requires a zoom, but what about those films that don’t use any in shot zooms but still decide to shoot on zoom lenses?

Another reason cinematographers may use a zoom is that it makes it easy to precisely frame a shot.

When you shoot with a prime lens and want to change the width of the frame, you need to physically move the camera. This is easy when you are shooting handheld with a little mirrorless camera.

But when you are using a hefty cinema rig, on a head and a set of legs that is so heavy that it requires a grip team each time you move and level the camera, using zooms becomes more appealing.

With primes you may need to slightly reposition a frame by moving the camera forward six inches, realise this is too far, and then have to move the camera back three inches until that light stand is just out of frame. With a zoom lens you can simply change the focal length from 50mm to 45mm without moving the camera or tripod.
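To put a number on the 50mm to 45mm example, here is a minimal Python sketch built on a simple pinhole model. The 3 m subject distance and the 24.9mm sensor width (roughly Super 35) are assumptions chosen for illustration. It works out how far a 50mm prime would have to move back to match the frame width a zoom gets at 45mm.

```python
SENSOR_WIDTH_MM = 24.9  # assumption: roughly a Super 35 sensor width

def frame_width_m(distance_m: float, focal_mm: float,
                  sensor_width_mm: float = SENSOR_WIDTH_MM) -> float:
    """Approximate frame width (metres) at the subject plane, using a pinhole model."""
    return distance_m * sensor_width_mm / focal_mm

def prime_move_back_m(distance_m: float, prime_mm: float, zoom_mm: float) -> float:
    """How far a prime must move back to match the subject-plane width of a wider zoom setting."""
    matching_distance = distance_m * prime_mm / zoom_mm
    return matching_distance - distance_m

# Hypothetical subject 3 m from the camera: going from 50mm to 45mm on a zoom...
move = prime_move_back_m(3.0, prime_mm=50, zoom_mm=45)
print(f"...is equivalent to moving a 50mm prime back about {move:.2f} m")
```

The two approaches only match at the subject plane. Zooming keeps the camera’s perspective fixed, while physically moving it changes how large the background appears relative to the subject.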

A great example of this happens on most car shoots. I’ve worked as a camera assistant on loads of car commercials and about 99% of the time when using a Russian Arm to shoot moving vehicles, DPs choose a zoom lens over a prime lens.

It’s far easier and more practical to use a wireless motor to adjust the zoom on the barrel of the lens to find the correct frame from inside the Russian Arm vehicle, than it is to get the driver of the vehicle to keep repositioning the car a couple of metres on every run until the frame is perfect.

It is also easier to find the correct camera position without needing to move it when using wider primes: either with a pentafinder, a viewfinder app, or just based on the experience of the DP. But when you use longer focal lengths, like a 135mm or 180mm prime lens, the narrow field of view and lens compression make it much more difficult to find the correct frame without needing to move the camera.
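This small Python sketch shows why long lenses are harder to place without looking through them. It compares horizontal angle of view across focal lengths, assuming a sensor about 24.9mm wide (roughly Super 35) and ignoring focus breathing. It also shows what share of the frame a one-degree error in aim would shift. The narrower the angle of view, the bigger that share becomes.

```python
import math

SENSOR_WIDTH_MM = 24.9  # assumption: roughly a Super 35 sensor width

def horizontal_fov_deg(focal_mm: float, sensor_width_mm: float = SENSOR_WIDTH_MM) -> float:
    """Horizontal angle of view (degrees) of a rectilinear lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_mm)))

def frame_shift_per_degree(focal_mm: float, sensor_width_mm: float = SENSOR_WIDTH_MM) -> float:
    """Fraction of the frame width the image centre moves for a 1-degree aiming error."""
    return focal_mm * math.tan(math.radians(1)) / sensor_width_mm

for f in (24, 35, 50, 85, 135, 180):
    print(f"{f:>3}mm: {horizontal_fov_deg(f):5.1f} deg wide, "
          f"1 deg off = {frame_shift_per_degree(f):.0%} of the frame")
```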

There are also fewer prime focal length options at the longer end, which we’ll talk about later. Therefore for telephoto shots, zooms are regularly used for their ability to punch in or out until the correct frame is found.

3 - FASTER SETUPS

With more precise framing comes a faster setup time. On a film set, time very much equals money. The quicker you can set up and shoot each shot, the less you have to pay in crew overtime, gear rental days and location fees.

When you’re working on a tight budget, without the possibility of extended overtime or extra pick up days, taking longer to set up shots means that the director can film fewer takes, with less time to craft the actors’ performances or set up choreographed action.

Using zoom lenses speeds up production in a few ways. For one, if you shoot everything with a single zoom lens, it means less time spent changing lenses, swapping out matte boxes and recalibrating focus motors.

As we mentioned previously, it also means that grip teams don’t need to reposition heavy and time consuming rigs, like laying dolly tracks. If the track was laid a little bit too far forward, the operator can just zoom a little bit wider on the lens to find the frame, rather than starting over from square one and re-laying the tracks.

Another practical example is when using a crane or a Technocrane. If you use a 35mm prime lens on the camera, balance it on a remote head, perfectly position the arm and then realise that the lens is not wide enough and you need a 24mm focal length instead, the grip team needs to bring down the arm, the camera team needs to switch out the lens, the Libra head technician needs to rebalance the head with the weight of the new lens, and finally the grip team then brings the crane back into the correct position. All this could take 10 minutes or more.

If instead the DP used a zoom lens with a wireless lens motor on the zoom, this change would take less than 10 seconds.

10 minutes may not sound like a lot, but if this keeps happening throughout the day it can quickly add up to an hour or two of wasted shooting time. That is expensive, means less footage gets shot and gives the director fewer precious takes to work with in the edit.
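The arithmetic behind “an hour or two” is straightforward. Below is a minimal Python sketch that uses the text’s estimates: roughly 10 minutes per crane lens change and under 10 seconds per zoom adjustment. The number of changes per day is a hypothetical input.

```python
def hours_lost(changes_per_day: int, minutes_per_change: float) -> float:
    """Total shooting time (hours) spent on a repeated setup change during one day."""
    return changes_per_day * minutes_per_change / 60.0

PRIME_SWAP_MIN = 10.0        # crane down, swap lens, rebalance head, crane back up (text's estimate)
ZOOM_ADJUST_MIN = 10.0 / 60  # wireless zoom adjustment, under 10 seconds (text's estimate)

for changes in (6, 12):  # hypothetical numbers of reframes per day
    saved = hours_lost(changes, PRIME_SWAP_MIN) - hours_lost(changes, ZOOM_ADJUST_MIN)
    print(f"{changes} changes: primes cost {hours_lost(changes, PRIME_SWAP_MIN):.1f} h, "
          f"a zoom saves about {saved:.1f} h")
```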

4 - FOCAL LENGTH OPTIONS

A prime lens set usually covers a fair number of different focal lengths at the wide end, but, when it comes to telephoto options beyond about 100mm, the selection is usually very limited.

For this reason, DPs who like shooting with long focal lengths that compress the background often hire a zoom. For example, the Arri Alura can punch in all the way to 250mm, while the longest focal length in a set of modern cinema primes such as the Arri Master Primes is 150mm.

So for cinematographers who want to use long, telephoto lenses, zooms are usually a better option.

Many zooms also offer a greater overall range of focal lengths. For example, an Angenieux 12:1 zoom covers fields of view all the way from a wide 24mm up to a compressed 290mm.
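A zoom’s ratio is just its longest focal length divided by its shortest, and that is where the “12:1” label on a 24-290mm zoom comes from. Here is a one-line Python check:

```python
def zoom_ratio(short_mm: float, long_mm: float) -> float:
    """Zoom ratio: longest focal length divided by shortest."""
    return long_mm / short_mm

print(f"{zoom_ratio(24, 290):.1f}:1")  # 12.1:1, which is sold as a 12:1 zoom
```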

For shoots that are in remote areas or in locations which cannot be accessed by a camera truck, carrying around a full set of spherical primes in three or four different lens cases is far more logistically challenging for the crew than just putting a single zoom lens on the camera and walking it in to set.

This makes zooms far more flexible and practical when compared to primes, especially sets of older vintage prime lenses, such as the Zeiss Super Speeds that only come in 6 focal length options from 18mm to 85mm.

5 - BUDGET

The final reason may seem a little counterintuitive because when you compare the price of a single prime lens with that of a single zoom lens, the zoom lens will almost always be more expensive.

However, prime lenses are almost never bought or rented as individual units. They come in sets: such as a set of 6 lenses, or a set of 16 lenses.

When the rental price or buying price of a full set of primes is tallied up it is almost always more than that of a comparable, single zoom lens that covers the same amount of focal lengths.
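The comparison comes down to adding up the whole prime set against a single zoom over the length of the shoot. This is a minimal Python sketch of that tally. The function and its inputs are hypothetical, so plug in the day rates your rental house actually quotes.

```python
from typing import Sequence

def compare_rental_cost(prime_day_rates: Sequence[float], zoom_day_rate: float,
                        days: int = 1) -> dict:
    """Total rental cost of a prime set versus a single zoom, and which is cheaper."""
    primes_total = sum(prime_day_rates) * days
    zoom_total = zoom_day_rate * days
    return {
        "primes": primes_total,
        "zoom": zoom_total,
        "cheaper": "zoom" if zoom_total < primes_total else "primes",
    }
```

Passing in only the two or three primes a rental house might break out of a set can flip the result. That is exactly the trade-off between a limited range of focal lengths and one zoom that covers them all.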

Therefore, when the budget of a shoot is a bit tight, it may come down to either pleading with the rental house to break up a lens set into a very small selection of two or three primes that cover a limited range of focal lengths, or hiring a single zoom that you can use to cover every field of view that is required for the shoot.

In this regard, a zoom lens is far more realistic and practical.

How We Made A New Yorker Short Documentary: With Jessie Zinn

Highlights from my chat with director Jessie Zinn about the film Drummies. We discuss the process of making a short documentary - from coming up with the initial concept, hiring gear, cutting it together, to finally distributing the finished film.

INTRODUCTION

“That gimbal was terrible. And it was so heavy.” “Didn’t it overheat a couple of times? Cause it was also in the middle of summer and we were shooting in the northern suburbs where it gets up to like 40 degrees which is like in Fahrenheit in the 100s. And we were, like, sweating, and the gimbal was making a noise.”

If you’re watching this you may know me as the disembodied voice behind this channel, who edits these video essays made up of diagrams, shoddy photoshop work and footage from contemporary cinema. But what you may not know is that I also work as a cinematographer in the real world.

So, I thought I’d mix up the format a bit and chat to a real world director, Jessie Zinn, who I’ve shot some films for. One of those films that we shot during the height of the COVID lockdown, a short documentary called Drummies, was recently acquired by the New Yorker and is now available to view for free on their site - which I’ll link for you to check out.

Our chat will break down the process of making that short documentary - from coming up with the initial concept, hiring gear, cutting it together, to finally selling the finished film.

Also, if you want to see the full, extended podcast with Jessie, and also donate to keeping the channel going, it’ll be available on the Master tier on Patreon.

CONCEPT

Before the cameras and the lights and the calling of action, every film begins as an idea.

“Ideas come through very unorthodox channels and different backends and ways of finding out about subjects and topics. I think it’s definitely worth noting that I always make films or I’m always interested in making films for me. And that’s not to say that I don’t have an audience in mind because of course I’m always very aware of who this is for ultimately; who is going to watch it. But I always approach a subject and have interest in making a film based on a subject that I’m just personally really, really interested in or feel a sense of passion towards in some sort of capacity.”

In the case of this film it was born out of seeing a photographic series by Alice Mann on the drummies or drum majorettes of Cape Town.

“Drummies is about a team of drum majorettes in Cape Town. It’s sort of like an intersection between cheerleading and marching band processions. It’s had this really interesting political history in South Africa because it was also one of the first racially integrated sports in South Africa during apartheid. And post-apartheid it’s become almost this underground cult world amongst young girls in schools. In particular in public schools. It’s both a sport where it brings a sense of community and family to them but also provides potential possibilities for upward social mobility.”

PRE-PRODUCTION

With a concept in place, Jessie then went about identifying and getting access to the potential characters that would be in the film and who we would focus on during the shooting process.

“So I actually cast for Drummies and I did that remotely because I was still in the States. So I asked their coach to send through WhatsApp videos of the girls - basically auditioning.”

From there she cut down her ‘cast’ to four or five characters who we would do the majority of our filming with. In the final edit this was later cut down to three characters.

Before bringing in any cameras, she did audio only interviews of her cast using a Zoom recorder and a lapel mic.

“People have their guard up when you first meet them. But in others, often with children, the first encounter is often sort of the least filtered. And so, I knew beforehand that there would probably be some audio soundbites and material that I could gather from those pre-interviews which maybe wouldn’t have been possible with having a whole camera setup around. Actually in the final film some of that audio and voice over is from those pre-interviews because some of it was such great material.”

When Jessie contacted me to shoot the project she passed on snippets of this material to me, so that when we met to chat about the film I already had an idea of the kind of characters that we would be photographing.

She also put together a script and a treatment which I could read through. As a cinematographer, it’s always fantastic to get this kind of thorough vision up front, as it facilitates the discussions we have about finding a look, or overarching style, for the film.

“It’s always deliberately decided beforehand, at least with the short docs that we work on. You know, I’ll sit down with you often at a coffee shop somewhere and I’ll be like, ‘Here’s a couple of reference films and reference images.’ Then you’ll look at them and then you’ll say, ‘OK. I think this is what we can do based on these references and based on the real people.’”

In the real world, our characters were sitting around during this hot summer vacation, unable to do much because of the COVID restrictions. This led to us discussing the idea that the footage should feel dreamy, as if they were suspended in time, which is also a line that came from one of the interviews.

To visually represent this feeling we decided to shoot a lot of the non-dialogue scenes in slow motion with a heavy, worn, often malfunctioning Ronin gimbal that we managed to borrow from another Cape Town documentary filmmaker.

“This was something that we discussed beforehand. That we wanted there to be a dreamy aesthetic and in terms of the actual movement using a handheld, rough aesthetic wouldn’t have achieved that. You definitely don’t want to limit the dreams that you have in terms of aesthetics for your film but you also do need to be very practical about it and I think that’s what we often get right. We sit down and say, ‘these are the things we would like’ and then ‘this is the version of these things that is actually achievable.’”

PRODUCTION

“It’s all about prep - literally. You know you’ve done your job well when you get onto set you can stand back and do very little. If you’re having to do a lot on set then you know you haven’t done a great job - basically.”

With all of Jessie’s prep, creative vision and our discussions about the film’s look coming together, I then, as the cinematographer, need to come up with a list of gear that we’ll use to bring these ideas to the screen.

When it comes to this, one of the biggest limitations is budget. Doccies are generally made with pretty limited funding, much of which is saved for the post production finishing of the film. So for these kinds of projects I usually put together a gear list with two options: one, a best-case scenario with the full selection of gear I’d like to rent, and two, a more stripped-down list which is a bit lower cost.

A little bit of back and forth with the gear house may ensue until we come up with the best gear package that meets our budget.

For this film, that meant shooting on a Sony FS7 with my four Nikon AI-S prime lenses: a 24mm, 35mm, 50mm and 85mm, although most of the film was shot on the two wider lenses. To add to the dreamy look that Jessie and I talked about, I shot everything with a ¼ Black Pro-Mist filter, which gave the image a nice, diffused feeling.

As I mentioned, we managed to borrow a Ronin for free for the shoot. It was the old, original Ronin and a bit tired, but we made it work. I also used a Sachtler Ace tripod, which some may see as too lightweight for a camera like the FS7.

But it’s small, easy to carry and makes finding a frame and levelling it off that much quicker. And in documentaries, speed is often more important than the fractionally smoother pans and tilts you get from a larger tripod head.

Although it hopefully does not look like it, every single interior shot in the film was lit with a combination of artificial film light sources and my most important tool: negative fill.

The lighting package I carried around consisted of a budget-friendly Dracast bi-colour LED fresnel, two 8x8 blackout textiles and a couple of C-stands to rig them with. Rigging blackout to C-stands or hastily tying it to window frames is not how it should be done, but since I was working alone with very limited time to set up each scene, I had to make do.

When you’re working with a limited budget, the easiest and most cost effective way to control light isn’t by adding light, but by removing it.

As an example, let’s break down the changing room scene in the film.

“With that scene which would lead into the final performance because there was this theme throughout the film of being able to achieve dreams but also being held back from achieving those dreams. Because of COVID they weren’t actually able to perform and compete in all of these games and so, the changing room is of course tethered to reality. They have to get changed before any sort of performance. But they also weren’t performing at the time because of COVID restrictions and so I thought that that sort of worked.”

For that reason, I wanted to push this sequence visually as far as we could into a dreamy state, while still maintaining a link to the real world. We took the orange and yellow palette of the changing room that we were presented with, pushed the warmth of the lighting to the max and pumped a bunch of smoke into the room to create an extra layer of diffusion.

With the help of an assistant, I blacked out all the windows and doors that were letting in sunlight, except for a single window behind the characters. Outside this window I put up our LED fresnel, warmed it all the way up and, I think, even added a ½ CTO gel so that the colour temperature of the light went from tungsten to orange.
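Gels like CTO are usually rated by a mired shift rather than by a fixed kelvin change, where mired = 1,000,000 / kelvin. The sketch below shows how a warming gel stacks on top of a light that is already at tungsten. It assumes the fresnel’s warmest setting is 3200 K and that a half CTO adds roughly +100 mired; both are rounded assumptions, and real gel ratings vary by manufacturer.

```python
# Sketch: estimate a light's colour temperature after adding a warming gel.
# Gels are commonly rated by "mired shift"; mired = 1,000,000 / kelvin.
# ASSUMPTIONS: the LED's warmest setting is 3200 K and a half CTO adds about
# +100 mired (a rounded figure; check the gel manufacturer's published rating).


def kelvin_to_mired(kelvin: float) -> float:
    """Convert a colour temperature in kelvin to mireds (micro reciprocal degrees)."""
    return 1_000_000 / kelvin


def mired_to_kelvin(mired: float) -> float:
    """Convert mireds back to kelvin."""
    return 1_000_000 / mired


def apply_gel(source_kelvin: float, mired_shift: float) -> float:
    """Return the approximate colour temperature after a gel with the given mired shift."""
    return mired_to_kelvin(kelvin_to_mired(source_kelvin) + mired_shift)


if __name__ == "__main__":
    LED_WARMEST_K = 3200   # assumed tungsten setting on the bi-colour fresnel
    HALF_CTO_MIRED = 100   # assumed, rounded half CTO rating
    result = apply_gel(LED_WARMEST_K, HALF_CTO_MIRED)
    print(f"{LED_WARMEST_K} K + half CTO = about {result:.0f} K")
```

Under these assumptions the light lands at roughly 2,400 K, well past tungsten and into orange. Working in mireds is the usual choice because the same gel produces a much larger kelvin change on a daylight source than on a tungsten one.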

Again, most of the heavy lifting was done by removing light, then carefully placing a single backlight to create contrast and a more amplified visual world.

During the shoot, a technique that Jessie and I often used was to cordon off and light a specific space, almost like a set, then place the characters within that space and let them converse or act as they naturally would. That way you maintain naturalistic conversation and action, but can also better sculpt the cinematography into the form that best suits the film.

POST-PRODUCTION

Once production wrapped, Jessie went about editing the film herself.

“I also often edit my films and so that is a big part of crafting a documentary. You’re not finding the story in the edit but you’re definitely chipping away at the basic model that you’ve planned. So when I’m on set I’m also shooting or directing with the edit in mind.”

“Drummies was a good exercise in learning how to trust my instinct. Basically the very first assembly that I laid down on the timeline which I had to deliver to the programme I think it was like three days after we’d shot the film. Which is insane, again. And so it was like a fever dream of staying up into the night to get this assembly done. And after that obviously I did many different versions and different edits where things changed and the structure changed. But when I looked at the final film it was actually almost identical in terms of structure to the very first assembly that I’d put down, like months ago, which also was the same as the script and the treatment.”

So again we come back to the idea of prep, and how valuable it is for a director to go into the shoot with a refined vision for the film, even in a more unpredictable medium like documentary.

After the music composition, sound mix and final grade were completed (the grade was referenced off another vibrant, colourful and slightly dreamy film, The Florida Project), Jessie was left with a finished film. But what comes next, once the film is done?

“A couple of years ago people would say that the be all and end all are film festivals and that determines the success of your film. But I don’t think that’s the case anymore because there are incredible online avenues for streaming services where you can put your film out there and it can get tons of views and potentially gain an audience that is much wider and larger than a film festival.”

“So, Drummies did go to a few festivals but it was valuable because that’s how the film got distribution in the end. Both POV and The New Yorker showed interest because they’d seen it at a festival called Aspen Shorts Fest and they both reached out to me by email and said we’re interested in seeing the film, we’d like to have a look. And both of them basically came to me with offers within a few days and so that was the first film where I’d received pretty standard almost classical distribution interest in the film as far as broadcasters and sort of news channels are concerned. Whereas my two previous films got Vimeo Staff Picks which is, I’d say, a little bit more unorthodox and more current than those avenues.”

“People often think that if your film doesn’t get into an A-list festival then it’s the end of your film. Which is just so not true. Because the festivals that Drummies played at are, I would say, probably B-type festivals. And those festivals got way more distributors interested than some of the A-list festivals that I’d heard about. And so, never underestimate the space that your film is screening in. That’s a valuable lesson that I learnt.”

5 Reasons Why Prime Lenses Are Better Than Zooms

As is the case with all film gear, there are a number of reasons for and against choosing prime lenses over zoom lenses. In this video I’ll go over five reasons that make primes superior to zooms, and follow it up with a later video from the other side of the argument about why zoom lenses are better than primes.

INTRODUCTION

If you’re into cinematography, photography or capturing images at all you’re probably aware that there are two types of camera lenses - prime lenses and zoom lenses.

Primes have a fixed focal length, which is measured in millimetres. This means that when you put one on a camera, its angle of view (how wide it sees, or how much it magnifies the image) is fixed and cannot be changed.
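For a given sensor, each focal length corresponds to one angle of view, which for a rectilinear lens focused at infinity is 2 × atan(sensor width / (2 × focal length)). The sketch below assumes a sensor width of 24.9 mm, roughly Super 35; that figure is an assumption, so swap in your own camera’s sensor width.

```python
import math

# Sketch: horizontal angle of view for a rectilinear lens focused at infinity.
# ASSUMPTION: a 24.9 mm wide sensor (roughly Super 35); change it for your camera.

SENSOR_WIDTH_MM = 24.9


def angle_of_view_deg(focal_length_mm: float,
                      sensor_width_mm: float = SENSOR_WIDTH_MM) -> float:
    """Return the horizontal angle of view in degrees: 2 * atan(w / (2 * f))."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


if __name__ == "__main__":
    # A common set of prime focal lengths.
    for focal in (24, 35, 50, 85):
        print(f"{focal}mm -> {angle_of_view_deg(focal):.1f} degrees")
```

With this sensor width, the angle narrows from about 55 degrees at 24mm to about 17 degrees at 85mm. A prime locks you to one of these values, while a zoom lets you slide anywhere within its range.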

Zooms have a variable range of focal lengths. By turning the zoom ring on the lens barrel you can change how wide the image is, in some cases all the way from a very wide angle to a close-up telephoto shot.

As is the case with all film gear, there are a number of reasons for and against using prime lenses. In this video I’ll go over five reasons that make primes superior to zooms - and follow it up with a later video from the other side of the argument about why zoom lenses are better than primes. So if you like this content, consider hitting that subscribe button so that you can view the follow up video. Now, let’s get into it.

1 - CONSIDERED PERSPECTIVE

We can think of the width of a frame, or a focal length, as offering a perspective on the world.

This close-up, shot with a wide focal length, shows a lot of background and places us, the audience, within the same world as the character. This close-up, shot with a longer focal length, isolates the character more from the background, blurs it, and compresses, rather than distorts, the features of their face.

The great thing about a prime lens’ fixed focal length is that it also fixes the perspective, or feeling, of an image. When you choose which prime to put on the camera, you are therefore forced to make a decision about perspective.

This isn’t to say that you can’t do the same with a zoom, but with a variable focal length lens it’s far easier to just plonk the camera down at a random distance from the subject and then zoom in or out until you get the shot size you want.

If you’re using a prime, you need to first decide on the focal length you want and then physically move the camera into the correct position. As they say in photography, your legs become the zoom. This is especially useful as a teaching device for those learning about lens selection and camera placement.
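How far your legs have to carry you can be estimated with the simple pinhole approximation: to make a subject of width W fill a sensor of width w with a lens of focal length f, the camera sits at roughly D = f × W / w (reasonable when D is much larger than f). The sketch assumes a 24.9 mm sensor width and a hypothetical 0.6 m wide close-up framing; both values are illustrative assumptions.

```python
# Sketch: camera-to-subject distance needed for the same framing at different focal lengths.
# Pinhole approximation: D = f * W / w, valid when D is much larger than f.
# ASSUMPTIONS: 24.9 mm sensor width (roughly Super 35) and a hypothetical 0.6 m wide close-up.

SENSOR_WIDTH_MM = 24.9


def camera_distance_m(focal_length_mm: float, frame_width_m: float,
                      sensor_width_mm: float = SENSOR_WIDTH_MM) -> float:
    """Distance in metres at which frame_width_m spans the full sensor width.

    Units: mm * m / mm leaves metres.
    """
    return focal_length_mm * frame_width_m / sensor_width_mm


if __name__ == "__main__":
    CLOSE_UP_WIDTH_M = 0.6  # hypothetical head-and-shoulders framing
    for focal in (24, 35, 50, 85):
        distance = camera_distance_m(focal, CLOSE_UP_WIDTH_M)
        print(f"{focal}mm: place the camera about {distance:.2f} m away")
```

Under these assumptions the same close-up puts the camera roughly 0.6 m from the subject on a 24mm but about 2 m away on an 85mm. That change in distance, not the focal length itself, is what alters the perspective of the face and background.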

So, prime lenses push you to think more about the focal length you choose, which may elevate the visual storytelling by making it a deliberate decision rather than an incidental one.

2 - OPTICAL QUALITY

The practical reasons behind choosing a lens are important, but so too is the look that the lens produces. Due to their design, prime lenses are generally considered to have a higher quality optical look than most equivalent zooms, mainly because their construction and design are much simpler and more straightforward than those of zooms.

Inside a lens you’ll find different pieces of curved glass, called elements, which light passes through to produce an image. Because prime lenses only need to be built for a single focal length, they can use fewer of these glass elements, and the elements inside the lens don’t have to move in order to zoom.

Less glass means fewer surfaces for light to reflect off and scatter between, which usually means sharper, higher contrast images. Prime lenses also only need to be corrected for optical aberrations, like distortion and chromatic aberration, at a single focal length. Zooms need to be corrected across their whole range of focal lengths, which is trickier to do.

Therefore, your average prime lens will be sharper, with less distortion, or bending of the image, and less colour fringing between dark and light areas.

I should add as a caveat that modern, high-end cinema zooms are built to a level of optical quality comparable to many prime lenses, but you pay a pretty penny for that level of cutting-edge engineering. When you compare zooms and primes in a similar price range, primes usually have the optical edge.

3 - APERTURE

A lens’ aperture is the size of the round opening, formed by the iris inside the lens, that lets in light. A large opening, which has a lower T-stop or f-stop number like T1.3, lets in more light, while a smaller opening, with a stop such as T2.8, lets in less light.
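Because the light a lens admits scales with 1 / N², where N is the stop number, and one stop is a doubling or halving of light, the gap between two stops works out to 2 × log₂(N_slow / N_fast). The sketch below applies that to the T1.3 and T2.8 examples; it treats T-stops as directly comparable, which is what they are designed for.

```python
import math

# Sketch: how many stops of light separate two apertures.
# Light admitted scales with 1 / N**2; one stop is a factor of two in light,
# so the difference in stops is 2 * log2(N_slow / N_fast).


def stops_between(fast_stop: float, slow_stop: float) -> float:
    """Return how many stops more light fast_stop admits than slow_stop."""
    return 2 * math.log2(slow_stop / fast_stop)


def light_ratio(fast_stop: float, slow_stop: float) -> float:
    """Return how many times more light fast_stop admits than slow_stop."""
    return (slow_stop / fast_stop) ** 2


if __name__ == "__main__":
    print(f"T1.3 vs T2.8: {stops_between(1.3, 2.8):.2f} stops, "
          f"{light_ratio(1.3, 2.8):.1f}x the light")
```

The T1.3 lens lets in just over two stops more light than the T2.8 lens, roughly 4.6 times as much, which is why the familiar sequence 1.4, 2, 2.8, 4 steps by about √2 per stop.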

Once again, because of the extra glass and more complex design required to build zoom lenses, primes tend to have a faster stop, meaning a wider maximum aperture.
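One way the extra glass shows up is in the T-stop, which is the f-stop corrected for the light actually transmitted: T = N / √(transmittance). The sketch below models transmittance from the number of air-to-glass surfaces. The 0.5% loss per coated surface and the surface counts for the “prime” and “zoom” are hypothetical assumptions chosen only to illustrate the effect, and absorption inside the glass is ignored.

```python
import math

# Sketch: why extra glass can make a lens "slower" in T-stops than its f-stop suggests.
# A T-stop is the f-stop corrected for transmitted light: T = N / sqrt(transmittance).
# ASSUMPTIONS: each air-glass surface loses 0.5% of the light (a rough figure for
# multi-coated glass); absorption inside the glass is ignored.

LOSS_PER_SURFACE = 0.005


def transmittance(num_surfaces: int, loss_per_surface: float = LOSS_PER_SURFACE) -> float:
    """Fraction of light that survives num_surfaces air-glass surfaces."""
    return (1 - loss_per_surface) ** num_surfaces


def t_stop(f_stop: float, num_surfaces: int) -> float:
    """Approximate T-stop for a lens with the given f-stop and surface count."""
    return f_stop / math.sqrt(transmittance(num_surfaces))


if __name__ == "__main__":
    # Hypothetical surface counts for a simpler prime and a more complex zoom.
    for label, surfaces in (("prime", 14), ("zoom", 40)):
        print(f"{label}: f/2.8 -> about T{t_stop(2.8, surfaces):.2f}")
```

Under these assumptions the two lenses end up less than a quarter of a stop apart, so transmission loss is only one contributing factor; the larger constraints on a zoom’s maximum aperture come from its overall optical design.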

When it comes to cinema glass, each extra stop of light a lens can let in is precious and commands a higher price tag. Shooting with a wide aperture comes with a few advantages. It means you can expose an image in darker, low light conditions. It also gives a shallower depth of field, throwing more of the background out of focus into bokeh, which separates the subject from the background and is generally considered ‘cinematic’.

This also allows you to be more deliberate about what is in and out of focus, which is a way of guiding the audience’s gaze to a certain part of the frame. So, for cinematographers or photographers who want fast lenses, primes are the way to go.
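The link between aperture and how much of the frame falls out of focus can be sketched with the standard thin-lens depth of field approximation. The code assumes a circle of confusion of 0.025 mm, a figure often used for Super 35, and uses the f-number rather than the T-stop, since depth of field depends on the geometric aperture rather than on light transmission. The 50mm lens and 2 m focus distance are illustrative choices.

```python
import math

# Sketch: total depth of field using the standard thin-lens approximation.
# ASSUMPTIONS: circle of confusion of 0.025 mm (often used for Super 35), and the
# f-number stands in for the T-stop because DoF depends on the geometric aperture.

COC_MM = 0.025


def depth_of_field_mm(focal_mm: float, f_number: float, subject_mm: float,
                      coc_mm: float = COC_MM) -> float:
    """Return the distance between the near and far limits of acceptable focus (mm)."""
    h = focal_mm ** 2 / (f_number * coc_mm)   # hyperfocal distance minus the focal length
    offset = subject_mm - focal_mm
    if offset >= h:                            # focused at or beyond hyperfocal
        return math.inf
    near = subject_mm * h / (h + offset)
    far = subject_mm * h / (h - offset)
    return far - near


if __name__ == "__main__":
    # Hypothetical example: a 50mm lens focused on a subject 2 m away.
    for n in (1.4, 2.8):
        print(f"50mm at f/{n}, 2 m: about {depth_of_field_mm(50, n, 2000):.0f} mm in focus")
```

With these assumptions, opening up from f/2.8 to f/1.4 shrinks the zone of acceptable focus from about 22 cm to about 11 cm, roughly halving it, which is what lets a fast prime isolate a subject so strongly.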

4 - SIZE

If you’re working in wide open spaces with a dolly that carries a heavy cinema camera, then the size of the lens is less of a concern. But more often than not that isn’t the case, and having a physically smaller lens on the camera makes things much easier.

By now we know that zooming requires extra glass and extra glass requires a larger housing. This means zooms are heavier, longer and wider than primes.