How Movies Are Shot On Digital Cinema Cameras

INTRODUCTION

In a prior video I gave an overview of how movies today are shot using film. While that’s good to know, it won’t apply to the vast majority of movies, which are now captured, edited and presented using digital technology.

So, let’s break down the workflow of how most movies these days are shot on digital cinema cameras: all the way from choosing and setting up a camera to exporting the final, finished product.

CAMERA SETUP

The digital cinema camera that cinematographers choose to shoot a movie on will likely be influenced by three decisive factors. One, the camera’s ergonomics. Two, the camera’s internal specs. And three, the cost of using the camera - because as much as you may want to shoot on an Alexa 65 with Arri DNAs it may be beyond what the budget allows.

Once you have an idea of what budget range the camera you select must fall into, it's time to think about the remaining two factors.

Ergonomic considerations are important. You need to think about the kind of camera movement you may need and what camera can be built into the necessary form factor to achieve that. If it’s mostly handheld work you may want something that is easy to operate on the shoulder. If you need to do a lot of gimbal or drone shots then a lighter body will be needed.

Also think about what accessories it’ll be paired with. What lens mount does it have? What are the power options? Do you need a compatible follow focus? What video out ports does it have? Does it have internal ND filters? If so, how many stops and in what increments?

The answers to all of these questions will be determined by the kind of project you are shooting.

The second consideration is the internal recording specs that the camera has. What size is the sensor? Do you need to shoot in RAW or ProRes? Does it have a dual ISO? Do you need to shoot at high frame rates? What kind of codec, dynamic range and colour depth does it record? How big are the file sizes?

Once you’ve chosen a camera that best fits the needs of the project it’s time to set it up properly before the shooting begins.

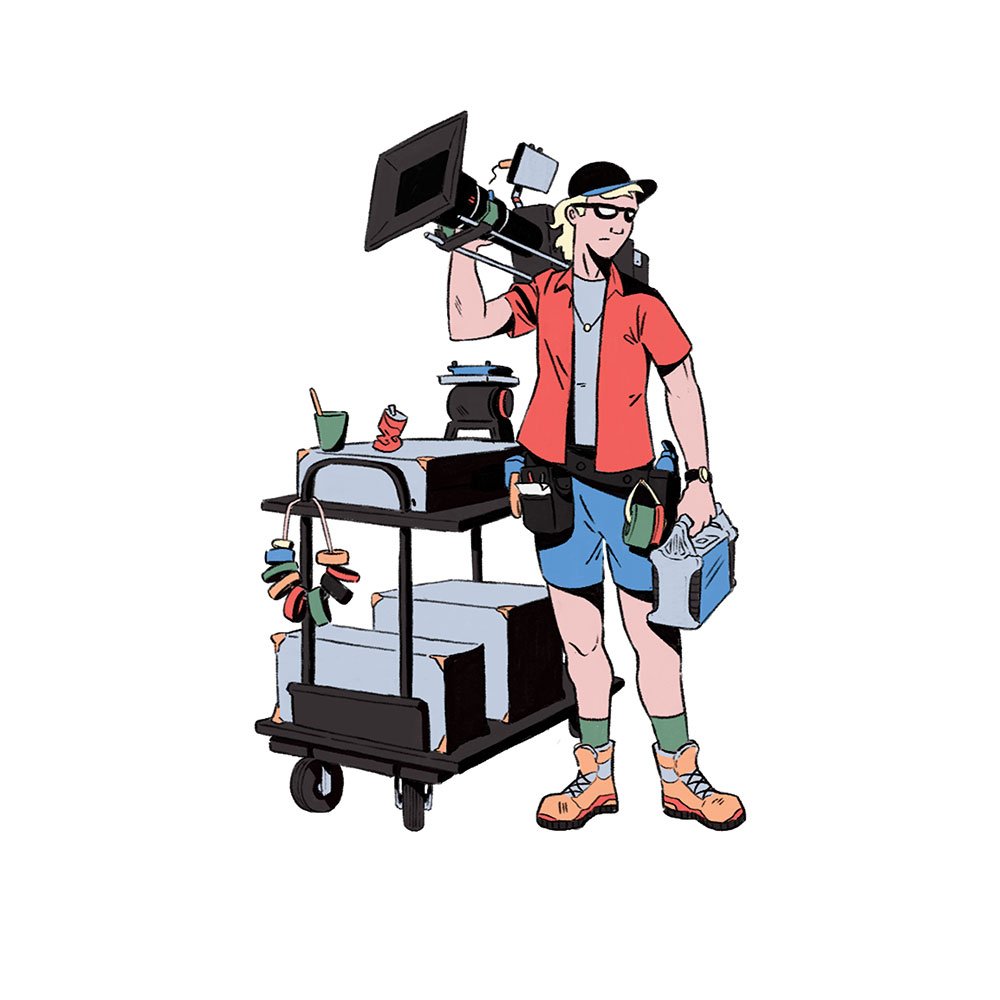

On high end productions this will be done by the DIT or digital imaging technician, under the direction of the DP. At the beginning of every shoot day or at regular intervals the DIT will continue to check that the specs are correctly dialled into the camera.

They will start by setting the codec that the camera records in: such as Arriraw or ProRes. Next, they’ll make sure that the correct sensor coverage is chosen. For example if using anamorphic lenses a fuller, squarer coverage of the sensor may be desired and a de-squeeze factor applied.
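To make the de-squeeze idea concrete, here is a minimal sketch of the arithmetic, assuming the de-squeeze simply stretches the recorded image horizontally by the lens's squeeze factor. The function name and the 4:3 and 2x figures are illustrative assumptions, not settings taken from any particular camera.

```python
def desqueezed_aspect(sensor_width, sensor_height, squeeze):
    """Return the displayed aspect ratio after de-squeezing.

    sensor_width and sensor_height describe the recorded sensor area
    (any unit, only the ratio matters). squeeze is the lens squeeze
    factor, for example 2.0 for a 2x anamorphic lens.
    """
    return (sensor_width * squeeze) / sensor_height


# A squarer 4:3 sensor area paired with a 2x anamorphic lens
ratio = desqueezed_aspect(4, 3, 2.0)
print(f"{ratio:.2f}:1")  # 2.67:1
```

This is why a squarer sensor area suits anamorphic lenses: the de-squeeze widens the image, so starting from a taller recording still ends in a widescreen frame.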

They’ll then dial in the resolution required, such as 4K, 4K UHD or 2K. Sometimes this might change during shooting if cinematographers want to capture clips at higher frame rates than their base resolution allows.

Next, they’ll set the base frame rate for the project. Even if the cinematographer decides to change the frame rate during shooting, such as to capture slow motion, the base frame rate will never change. This is the same frame rate that the editor will use when they create their project file.
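The relationship between the capture frame rate and the base frame rate can be sketched with a little arithmetic. This is only an illustration: the function names are made up, and the 96fps and 24fps values are example numbers rather than settings from any specific project.

```python
def slow_motion_factor(capture_fps, base_fps):
    """How many times slower the action plays back on the base-rate timeline."""
    return capture_fps / base_fps


def playback_seconds(real_seconds, capture_fps, base_fps):
    """Screen time of a moment that lasted real_seconds on set."""
    return real_seconds * capture_fps / base_fps


# Shooting at 96fps for a project with a 24fps base frame rate
print(slow_motion_factor(96, 24))   # 4.0
print(playback_seconds(2, 96, 24))  # 8.0
```

So two seconds of action captured at 96fps fills eight seconds of screen time once it is played back at the 24fps base rate.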

With the basic settings dialled in, the DP may now either be happy to shoot with a regular Rec709 LUT or they may ask the DIT to upload a custom LUT that they’ve downloaded or created.

Cinema cameras are set to record a flat colour profile in order to maximise how the images can be manipulated in post. However it can be difficult to get an idea of how the final image will look when working with a flat log reference. So, a LUT is added on top of the image - which isn’t recorded onto the footage. This applies a look, like a colour grade, to the image so that cinematographers can better judge their exposure and what the final image will look like.

Finally, frame lines will be added and overlaid over each monitor so that operators can see the frame with the correct aspect ratio that has been chosen for the project.

Now, the camera is ready to go.

SHOOTING

While shooting, the DP will usually manipulate the camera's basic settings themselves and set exposure. These settings include the EI, white balance, shutter speed, frame rate, internal ND filters and the aperture of the lens.

There are different ways of judging exposure on digital cinema cameras. Most commonly this is done by referring to how the image itself looks on a monitor and occasionally also referring to the camera's built-in exposure tools. On high end cameras the most used exposure tool is false colour, which assigns a colour reading to different parts of the image based on how brightly or dimly exposed they are.

If you see red it means an area is bright and overexposed. Green refers to an evenly exposed 18% middle grey - a good reference for skin tones - while a dark blue or purple indicates underexposure.
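The false colour idea can be sketched as a simple lookup from signal level to colour. The thresholds below are hypothetical values chosen purely for illustration: each camera manufacturer defines its own bands, so treat `FALSE_COLOUR_BANDS` and `false_colour` as assumptions rather than any real camera's behaviour.

```python
# Hypothetical bands: (lowest level, highest level, overlay colour).
# Levels are normalised signal values from 0.0 (black) to 1.0 (clipped).
FALSE_COLOUR_BANDS = [
    (0.00, 0.05, "purple"),  # deep shadows, underexposed
    (0.38, 0.42, "green"),   # roughly where middle grey sits
    (0.97, 1.01, "red"),     # highlights at or near clipping
]


def false_colour(level):
    """Return the overlay colour for a normalised signal level."""
    for low, high, colour in FALSE_COLOUR_BANDS:
        if low <= level < high:
            return colour
    return "grey"  # everything else stays as a monochrome image


print(false_colour(0.40))  # green
print(false_colour(1.00))  # red
```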

There are also other exposure tools, often found on broadcast cameras, such as histograms, waveforms or zebras, which cinematographers may use to assess their exposure. Alternatively, exposure can be measured by DPs with a light meter, however this is becoming increasingly rare when working with digital cameras.

On bigger jobs with multiple cameras, the DP may also request that the DIT help set the exposure of each camera. For example, I’ve worked on jobs where there are say four to six cameras shooting simultaneously. The loader or assistant for each camera will be hooked up to a radio with a dedicated camera channel.

The DIT will have a station set up in a video village where they get a transmitted feed from all of the cameras to a calibrated monitor with exposure assist tools. While setting up for each shot they will advise each camera over the radio channel whether the assistants need to stop up or down on the lens and by how much so that all the cameras are set to the same exposure level and are evenly balanced.

For example they may say, ‘B-Cam open up by half a stop’. The assistant will then change the aperture from T4 to T2.8 and a half. On other shoots they may even be given a wireless iris control which is synced up to an iris motor on each camera - such as an Arri SXU. They can then remotely adjust the stop on each camera while judging exposure on a calibrated monitor.
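The half stop in that example follows from how stops work: each full stop multiplies or divides the T-number by the square root of two. A minimal sketch of that arithmetic, with a made-up function name:

```python
import math


def adjust_t_stop(t_stop, stops_open):
    """Return the new T-number after opening up by stops_open stops.

    Opening up one full stop divides the T-number by sqrt(2).
    A negative stops_open value stops the lens down instead.
    """
    return t_stop / (math.sqrt(2) ** stops_open)


# 'B-Cam open up by half a stop', starting from T4
print(round(adjust_t_stop(4.0, 0.5), 2))  # 3.36
```

The result sits between T2.8 and T4, which is the position assistants call T2.8 and a half.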

The DIT, under the direction of the DP, may also change to different LUTs for different scenarios. For example, if they are shooting day for night, a specific LUT needs to be applied to get the correct effect.

DATA MANAGEMENT

Once the big red button has been pushed and some takes have been recorded digitally onto a card inside the camera, it’s time to transfer that footage from the card or capture device to a hard drive. This secures the footage and organises it so that editors can work with it in post production.

This is done either by the DIT or by a data wrangler using transfer software. The industry standard is Silverstack, which allows you to offload the contents of a card onto hard drives, then back up, manage and structure how the files are organised - usually by shoot day and the card name.

The standard rule is to always keep three copies of the footage - one master copy and two backups. This is to ensure that even if one of the drives fails or is somehow lost or stolen, there are still copies of the precious footage in other locations. Even though data storage can be expensive, it's almost always cheaper than having to reshoot any lost footage.
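Keeping three copies only helps if every copy is identical to what was on the card. One common way to check this is to compare checksums. The sketch below is an assumption about how such a check could be done with Python's standard library; it is not how Silverstack or any other offload tool is implemented, and the function names are hypothetical.

```python
import hashlib


def file_checksum(path, chunk_size=1024 * 1024):
    """Return the SHA-256 checksum of a file, read in chunks to save memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def copies_match(source, copies):
    """True only if every copy has the same checksum as the source file."""
    expected = file_checksum(source)
    return all(file_checksum(copy) == expected for copy in copies)
```

With a check like this, `copies_match` would be run on the master and both backups before the card goes back to the loader for formatting.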

Once this footage has been secured on the hard drives the card can be handed back to the loader who can format it in camera. The footage is carefully viewed by the DIT to make sure that the focus is sharp and there aren’t any unwanted artefacts, aberrations, pulsing light effects or dead pixels - which may require the camera to be switched out.

The next job of the DIT or data wrangler is to prepare the drive for the editor. Because footage from high end digital cinema cameras comes in huge file sizes and is very intensive for computers to work with, smaller file size versions of each clip need to be created for the editor to work with so that there is no playback lag when they are editing. These files are called proxies or transcodes.

This is usually done in software like DaVinci Resolve. The DIT will take the original camera footage, such as the log 4K ProRes 4444 files, apply whatever LUT was used during shooting on top of that log footage, then process and output a far smaller video file, such as a 1080p ProRes 422 Proxy clip. Importantly these proxies should be created with the same clip name as the larger original files. This will be important later.

Data wranglers may also be tasked with doing things like creating a project file and syncing up sound to video clips to help speed up the edit.

POST PRODUCTION

Once shooting wraps the precious hard drive which includes the raw footage as well as the proxies will be sent over to the editor. There are two stages to the post production process: the offline edit and the online edit.

The first stage, offline, refers to the process of cutting the film together using the smaller, low res transcoded proxy files with video editing software, such as Avid or Adobe Premiere Pro. This will be done by the editor and director.

They will usually go through multiple cuts of the movie, getting feedback and adjusting things along the way, until they arrive at a final cut of the film. This is called a locked cut or a picture lock - meaning that all the footage on the timeline is locked in place and will no longer be subject to any further changes.

Having a locked final cut indicates the end of the offline edit and the start of online. Online is the process of re-linking the original, high res, raw footage that came from the camera.

To do this the offline editor will export a sort of digital ledger of every cut that has been made on the timeline - in the form of a translation file such as an EDL or XML.
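To give a feel for what this ledger holds, here is a rough sketch that reads one event line laid out in the style of a CMX3600 EDL. The sample line, the clip name and the function names are all made up for illustration, and the timecode maths assumes a non-drop-frame 24fps project.

```python
def parse_edl_event(line):
    """Split one EDL event line into its named fields."""
    fields = line.split()
    event, reel, track, transition = fields[:4]
    src_in, src_out, rec_in, rec_out = fields[-4:]
    return {
        "event": event,            # running number of the cut
        "reel": reel,              # source clip or reel name
        "track": track,            # V for video, A for audio
        "transition": transition,  # C means a straight cut
        "src_in": src_in, "src_out": src_out,  # range used from the clip
        "rec_in": rec_in, "rec_out": rec_out,  # position on the timeline
    }


def timecode_to_frames(timecode, fps=24):
    """Convert HH:MM:SS:FF non-drop-frame timecode to a frame count."""
    hh, mm, ss, ff = (int(part) for part in timecode.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


# Hypothetical event: five seconds of clip A001C003 placed at the timeline start
line = "001  A001C003 V     C        01:00:10:00 01:00:15:00 00:00:00:00 00:00:05:00"
cut = parse_edl_event(line)
length = timecode_to_frames(cut["src_out"]) - timecode_to_frames(cut["src_in"])
print(cut["reel"], length)  # A001C003 120
```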

This file is used to swap out the low res proxies in the final cut timeline with the high res log or RAW footage that was originally on the camera card. This is why it is important that the proxies and the original files have the same file names so that they can easily be swapped out at this stage without any hiccups.
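The swap itself comes down to matching names. Here is a minimal sketch, assuming proxies and originals share a clip name and differ only in folder and file extension. The folder names, extensions and the `relink` function are hypothetical, and real editing software handles this internally.

```python
from pathlib import Path


def relink(proxy_paths, original_paths):
    """Pair each proxy with the original file that shares its clip name.

    Returns a dict of proxy -> original, plus a list of proxies
    that have no matching original.
    """
    originals_by_name = {Path(p).stem: p for p in original_paths}
    relinked, missing = {}, []
    for proxy in proxy_paths:
        clip_name = Path(proxy).stem  # file name without its extension
        if clip_name in originals_by_name:
            relinked[proxy] = originals_by_name[clip_name]
        else:
            missing.append(proxy)
    return relinked, missing


proxies = ["proxies/A001C003.mov", "proxies/A001C004.mov"]
originals = ["raw/A001C003.ari"]
relinked, missing = relink(proxies, originals)
print(relinked)  # {'proxies/A001C003.mov': 'raw/A001C003.ari'}
print(missing)   # ['proxies/A001C004.mov']
```

Any proxy that was renamed along the way ends up in the missing list, which is exactly the kind of hiccup that matching clip names avoids.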

The original files can now go through a colour grade and any VFX work needed can be performed on them. The colourist will use any visual references, the shooting LUT, or perform a colour space transform to do basic colour correction to balance out the footage. They’ll then apply the desired grade or look to the original footage, all the while getting feedback from the director and cinematographer.

The main industry standard software packages for colour grading are Baselight and DaVinci Resolve. Once the grade is complete, the final, fully graded clips are exported. They can then be re-linked back to the timeline in the original video editing software. A similar process will happen with the final sound mix where it is dropped in to replace the raw sound clips on the editing timeline, so that there is now a final video file and a final audio file on the timeline ready for export.

The deliverables can then be created. This may be in the form of a digital video file or a DCP that can be distributed and played back on a cinema projector.