Cinematography Style: Barry Ackroyd

INTRODUCTION

Barry Ackroyd is a cinematographer who plays to his strengths. Over his career he’s developed an instantly recognisable style to his photography that is based around a vérité, documentary-esque search for truth and capturing realism.

He works with multiple on-the-ground, handheld, reactive cameras that use bold, punch-in zooms, and has been hired by directors such as Ken Loach and Paul Greengrass who highly value a sense of realism and heightened naturalism in their films.

So, in this episode of Cinematography Style I’m going to take a look at the renowned work of Barry Ackroyd by going over his philosophical ideas on cinematography and outlining the gear that he uses to execute his vision.

BACKGROUND

“I’m a cinematographer who was brought up in documentaries in Britain on small budgets.”

Ackroyd’s initial plans to become a sculptor changed while he was studying Fine Arts at Portsmouth Polytechnic after he discovered the medium of 16mm film.

He began working as a television cameraman in the 1980s, mainly shooting documentaries. It was there that he first encountered director Ken Loach. After working on a couple of documentaries together, Ackroyd was offered an opportunity to shoot Riff-Raff for Loach - his first feature length fiction film.

He continued to shoot numerous fiction films and documentaries for Loach during this period, culminating in The Wind That Shakes The Barley, which won the Palme d’Or at the Cannes Film Festival. Following this success he began working on fiction projects for other well-known directors such as Paul Greengrass, Kathryn Bigelow and Adam McKay.

PHILOSOPHY

“Sometimes it’s better just to play to your strengths rather than to try to diversify too much…That was a choice I made, to play to my strengths.”

One of those strengths is a look rooted in a documentary style of working, informed by his early work on TV docs. Those documentaries usually relied on operating the camera handheld from the shoulder in order to record moments as they happened live. In the real world, events often only happen once, so you need an easily mobile camera to observe and capture them.

This is the opposite of fiction filmmaking, where events and scenes can be played out multiple times, and are more often than not photographed in a carefully curated, composed visual style. Rather than going the usual fiction cinematic route, Ackroyd took documentary conventions and ways of working and applied them to fiction filmmaking.

For example, whenever possible he prefers to shoot on real locations rather than on a constructed set or a soundstage, even if that real location is a ship on the ocean.

Ackroyd tends to steer away from setting things up too perfectly and instead leans towards a look where capturing a version of reality is far more important than capturing a ‘perfect’ image.

“I think if you look at my work I’m always trying to push what I’ve done before…and actually I push it towards imperfection…There’s a kind of state that you get into where you’re just in tune with what’s happening in front of the camera.”

To capture images realistically, honestly and with as few barriers as possible he relies on working with multiple camera operators and puts a lot of trust in his crew members. He gives his crew lots of credit on set and in interviews, from the focus puller to the sound recordist, and maintains the importance of teamwork and a group effort in creating a film.

“I used to say that in documentaries the best shot that you get in documentaries is out of focus and underlit and looks rubbish. You know that it had to be in the film because it was absolutely right at the time…I think that’s what you’re striving for, you know. Not to overwhelm people with the beauty. Not to fall in love with the landscape…But to get the picture that…you’re involved with it.”

An example of how he seeks authenticity through imperfection can be found in his approach to blocking scenes with directors and actors. Usually actors rehearse a scene on set, and marks are then put down on the floor to indicate exactly where they must stand in order to be perfectly lit, perfectly framed and perfectly angled for the shot.

Ackroyd prefers not to mark actors. He sets up any lights he needs either overhead or outside the set so that the actors have the freedom to move around as they like when they play out the scene. Since they don’t have to worry about hitting specific marks, he finds that the actors loosen up more, which injects a realist spontaneity into how their performances are captured.

Sometimes this leads to technical imperfections like moments that are out of focus or frames that aren’t classically composed. But it also injects an energy into the images which is undeniable.

GEAR

“You know I like to get physically involved. We ran around with the cameras. We had four or five cameras at times…In any one setup you’re trying to talk to all the guys, see what they’ve done, see what the next shot should be and give, you know, support and advice.”

As we mentioned, Ackroyd likes shooting with multiple handheld cameras. This allows his operators to quickly react and capture details or moments of performance. It also provides the director and the editor with multiple angles and perspectives which they can cut to in order to build up the intensity and pacing in a scene.

Directors he has repeatedly collaborated with, such as Paul Greengrass and Kathryn Bigelow, are known for their preference for quick cutting. Ackroyd’s style provides them with the large number of angles needed to work in this way.

One of the most important camera tools he uses is focus. He describes focus as the best cinematic tool, even better than a dolly, crane or tripod, because it mimics what we naturally do with our eyes and can be used to shift the audience’s attention to a particular part of the frame. He isn’t overly strict with his focus pullers; he prefers a natural, more organic approach, where people drift in and out of focus, to having every single shot use perfectly timed, measured and calculated focus pulls.

Another important tool in his toolbox is the zoom lens. Again, this goes against traditional fiction cinematography principles, which value prime lenses over zooms, the type of lens most documentaries are shot with. He uses quick punch-in zooms to direct the audience’s attention in the moment. For example, if a line of dialogue or an energetic moment of performance is particularly important, his operators may push into it with a quick zoom for emphasis.

His choice of camera gear is a bit of a mishmash. In the same film he may use different formats, such as digital, 35mm and 16mm film, with different prime and, of course, zoom lenses. For example, on Captain Phillips the aerial shots were captured digitally, while sequences in the fishing village and on the skiff were shot in Super 16, before switching to 35mm film once the characters boarded the large shipping vessel.

He likes the texture of film and has often used the grainier 16mm format to complement his look. He famously used Super 16 to support the raw, on-the-ground documentary aesthetic of The Hurt Locker.

“Well then I thought it has to be Super 16. We have to get back to the basics. Get down to the lenses you can carry and run with and will give you this fantastic range of wide shots and big close ups…The first thing everybody said was that, ‘well, the quality is not going to be good.’ Well, nobody has criticised the quality of the film. They’ve only praised it.”

He has a preference for Fujifilm stock as it fares well in high contrast lighting situations. When shooting on film he would sometimes purposefully underexpose the negative and then bring up the levels later in the DI in order to introduce more grain to the image.

Ackroyd liked to combine 250D and 500T Fujifilm stocks when shooting Super 16 or 35mm. However, after Fujifilm discontinued its motion picture film stocks, he transitioned to shooting on Kodak film or with digital cameras, mainly the Arri Alexa Mini.

On Detroit he used the Arri Alexa Mini in Super 16 mode and shot with Super 16 lenses to introduce noise and grain to the image and get a Super 16 feel, which was further amped up in the grade, all while maintaining the benefits of a digital production.

The Aaton XTR is his go-to Super 16 camera, so much so that he owns one. He has used different 35mm cameras such as the Aaton Penelope, the Arriflex 235, the Moviecam Compact and the Arricam LT. Some of his favourite Super 35 zooms are the Angenieux Optimo 15-40mm and, in particular, the 28-76mm, which are both light enough to be handheld and provide a nice zoom range that he can use to punch in with.

He’s also used the Angenieux Optimo 24-290mm, sometimes with a doubler when he needs a longer zoom. It’s too heavy to be used handheld but he has used it with a monopod to aid in operating the huge chunk of a lens and still preserve a handheld feel. Some other zooms he has used include a rehoused Nikon 80-200mm and the Canon 10.6-180mm Super 16 zoom.
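To put rough numbers on these zoom ranges, the sketch below computes the horizontal field of view at each end of a zoom and the "punch-in" magnification from widest to longest setting. It assumes a Super 35 frame width of about 24.9mm, treats a doubler as a simple 2x focal-length multiplier and ignores the light a doubler costs; the function names are hypothetical.

```python
import math

SUPER35_WIDTH_MM = 24.9  # assumed approximate Super 35 frame width


def horizontal_fov_deg(focal_mm: float, sensor_width_mm: float = SUPER35_WIDTH_MM) -> float:
    """Horizontal angle of view for a lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_mm)))


def punch_in(wide_mm: float, tele_mm: float, extender: float = 1.0) -> dict:
    """Summarise a zoom from its widest to its longest setting, optionally through an extender."""
    wide, tele = wide_mm * extender, tele_mm * extender
    return {
        "range_mm": (wide, tele),
        "fov_deg": (round(horizontal_fov_deg(wide), 1), round(horizontal_fov_deg(tele), 1)),
        # Subject size in frame scales with focal length, so a full punch-in enlarges it by tele / wide.
        "magnification": round(tele / wide, 2),
    }


for name, wide, tele, ext in [
    ("15-40mm", 15, 40, 1.0),
    ("28-76mm", 28, 76, 1.0),
    ("24-290mm with 2x doubler", 24, 290, 2.0),
]:
    print(name, punch_in(wide, tele, ext))
```

A full zoom-in on the 28-76mm enlarges the subject roughly 2.7 times, which is plenty for a quick push onto a line of dialogue while staying light enough to shoulder.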

Although he prefers zooms, he often carries a set of faster primes, such as Zeiss Super Speeds or Cooke S4s, which can be used in low light situations.

Because there are no blocking or focus marks, he usually gives his focus pullers a generous, deep stop of around T/5.6 and a half to work with.
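Stops are a logarithmic scale: each full stop multiplies the T-number by √2 and halves the light reaching the sensor. A minimal sketch, assuming "T/5.6 and a half" means half a stop further closed than T/5.6, shows it lands at roughly T/6.7 (the half-stop value conventionally written between 5.6 and 8) and passes about 70% of the light of T/5.6. The helper names are hypothetical.

```python
import math


def t_number(stops_from_t1: float) -> float:
    """T-number after closing down the given number of stops from T/1 (each stop is a factor of sqrt(2))."""
    return math.sqrt(2) ** stops_from_t1


def relative_light(stops_closed: float) -> float:
    """Fraction of light transmitted after closing down by the given number of stops."""
    return 2 ** -stops_closed


t56 = t_number(5)         # nominal "T/5.6" is 2 ** 2.5, about 5.66
t56_half = t_number(5.5)  # half a stop further closed, about 6.73
print(f"T/{t56:.2f} -> T/{t56_half:.2f}, light x{relative_light(0.5):.2f}")
```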

To further support his look based on realism and documentary, he lights in a very naturalistic manner. He tries to avoid lighting exteriors altogether, and for interiors adds motivated touches of artificial light when he needs to balance the exposure in a scene. A lighting tool he likes to use for this is the single Kino Flo tube, which can easily be rigged overhead or out of sight to provide a low level of fill.

CONCLUSION

Barry Ackroyd’s cinematography is more about deconstructing photography than it is about trying to produce a perfectly beautiful image.

To him imperfections are a signal of authenticity and an expression of realism rather than a flaw. Breaking down an image can’t be done competently without a great degree of skill and knowledge.

His films aren’t created by just picking up a bunch of cameras and pointing them in the general direction of the action; rather, they are made through deliberate thought and the cultivation of a style that conveys as much intensity, reality and truth as possible.

Does Sensor Size Matter?

INTRODUCTION

The sensor or film plane of a camera is the area that light hits to record an image. The size of this area can vary a lot depending on the camera, with each sensor size or format having a subtly different look.

Since there are loads of different cameras with loads of different formats and sensor sizes out there to choose from, in this video I’ll try to simplify it a bit by going over the five most common motion picture formats and discussing the effect that different sensor sizes have on an image.

5 MOTION PICTURE FORMATS

The size of a camera's film plane or sensor ranges all the way from the minuscule one third inch sensor found in smartphones or old camcorders up to the massive 70x52mm 15-perf Imax film negative. But, rather than going over every single sensor in existence, I’m going to take a look at five formats which are far and away the most popular sizes used in film production today and have been standardised throughout film history.

While there are smaller sizes like 8mm film, or sizes in between like the Four Thirds sensor in the Blackmagic Pocket Cinema Camera 4K, these are used far less frequently in professional film production and are outliers rather than standards. I’ll also only be looking at motion picture formats, so I won’t be going over any photographic image sizes such as 6x6 medium format.

The smallest regularly used format is Super 16. The film’s smaller size of around 7.4 by 12.5mm makes it a cheaper option than the larger gauge 35mm, as less physical film stock is required.

Due to this it was often used in the past to capture lower budget productions. Now that digital has overtaken film, Super 16 is mainly chosen for its look. Its lower resolution and prominent film grain mean that it is often used today to evoke a rough, documentary-esque or nostalgic feeling.

Some digital cameras, such as the original Blackmagic Pocket Cinema Camera, have a sensor that covers a similar area to Super 16, and cameras such as the Arri Alexa Mini have specialised recording modes which only sample a Super 16 sized area of the sensor.

Moving up, the next, and by far the most common, format is Super 35. This format is based on 35mm motion picture film, with a full-aperture frame of approximately 24.9 by 18.7mm. The name 35mm refers to the total width of the film strip, including the perforated edges on either side of the negative area.

Depending on the budget, aspect ratio and lenses, different frame heights, measured in perforations, can be shot. The frame can be cropped to use less film stock or to extract a widescreen image when using spherical lenses. Shooting with anamorphic lenses, which optically squeeze the image horizontally, typically uses the full height of the negative or sensor, and the image is then de-squeezed at a later stage to get to a 2.39:1 aspect ratio.
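The de-squeeze step is simple arithmetic: the final aspect ratio is the captured width multiplied by the lens's squeeze factor, divided by the captured height. A minimal sketch with hypothetical helper names; the 1.2:1 capture area is an illustrative round number, not a quoted camera aperture. The same arithmetic shows that a 16:9 sensor would need a squeeze of roughly 1.34x to reach 2.39:1, close to the 1.33x squeeze of some anamorphic lenses made for such sensors.

```python
def desqueezed_aspect(capture_width: float, capture_height: float, squeeze: float) -> float:
    """Aspect ratio after stretching the image horizontally by the lens's squeeze factor."""
    return capture_width * squeeze / capture_height


def required_squeeze(capture_aspect: float, target_aspect: float = 2.39) -> float:
    """Squeeze factor that would turn a given capture aspect ratio into the target ratio."""
    return target_aspect / capture_aspect


print(round(desqueezed_aspect(1.2, 1.0, 2.0), 2))  # a 2x anamorphic on a 1.2:1 area
print(round(required_squeeze(16 / 9), 2))          # squeeze needed from a 16:9 area
```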

Many digital cinema camera sensors are modelled on this size, with some minor variations depending on the camera, such as the Arri Alexa Mini, the Red Dragon S35 and the Sony F65. Since this format is the most popular in cinema, most cinema lenses are designed to cover a Super 35 size sensor, meaning this format has the widest selection of cinema glass available on the market.

Stepping up from Super 35, we get to what is called a large format or full-frame sensor. This size is modelled on the 35mm still photography format used by DSLR cameras such as the Canon 5D, which is larger than Super 35. It’s also around the same size as 8-perf VistaVision film.

Although digital sensors differ a bit depending on the camera, it is usually about 36 by 24mm. Some cameras with this sensor size include the Alexa LF, the Sony Venice and the Canon C700 FF.

This large format is a middle ground between Super 35 and the next format up - 65mm.

Originally, this format was based on using 65mm gauge film which was 3.5 times as large as standard 35mm, and measured 52.6 by 23mm using 5 vertical perforations with a widescreen aspect ratio of 2.2:1. The Alexa 65 has a digital sensor that matches 65mm film and is a viable digital version of this format.

Finally, the largest possible motion picture format that you can shoot is Imax film. With an enormous 15 perforations, an Imax frame covers a 70.4 by 52.6mm image area.

Due to its enormous negative size and the large, specialised cameras required to shoot it, this format is prohibitively expensive and out of the budget range of most productions. But, it has seen a bit of a resurgence in recent years on high budget blockbusters from directors such as Christopher Nolan who champion the super high fidelity film format.

THE EFFECTS OF SENSOR SIZES

With these five formats in mind, let’s examine some of the effects and differences between them. There are a few things that choosing a format or sensor size affects.

The most noticeable optical effect is that different formats have different fields of view. This means that if you put the same 35mm lens on a Super 16, a Super 35 and a large format camera, the smaller the sensor is, the tighter the recorded image will appear.

So, with the same lens, the field of view on a large format camera will be much wider than on a Super 16 camera. Since the field of view is wider, larger formats also have a different feeling of depth and perspective.

Because of this difference, the sensor size determines the range of focal lengths that need to be used on the camera. To compensate for the field of view differences, smaller formats like Super 16 need wider angle lenses to see the same amount of the scene, while larger formats need longer lenses for that same frame.

For example, to get the same field of view from a 35mm lens on a Super 35 sensor, a Super 16 camera needs to use a 17.5mm focal length and a large format, full-frame camera needs to use a 50mm focal length.
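Matching the horizontal field of view means scaling the focal length by the ratio of the frame widths. A minimal sketch, assuming rounded frame widths of 12.5mm (Super 16), 24.9mm (Super 35) and 36mm (full frame) and using hypothetical helper names; it reproduces the 17.5mm and 50mm figures above to within rounding.

```python
import math

# Approximate frame widths in mm (assumed, rounded values).
SENSOR_WIDTH_MM = {
    "Super 16": 12.5,
    "Super 35": 24.9,
    "Full frame": 36.0,
}


def horizontal_fov_deg(focal_mm: float, sensor_width_mm: float) -> float:
    """Horizontal angle of view for a lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_mm)))


def equivalent_focal_length(focal_mm: float, from_format: str, to_format: str) -> float:
    """Focal length on `to_format` giving the same horizontal field of view as `focal_mm` on `from_format`."""
    return focal_mm * SENSOR_WIDTH_MM[to_format] / SENSOR_WIDTH_MM[from_format]


for fmt, width in SENSOR_WIDTH_MM.items():
    focal = equivalent_focal_length(35, "Super 35", fmt)
    print(f"{fmt}: {focal:.1f}mm gives {horizontal_fov_deg(focal, width):.1f} degrees")
```

Every line of the output shows the same angle of view, which is the point: the format changes the focal length you need, not the framing you can achieve.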

Since focal length affects the depth of field an image has, depth of field is another effect of different formats. At the same stop, longer focal lengths produce a shallower depth of field, meaning a smaller area of the image is in focus. So full-frame cameras, which need longer focal lengths for the same framing, will have a shallower depth of field. This means that the larger the format, the more the background will be out of focus and the more the subject will be separated from the background.

This is helpful for creating a greater feeling of depth for wide shots which people often perceive as looking more ‘cinematic’.

One negative effect of this is that the 1st AC’s job of keeping the focus consistently sharp becomes far more difficult. For this reason smaller formats such as Super 16 are far more forgiving for focus pullers, as their deeper depth of field keeps more of the image in focus, so the margin for error is not as harsh.
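A rough sketch using the standard thin-lens hyperfocal formulas compares the three formats at the same framing (matched focal lengths), the same stop (f/2.8) and the same subject distance (3m). The circle-of-confusion values of 0.015mm for Super 16, 0.025mm for Super 35 and 0.030mm for full frame are assumptions, since published figures vary, and f-stops and T-stops are treated as interchangeable, which is a simplification.

```python
import math


def depth_of_field_mm(focal_mm: float, f_number: float, subject_mm: float, coc_mm: float) -> float:
    """Total depth of field (far limit minus near limit) from the standard hyperfocal-distance formulas."""
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    if subject_mm >= hyperfocal:
        return math.inf  # sharp from the near limit all the way to infinity
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return far - near


# Same framing on each format (matched focal lengths), same stop and subject distance.
# Circles of confusion are assumed values that scale with format size.
SETUPS = {
    "Super 16": (17.5, 0.015),
    "Super 35": (35.0, 0.025),
    "Full frame": (50.0, 0.030),
}

for fmt, (focal, coc) in SETUPS.items():
    dof = depth_of_field_mm(focal, f_number=2.8, subject_mm=3000, coc_mm=coc)
    print(f"{fmt}: about {dof / 1000:.2f}m in focus")
```

Under these assumptions the Super 16 setup keeps several times more depth in focus than full frame, which is exactly the extra margin a focus puller gets on the smaller format.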

The grain and resolution that an image has is also affected by the size of the format. The smaller the format is, the more noticeable the grain or noise texture will usually be, and the larger the sensor is the finer the grain will appear and the greater clarity and resolution it often has.

Sometimes cinematographers deliberately shoot smaller gauge formats like Super 16 to create a more textured image, while others prefer larger formats like 65mm for their super clean, sharp, low noise look.
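One way to see why grain is more noticeable on smaller formats: to fill the same screen, a smaller frame has to be magnified more, and any grain or noise is magnified with it. A simplified sketch, assuming grain of similar physical size on each stock (real stocks and sensors differ), rounded frame widths and a hypothetical 10m-wide screen:

```python
# Approximate frame widths in mm (assumed, rounded values).
FRAME_WIDTH_MM = {
    "Super 16": 12.5,
    "Super 35": 24.9,
    "65mm (5-perf)": 52.5,
    "Imax (15-perf)": 70.4,
}
SCREEN_WIDTH_MM = 10_000  # hypothetical 10m-wide cinema screen


def enlargement(frame_width_mm: float, screen_width_mm: float = SCREEN_WIDTH_MM) -> float:
    """How many times the negative or sensor image is magnified to fill the screen width."""
    return screen_width_mm / frame_width_mm


for fmt, width in FRAME_WIDTH_MM.items():
    # With grain of similar physical size, on-screen grain scales with the enlargement.
    relative = enlargement(width) / enlargement(FRAME_WIDTH_MM["Super 35"])
    print(f"{fmt}: enlarged {enlargement(width):.0f}x, grain {relative:.2f}x the size of Super 35's")
```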

So those are the main optical effects of choosing a format.

Smaller formats require wider focal lengths, have a deeper depth of field, have more grain and overall feel a bit flatter.

Larger formats require longer focal lengths, have a shallower depth of field, less grain, greater resolution and clarity and overall have a more three-dimensional look with an increased feeling of depth.

There are also the all-important practical implications to be considered. Generally speaking the larger the format, the larger the form factor of the camera will be to house it and the more expensive it is to shoot on.

This calculation may be different when comparing the costs of digital and film, but among digital formats, renting a 65mm camera and lenses will be more expensive than renting a Super 35 package. Likewise, among film formats, 16mm is vastly cheaper than Imax.

So, broadly speaking, smaller formats tend to be more budget friendly and come in smaller camera packages.

DOES SENSOR SIZE MATTER?

Coming back to the question of whether sensor size matters, I don’t think any one sensor is necessarily better than another. But the effects that they produce are certainly different.

Filmmakers that want an image that immerses an audience in a crystal clear, highly detailed, wide vista with a shallow depth of field will probably elect to shoot on a larger format.

Whereas those who require a more textural, nostalgic or rougher feeling photography with less separation between the subject and the background may be drawn to smaller gauge formats.

As always, the choice of what gear is most suitable comes down to the needs of the project and the type of cinematic tone and photographic style you are trying to capture.

Using Colour To Tell A Story In Film

INTRODUCTION

Cinematography is all about light.

Light is a complex thing. It can be shaped, it can come in different qualities, different strengths and, importantly, it can take the form of different colours.

So, let’s examine this idea of colour by going through an introduction to colour theory, look at how filmmakers can create a specific colour palette for their footage and check out some examples of how colour has been used to aid the telling of different stories.

WHAT IS COLOUR THEORY?

Colour theory is a set of guidelines for colour mixing and the visual effects that different colours have on an audience.

There are many different approaches to colour theory, ranging from ideas dating back to Aristotle’s time up to later studies on colour such as those by Isaac Newton. But let's just take a look at some basic ideas and see how they can be applied to film.

When light of different wavelengths hits objects with different physical properties, it produces a colour, which we put into a category and give a name.

Primary colours are a group of colours that can be mixed to form a range of other colours. In film these are often, but not always, used sparingly in a frame. A splash of red in an otherwise green landscape stands out and draws the eye.

An important part of colour theory in the visual arts is knowing complementary colours. When a pair of complementary colours is combined, it makes white, grey or black. When the spectrum of colours is placed on a colour wheel, complementary colours always take up positions opposite each other.

When two complementary colours are placed next to each other they create the strongest contrast for those two colours and are generally viewed as visually pleasing. Cinematographers often combine complementary colours for effect and to create increased contrast and separation between two planes in an image. For example, placing a character lit with an orange, tungsten light against a blue-ish teal background creates a greater feeling of separation and depth than if both the character and the background were similar shades of orange.
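On the hue wheel used by digital colour tools, a colour's complement sits 180 degrees away. A minimal sketch using Python's standard `colorsys` module; note that this is the RGB/HSV wheel, which differs from the traditional painter's red, yellow and blue wheel, and the colour values are illustrative assumptions rather than measured light sources.

```python
import colorsys


def complementary(rgb):
    """Return the colour opposite `rgb` on the HSV hue wheel (hue rotated by 180 degrees)."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    return colorsys.hsv_to_rgb((h + 0.5) % 1.0, s, v)


tungsten_orange = (1.0, 0.5, 0.0)  # illustrative orange, channel values from 0.0 to 1.0
print(complementary(tungsten_orange))  # a blue very close to (0.0, 0.5, 1.0)
```

A neutral grey has no hue to rotate, so it maps back to itself, which is why complementary contrast depends on saturated colour rather than brightness alone.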

When it comes to the psychology of using colour, cinematographers generally fall into two camps - or somewhere in the middle. Some cinematographers such as Vittorio Storaro think that certain colours carry an innate, specific psychological meaning.

“Changing the colour temperature of a single light, changes completely the emotion that you have in your mind. I didn’t know at the time the meaning of the colour blue. It means freedom.” - Vittorio Storaro

Other filmmakers rely more on instinct and what feels best when lighting or creating a colour palette for a film. The psychology of colour can change depending on the context and background of the audience.

As well as being a means of representing and expressing different emotions, deliberate and repeated uses of colour can also be used by filmmakers as a motif to represent themes or ideas.

Another important part of colour theory is warm and cool colours. The Kelvin scale is a way of measuring the warmth of light, with lower Kelvin values being warmer and higher Kelvin values being cooler.

Warm and cool colours can have different psychological effects on an audience and can also be used to represent different physical, atmospheric conditions. Warmer colours can be used to emphasise the feeling of physical heat in a story, while cooler colours can be used to make the setting of a story feel cold or damp.

CREATING A COLOUR PALETTE

Now that we have a basic framework of colour theory to work with, let's look at the different ways that filmmakers can make a colour palette for a movie. Colour palettes in film can be created using three tools: production design and costume, lighting, and the colour grade.

The set and the clothing that the characters are dressed in are always the starting point for creating a colour palette. In pre-production, directors will usually meet with the production designer and come up with a plan for the look of the set. They might limit the art director to certain colours, or decide on specific tones for key props. The art team will then go in and dress the set by doing things such as painting the walls a different colour and bringing in pieces of furniture, curtains and household items that conform to that palette.

Since characters are usually the focus of scenes and we often view them up close, choosing a colour for their costume will also have a significant impact on the overall palette. This may be a bold primary colour that makes them stand out in the frame, or something more neutral that makes them blend into the set.

With a set to work with, the next step in creating a movie’s colour palette is with lighting.

Traditionally, film lighting is based around the colour temperature of a light which as we mentioned could be warm, such as a 3,200K tungsten light or cool, such as a 5,600K HMI. On top of this, cinematographers can also choose to introduce a tint to get to other colours. This can be done the old school way by placing different coloured gels in front of lights, or the modern way by changing the hue or tint of LEDs.
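Because equal steps in Kelvin are not equal steps in colour shift, lighting gels are commonly rated in mireds (one million divided by the colour temperature). A minimal sketch with hypothetical helper names, using the 3,200K and 5,600K values above; the resulting shift of about 134 mireds is in the region of a full CTB or CTO gel, though exact ratings vary by manufacturer.

```python
def mired(kelvin: float) -> float:
    """Micro reciprocal degrees: one million divided by the colour temperature in Kelvin."""
    return 1_000_000 / kelvin


def mired_shift(source_k: float, target_k: float) -> float:
    """Shift needed to take a source to a target colour temperature.

    Negative values mean a cooling (blue) correction; positive values mean a warming (orange) one.
    """
    return mired(target_k) - mired(source_k)


print(round(mired_shift(3200, 5600)))  # tungsten corrected towards daylight
print(round(mired_shift(5600, 3200)))  # daylight (HMI) corrected towards tungsten
```

Working in mireds means a given gel produces roughly the same shift whatever source it is put in front of, whereas the same gel would produce very different Kelvin changes at the warm and cool ends of the scale.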

DPs can either flood the entire image with monochromatic coloured light, or, as is more common, light different pockets of the image with different colour temperatures or hues. In the same way that we create contrast by having different areas of light and shadow in an image, we can create contrast by having different areas of coloured light.

Once the colour from the set and the lighting has been baked into the footage, we move into post-production, where it’s possible to fine tune this colour in the grade.

An image contains different levels of red, green and blue light. A colourist, often with the guidance of a director or cinematographer, uses grading software like Baselight or DaVinci Resolve to manipulate the levels of red, green and blue in an image.

They can change the RGB balance of specific tonal ranges, like introducing blue into the shadows or adding magenta to the highlights. They can also create power windows to change the RGB values in a specific area of the frame, or key certain colours so that they can be individually adjusted. There are other significant adjustments they can make to colour, such as setting the saturation, or overall intensity of colour, in the image.
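The sketch below is a deliberately simplified model of two of these adjustments, not how Baselight or DaVinci Resolve work internally. It weights a tint by each pixel's luma (using Rec. 709 coefficients) so that an offset mostly affects either the shadows or the highlights, and it changes saturation by blending each channel towards the pixel's luma. All function names and tint values are hypothetical.

```python
from typing import Tuple

RGB = Tuple[float, float, float]  # channel values from 0.0 (black) to 1.0 (white)


def luma(rgb: RGB) -> float:
    """Brightness of a pixel using the Rec. 709 luma weights."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def clamp(x: float) -> float:
    """Keep a channel value inside the 0.0 to 1.0 range."""
    return min(1.0, max(0.0, x))


def tint_by_tone(rgb: RGB, shadow_tint: RGB, highlight_tint: RGB) -> RGB:
    """Add `shadow_tint` mostly to dark pixels and `highlight_tint` mostly to bright pixels."""
    y = luma(rgb)
    r, g, b = (clamp(c + (1.0 - y) * s + y * h) for c, s, h in zip(rgb, shadow_tint, highlight_tint))
    return (r, g, b)


def set_saturation(rgb: RGB, amount: float) -> RGB:
    """Blend each channel towards luma: 0 gives greyscale, 1 leaves the pixel unchanged, above 1 boosts colour."""
    y = luma(rgb)
    r, g, b = (clamp(y + (c - y) * amount) for c in rgb)
    return (r, g, b)


blue_shadows = (0.0, 0.0, 0.05)         # hypothetical offset: push blue into the shadows
magenta_highlights = (0.04, 0.0, 0.04)  # hypothetical offset: red plus blue reads as magenta
print(tint_by_tone((0.1, 0.1, 0.1), blue_shadows, magenta_highlights))
print(set_saturation((0.8, 0.4, 0.2), 0.0))
```

Real grading tools use far more sophisticated tonal curves and colour spaces, but the principle of adjusting red, green and blue differently depending on brightness is the same.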

USING COLOUR TO TELL A STORY

“It’s a show about teenagers. Why not make a show for the teenagers that looks like how they imagine themselves. It’s not based on reality but mostly on how they perceive reality. I think colour comes into that pretty obviously.” - Marcell Rév

When coming up with a concept for the lighting in Euphoria, instead of assigning very specific psychological ideas to colour, Marcell Rév used colour more generally as a way to elevate scenes from reality.

He wanted to put the audience in the emotionally exaggerated minds of some of the characters and elevate the level of the emotions that were happening on screen. In the same way that the often reckless actions of the characters continuously ratcheted up the level of tension in the story, so too did the exaggerated, brash, coloured lighting.

To increase the potency of the visuals he often played with a limited palette of complementary colours. He avoided using a wide palette of colours, as it would become too visually scattered and decrease the potency of the colours that he did use.

Along with his gaffer he picked out gels, mainly light amber gels which he used with tungsten lights and cyan 30 or 60 gels which he used with daylight HMIs. They also used LED Skypanels, which they could quickly dial specific colour tints into.

“That light…that colour bouncing off the screen and arriving at us we don’t see it only with the eyes, we see it with the entire body…because light is energy. I’m sending some vibrations to you, to the camera, to the film…unconsciously.” - Vittorio Storaro

When photographing Apocalypse Now, Vittorio Storaro was very deliberate about his use of colour. He wanted the colours to be so strong and saturated that the world on film almost became surrealistic.

He wasn’t happy with Kodak’s 5247 100T film stock at the time, so he got the film laboratory to flash the negative to get the level of contrast and saturation which he was happy with.

In the jungle scenes he didn’t want to portray the location naturally. He sometimes used filters to add a monochromatic palette which was more aggressive, to increase the tension.

“I can use artificial colour in conflict with the colour of nature. I was using the symbolic way that the American army was using to indicate to the helicopter…They were using primary and complementary colours. I was using those kinds of smoke colours to create this conflict.” - Vittorio Storaro

He also described how the most important colour in the film was black, particularly in the silhouetted scenes with Kurtz. He felt black represented the unconscious and was most appropriate for scenes where the audience was trying to discover the true meaning of Kurtz, with small slivers of light, or truth, emerging from the depths of the unconscious.

What A Boom Operator Does On Set: Crew Breakdown

INTRODUCTION

In this series I go behind the scenes and look at some of the different crew positions on movie sets and what each of these jobs entails. If you’ve ever watched any behind the scenes videos on filmmaking you’ve probably seen this person, holding this contraption.

In this Crew Breakdown video I’ll go over the position in the sound department of the boom operator, to break down what they do, their average day on set and some tips which they use to be the best in their field.

ROLE

The boom operator, boom swinger or first assistant sound is responsible for placing the microphone on a set in order to capture dialogue from the actors or any necessary sounds in a scene.

They do this by connecting a boom mic, or directional microphone, to a boom pole. The mic is then connected either with an XLR cable or wirelessly to a sound mixer where the sound intensity is adjusted to the correct level.

On feature films this mixing is done separately by the sound recordist who heads the department, and is responsible for recording all the audio and delegating the positioning of the mic to the boom operator. However, for low budget features, TV shoots, documentaries or commercials, the role of the sound recordist and the boom swinger is sometimes performed simultaneously by one person.

To get the best possible sound and capture dialogue clearly, the microphone usually needs to be placed as close as possible to the actors. Since film frames are quite wide and see a lot of the location, the best way to get the microphone close to the action without it entering the shot is to attach it to a boom pole with the mic angled downwards, then use the length of the boom, held overhead, to position the microphone directly above the actors and just outside the top of the frame.
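The benefit of getting the mic close follows from the inverse square law: in a free field, the level of direct sound drops by about 6dB each time the distance to the source doubles, while background noise on a location stays roughly the same. A rough sketch, assuming free-field conditions (real rooms add reflections, so the figures are approximate) and using a hypothetical helper name:

```python
import math


def level_change_db(old_distance_m: float, new_distance_m: float) -> float:
    """Change in direct-sound level when a mic moves between two distances (free-field inverse square law)."""
    return 20 * math.log10(old_distance_m / new_distance_m)


print(f"{level_change_db(1.0, 0.5):+.1f} dB")  # boom moved from 1m to 0.5m above the actor
print(f"{level_change_db(0.6, 1.2):+.1f} dB")  # boom pulled back to stay out of a wider frame
```

This is why a wide frame that forces the boom further away is costly: the mixer has to add gain to recover the dialogue, which raises the background noise along with it.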

For stationary shots without camera movement this involves finding a position for the boom and holding it throughout the take. Sometimes for long documentary interviews this can be done with the help of a stand. However, for shots which involve camera movement or actors that are moving and talking, the boom operator is tasked with performing a kind of dance. They need to move tighter or wider as the camera does, always fighting to get the mic as close as possible while making sure it never dips into the frame and enters the shot. If this happens during a take the DP, director or 1st AD will often call out ‘boom’.

While the act of operating the boom mic during filming is their primary responsibility, there are also some other tasks that boom swingers need to perform.

Dialogue is usually captured by two different types of microphone: the boom mic, as we mentioned, and lapel or lav microphones. These are small microphones which are attached directly to the actors with a clip or adhesive tape. They are usually positioned under clothing near the chest or throat so that they pick up intimate sound but stay unseen by the camera.

These microphones are attached to a transmitter. This wirelessly transmits the recorded sound to a receiver that is then connected to a sound mixer where the audio feed is recorded. The boom operator is usually responsible for attaching this lav microphone to the necessary actors.

It’s best practice to always tell the actor how you’ll be attaching the mic, as the process can be a bit invasive. Experienced actors are used to this, but it’s still professional to ask their permission before touching them or putting on the lav mic.

The boom operator also assists the sound recordist with any necessary technical builds or changes, such as switching out batteries or attaching a Lockit box to the camera to synchronise timecode.

AVERAGE DAY ON SET

After arriving on set the boom operator will track down a copy of the shooting schedule and sides. The schedule lists the scenes planned for the day, while the sides are the pages from the script containing the dialogue for those scenes. They’ll read the sides to see what dialogue needs to be recorded and which actors need to be mic’d up. They’ll replace any necessary batteries and make sure everything is charged up and ready to go.

The boom operator or the sound recordist may test that their audio feed is getting transmitted to VT and hand out a pair of headphones and a receiver to the director so they can monitor the sound during takes.

The boom operator will mic up any actors in the scene who have dialogue and prep their boom setup. This may involve changing their gear, such as putting the mic in a blimp covered with a furry windshield known as a ‘deadcat’ to cut out wind noise when recording a scene outdoors.

They’ll find out the lens that the camera is shooting with, or take a peek at the monitor to see how wide the frame is and how close they can position the boom without getting in the shot.

Once ready to record a take, the 1st AD will call ‘roll sound’, the sound recordist will begin recording, and then the cameras will roll. The boom swinger will then point the mic at the 2nd AC, who will announce the information on the clapperboard and then clap it, giving the editor a point where they can sync the sound with the video. The boom operator then quickly positions the boom above the actors, ready to record dialogue.

They always wear headphones while recording, which lets them hear whether the boom needs to move further away, closer or to a different angle to get the best possible sound.

When recording sound, the default rule is to capture the audio of whatever is on screen.

So, for wider shots where multiple actors are in a frame the boom swinger may alternate and move the boom closer to whoever is speaking, positioning the mic back and forth as the actors exchange dialogue. Then for close ups where only a single actor is on screen they will usually focus only on recording sound for that actor alone. When the camera switches to a close up of the next actor then their full sound will get recorded. By doing this they will then have clear dialogue for both actors which the editor can use at their discretion.

Sometimes the boom is unable to capture a certain sound effect or line of dialogue during a scene. The sound team will then record it between setups, pulling the actor aside if needed, as what is known as wild sound: sound that isn’t recorded with any specific video footage but which may be synchronised or used later.

They may also need to record ‘room tone’, a quiet ambient recording of the space without any dialogue. This can be placed as a layer underneath the dialogue in the edit to make the cuts more natural and provide a background noise to the scene.

In this case the 1st AD will make an announcement to the set, the crew will awkwardly freeze so as not to make any noise and the mic will record about a minute of quiet, ambient sound.

TIPS

Since any footage where the boom dips into shot will be unusable, one of the most valuable skills for a boom operator is knowing how wide a frame is. If you’re starting out, it’s useful to look at the framing on the monitor and find what is called an ‘edge of frame’: a reference point on the set, such as an object or marker, that indicates what is in or out of the shot.

As boom operators become more experienced they’ll learn the field of view of different focal lengths and be able to place the boom without needing to look at the frame. For example, if they know how wide a 35mm lens sees, they can imagine its field of view and be sure to stay out of it.
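To put a rough number on the field of view of a 35mm lens, here is a minimal sketch of the standard angle-of-view formula, horizontal FOV = 2 × arctan(sensor width / (2 × focal length)). It assumes a rectilinear spherical lens focused at infinity and an illustrative Super 35 sensor width of 24.89mm (the name SUPER35_WIDTH_MM is hypothetical, and real sensor widths vary by camera and recording mode), so treat the results as approximate.

```python
import math

# Assumed Super 35 sensor width in mm; the real value varies by camera and recording mode.
SUPER35_WIDTH_MM = 24.89

def horizontal_fov_deg(focal_mm: float, sensor_width_mm: float = SUPER35_WIDTH_MM) -> float:
    """Horizontal angle of view (degrees) of a rectilinear lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_mm)))

# Wider lenses (shorter focal lengths) see a much larger angle of the set.
for focal in (18, 25, 35, 50, 85):
    print(f"{focal}mm: about {horizontal_fov_deg(focal):.0f} degrees wide")
```

Under these assumptions a 35mm lens sees roughly 39 degrees across. For an overhead boom the vertical angle matters most, and it follows from the same formula using the sensor height instead of the width.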

As space on a set can sometimes be limited, it is useful to find the best position to stand before shooting begins. When finding a position it’s important to be mindful of how the camera and actors will move and to identify any lights that may cast shadows or reflective surfaces that will pick up the mic in shot.

Never position the boom between a light source and a character, as it will cast a shadow of the gear into the image. Adjusting the length of the boom so that it has enough reach but isn’t overextended will also save space and keep it out of the way of other crew members, gear and lights.

Boom mics are directional, meaning that they most prominently capture whatever sound they are pointed at. Any sound behind or off to the side of the mic will be recorded much more quietly. Therefore positioning the boom overhead, with the mic facing down towards the speaker, is most common.

In a dialogue scene with two people close together the operator may be able to turn the angle of the directional mic toward whoever is speaking at the time without needing to move the position of the actual pole.

If the frame has a lot of headroom, it is also possible to boom from underneath the frame. However, outdoors this points the mic up at the sky, where it may pick up excess aviation noise from passing planes, so it should be avoided unless necessary.

If you’ve ever operated a boom during a long scene you’ll know that holding it in an awkward position gets surprisingly heavy surprisingly quickly. The solution is to either spend more time at the gym or find positions that better mitigate the weight of the mic pole, such as resting it on a shoulder or on the head.

The Most Popular Cinema Lenses (Part 4): Panavision, Tokina, Atlas, Canon

In the fourth part of this series I’ll look at the Panavision G-Series anamorphics, Tokina Vistas, Atlas Orions and the Canon S16 8-64mm zoom lens.

INTRODUCTION

While many think that only the camera is responsible for the look that footage has, the glass that is put in front of the camera has just as great an influence over how a film looks.

In the fourth part of this series I’ll look at some popular lenses used in the film production industry and break down each lens’s ergonomics, the formats it is compatible with and its all-important look, using footage from movies shot with this glass.

Hopefully this will provide some insight into what kind of jobs and stories each lens is practically and aesthetically suited for. Let’s get started.

PANAVISION G-SERIES

In 2007 Panavision launched the G-Series, a line of lightweight anamorphic lenses that cover a 35mm frame.

In a past episode we looked at another of Panavision’s anamorphic lens series, the Cs, which were launched in 1968 and are probably considered their flagship product from that era. The classic, vintage anamorphic look of the Cs is still highly sought after today, despite their relative scarcity and mish-mash ergonomic designs.

The Gs were released by Panavision for DPs that prefer a slightly more updated iteration of the Panavision anamorphic look with easily workable, modern ergonomics.

The older Cs came in different sizes, with different apertures, close focus capabilities and front diameters, whereas the Gs come in more consistent sizes, with more standardised T-stops and front diameters. This makes working with the Gs far easier and quicker for camera assistants. For example, when changing between G-Series lenses, the focus and iris gears sit at almost identical positions, meaning the focus motors don’t have to be moved.

The Gs can be shot wide open at T/2.6 and give exactly the same exposure from lens to lens, whereas the apertures of the Cs need to be individually tweaked after each lens change. Their standardised front diameters mean that the same clip-on matte box can be used across lenses without swapping out its back, and their more standardised lengths and weights make it easier to rebalance a gimbal after a lens change.

The Gs also have more subtle breathing, which means the image will shift less when the focus is racked.

The update of the Gs also carries over into their optical look. They have higher contrast, greater sharpness, better aberration control and glare resistance, and overall resolve higher resolution images, while maintaining Panavision’s beautiful anamorphic bokeh and focus falloff.

DPs such as Matthias Koenigswieser enjoy the more consistent and modern anamorphic look of the Gs. On Christopher Robin he combined the C-Series and the G-Series: he used the sharper, more modern Gs when shooting on slightly softer 35mm film, and the softer, more vintage Cs for footage that was shot digitally at a higher resolution. In this way the two balanced out to provide a consistent look across mediums.

Fun fact, when I camera assisted Matthias on a TV commercial he also opted to use the Gs for a contemporary, sharper looking, anamorphic car shoot.

Overall, Panavision G-series are great for cinematographers who need a lightweight lens that is solidly constructed, quick and easy to work with and desire a slightly updated Panavision anamorphic look that is more optically consistent.

TOKINA VISTA

From an anamorphic lens that covers the 35mm format, to a large format spherical lens, let's take a look at the Tokina Vista primes. This set of lenses features a massive 46.7mm image circle. This means that they cover almost any cinema camera on the market, including full frame sensors and large format sensors like the Red Monstro 8K or the Alexa LF.

They have a fast aperture of T/1.5 across the entire range of focal lengths. This means that when the longer lenses are combined with large format cameras, the depth of field becomes razor thin.
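Why longer lenses at a fast stop get so thin can be sketched with the standard thin-lens hyperfocal approximation. This is a minimal sketch under stated assumptions: it treats T/1.5 as if it were f/1.5 (T-stops measure light transmission, so the true f-number is slightly lower), assumes an illustrative circle of confusion of 0.03mm, and the function name depth_of_field_mm is hypothetical. Real acceptable-sharpness values depend on sensor size and viewing conditions.

```python
import math

def depth_of_field_mm(focal_mm: float, f_number: float, subject_mm: float,
                      coc_mm: float = 0.03) -> float:
    """Approximate total depth of field (mm) from the thin-lens hyperfocal formulas."""
    # Hyperfocal distance: focusing here keeps roughly half that distance to infinity sharp.
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        return math.inf  # the far limit of sharpness extends to infinity
    far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return far - near

# Subject 2 m from the camera, lens wide open at (roughly) 1.5.
for focal in (25, 50, 100):
    print(f"{focal}mm: {depth_of_field_mm(focal, 1.5, 2000):.0f} mm in focus")
```

With these assumptions, halving the focal length at the same stop and distance gives roughly four times the depth of field, because depth of field scales approximately with the inverse square of focal length when the subject is well inside the hyperfocal distance. On large format the effect compounds, since matching a given framing requires a longer focal length than on Super 35.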

They are very solidly constructed and come in consistent lengths across the range, with the iris and focus gears all being the same distance from the mount. The front diameter is standardised to 114mm.

The barrels feature plenty of clearly marked distance measurements, which makes focus pulling marks more accurate. Although super robust, their full metal construction and the ample glass needed to cover large sensors make the lenses pretty hefty.

The lenses are super sharp, come with modern coatings and show very few chromatic aberrations. This means their optical qualities are far less vintage and imperfect than those of other large format lenses such as ARRI DNAs. Their modern coatings also mean that the lenses don’t flare massively, but when hit by light at the right angle they will produce a blue-green rainbow flare.

Their look is super modern, ultra-crisp and sharp across the entire width of the frame. Even wide open at T/1.5, the in-focus sweet spot of the lens is very crisp. They also have minimal distortion, even at the widest 18mm focal length.

The Tokina Vistas are a great option for DPs that need an ergonomically designed, fast, super sharp, modern looking spherical lens that resolves high resolution images and covers large format cameras.

ATLAS ORION

The Orion series of anamorphic lenses from Atlas was first unveiled in 2017. After first starting with just a 65mm lens, the set has now been expanded to 7 focal lengths ranging from 25mm to 100mm.

Atlas was started as a small company with the goal to manufacture professional grade anamorphic cinema lenses at an affordable price point. This may not seem all that affordable at first glance, but when compared to purchasing or renting other high end anamorphic glass, the price is significantly reduced.

Since these front anamorphic lenses were designed recently, they feature a solid, modern design with a robust housing, well spaced distance markings and a smooth focus gear. They aren’t the smallest, lightest or most compact anamorphic lenses, particularly when compared to lenses such as Kowas, but they are solid.

All focal lengths, even the 25mm, feature an aperture of T/2. This means they are very fast for anamorphic lenses which typically aren’t as fast as their spherical counterparts. However, when shot wide open at T/2 they do lose some sharpness, with their sweet spot being closer to around T/4.

Another great feature is that the Orions have very good close focus capabilities, which makes it easy to shoot close ups in focus without the use of diopters.

When it comes to their look, I’d say they have subtle vintage characteristics, but overall create a more traditional anamorphic look with good levels of contrast and no crazy focus falloff or distortion.

So if you’re looking for a solid set of modern, fast anamorphic primes that cover a 35mm sensor, with some vintage characteristics and great close focus, all at an affordable price point, then Orions may be the way to go.

CANON S16 8-64mm

So far we’ve looked at lenses that cover Super 35 and large format. Next let’s take a look at a smaller format lens, Canon’s super 16 zoom, the 8-64mm.

Since this lens was designed for Super 16mm film, it doesn’t cover many of today’s modern sensors, which are Super 35 size or larger. However, it is still widely used today as the zoom of choice for 16mm film work, on the Alexa Mini in its S16 mode, or on certain Micro Four Thirds digital cameras.

Its 8-64mm range is roughly equivalent to a 14.5-115mm lens on Super 35. This means that this single lens is very flexible and covers a whole range of conventional prime focal lengths. Despite this long zoom range it focuses as close as 22". This made it a popular workhorse in the 90s, especially for TV work and documentaries shot on 16mm.
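The Super 35 equivalent quoted for the 8-64mm is simply the focal length multiplied by a crop factor. The figures in the text (8mm to about 14.5mm, 64mm to about 115mm) imply a factor of roughly 1.8, so the sketch below uses that value as an assumption taken from those numbers rather than a published specification; the exact factor depends on which Super 16 and Super 35 frame sizes are compared. The names S16_TO_S35_CROP and super35_equivalent are hypothetical.

```python
# Crop factor implied by the figures in the text (14.5 / 8 and 115 / 64 are both about 1.8).
# Approximate: the exact factor depends on which S16 and S35 frame sizes are compared.
S16_TO_S35_CROP = 1.8

def super35_equivalent(focal_mm: float, crop: float = S16_TO_S35_CROP) -> float:
    """Focal length that gives a similar field of view on Super 35."""
    return focal_mm * crop

wide_mm, tele_mm = 8, 64
print(f"Zoom ratio: {tele_mm / wide_mm:.0f}x")
print(f"Super 35 equivalent: {super35_equivalent(wide_mm):.1f}-{super35_equivalent(tele_mm):.1f}mm")
```

With a factor of 1.8 the wide end works out to 14.4mm, within rounding of the 14.5mm quoted, and the 8x zoom ratio stays the same on either format.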

For such a long zoom range it’s pretty compact and lightweight, but it’s built like a tank. The focus rotation is only about 180 degrees, which makes it a good pairing for solo documentary operators. It has some distance markings on the barrel, probably not as many as most focus pullers would like, but enough to get by.

With an aperture of T/2.4 the lens is fairly fast for a zoom. When shot wide open the image tends to get a little softer and ‘dreamier’, as if a diffusion filter has been added. But if it’s stopped down just a bit, to around T/2.8, the lens sharpens up.

For a vintage zoom it’s amazingly sharp, which you usually want when shooting 16mm film, as it’s a lower fidelity medium. However, thanks to its vintage lens coating, it isn’t overly sharp or clinical.

Its solid construction, long zoom range, fast aperture, great close focus and sharp but slightly vintage look make the Canon 8-64mm a great choice for DPs looking for a 16mm zoom.

Cinematography Style: Conrad Hall

In this episode I’ll look at what Conrad Hall had to say about his philosophy on photography and show some of the gear which he used in order to cultivate his photographic vision.

INTRODUCTION

If you were to Google ‘who are the best cinematographers of all time?’, it won’t take long to stumble upon the name Conrad Hall. Through ten Academy Award nominations and three wins, the strength of his career speaks for itself.

His photography is characterised by neutral colour palettes, inventive uses of hard light, reflections and character focused framing, which all culminated in a style he called ‘magic naturalism’. In this episode I’ll look at what Conrad Hall had to say about his philosophy on photography and show some of the gear which he used in order to cultivate his photographic vision.

BACKGROUND

Hall was born in 1926 in French Polynesia and in his mid teens began attending boarding school in California. After graduating he signed up for a degree in journalism at USC, however that didn’t last long.

“Boy am I lucky that I got a D+ in journalism and had to change my major.”

He switched to the cinema program and began learning the basics of filmmaking, a relatively new art form to study at the time.

Working on a Hollywood camera crew back then required membership of the International Photographers Guild, which Hall didn’t have, leaving him without a job. To get around this, Hall and some of his classmates created their own independent production company and produced a film called Running Target, which Hall shot. This gained him membership of the guild; however, due to regulations he wasn’t allowed to be credited as cinematographer on the film, only as a visual consultant, even though he shot the entire film.

As a member of the guild he then worked his way up the ranks, from camera assistant, to camera operator until he eventually got a chance to photograph the feature film Morituri as the director of photography.

Hall’s career went on to span many decades, from the 1950s to the early 2000s, during which time he worked with a host of esteemed directors which included: Richard Brooks, Stuart Rosenberg, John Huston, Steven Zaillian and Sam Mendes.

PHILOSOPHY

When Hall was asked how he decided where to point the camera, he is reported to have said, “I point it at the story.”

To him, the story was always the starting point for determining his photographic decisions and the ultimate target he aimed for. Although the look of his photography changed between projects depending on the story and director he was working with, he carried over some philosophical concepts throughout his career.

“I’ve never been somebody to get a movie to look absolutely perfect… Mine are always sort of flawed somehow or other. And in a way I don’t mind that because it’s not about perfection it’s about the overall feeling of the thing.”

His light wasn’t always perfectly soft or perfectly shaped, it didn’t always have a perfect contrast ratio between light and shadow, and his framing wasn’t always perfectly symmetrical. By not always aiming for a perfectly beautiful image, much of his work carries a feeling of naturalism. He often incorporated interesting flourishes as well, such as reflections, hard shadows and atmospheric textures like smoke or rain.

He called this magic naturalism: shooting things as they are, while at the same time incorporating stylistic touches that heightened the atmosphere of the story.

“I’m one of those guys who doesn’t do a lot of augmenting. But who knows how to take the accident and turn it into something wonderful, magical. I look for that. I thrive on it. I feed on it. I don’t invent stuff. It invents itself and then I notice it and use it dramatically.”

Hall was a master of observing unintentional magical moments and then using the photographic tools he had to emphasise them: zooming into the reflection of a chain gang in the sunglasses of a prison guard, throwing hard light against a tree to create ominous shadows moved by the wind, or positioning a murderer so that an accidental reflection of the rain on the window made it look like he was crying.

When combined with an otherwise largely naturalistic look, these stylised little moments of ‘happy accidents’ elevated the story in a magical way.

Because his career was so long, he started out photographing films in black and white, then moved on to colour as it replaced black and white as the dominant medium. A thread he carried through most of his colour films is an earthy, neutral colour palette. Many of his films used lots of browns, greens, whites and greys, with strong, pure black shadows. The colour was rarely strongly saturated or vivid across the frame.

This meant that when a strong colour was used, like the famous use of red in American Beauty for example, it really stood out against the rest of the film’s neutral tones.

GEAR

“It’s as complex a language as music. A Piano’s got 88 keys and you can use them in any complex way you want to. We got the sun and light. Is there anything more complex than light?”

As we mentioned, being open to ‘happy accidents’ is an important part of his cinematic philosophy, especially when it comes to light. For that reason he liked coming up with the majority of his lighting on the day, although for large spaces some basic pre-lighting work was sometimes necessary.

“I don’t like to figure things out ahead of time before the actors do”

For example, on Road To Perdition many of the large interiors were sets constructed in a studio. During prep, a rigging crew of 10 people spent 8 weeks installing a collection of greenbeds and scaffolding overhead in the studio. To this scaffolding his team attached 30 10K fresnels and 60 5Ks, all connected to dimmers and used to light the backgrounds outside the windows, essentially acting as ambient sunlight.

It also meant that all his lights were outside the sets and wouldn’t get in the way of the actors’ blocking or the framing. The sets were also electrified so that practical light sources, such as lamps, could be plugged in and used. Much of his lighting was done with tungsten balanced lights.

With these fixtures rigged in place he could then come in on the day and position the lights as he desired. A lot of the light was hard and undiffused, something he often did in many of his films. This resulted in strong lines of shadow.

He regularly created shape by breaking up the light with interesting textures or used parts of the set to shape the shadows which were cast.

When lighting interiors he would also use what he called ‘room tone’, bouncing smaller fixtures like a 1K into the ceiling to provide a soft ambient base light to a room. This filled in some of the contrast from the hard light. Because the light is soft and bounced, it’s not very directional, which makes it difficult to tell exactly where it comes from. In this way it lifts the whole space in a natural way. Once this base ‘room tone’ was in place he could work on lighting the characters, often hitting them from the side or from behind with a hard source.

He used this same hard backlight to bring out textural components like smoke or rain.

When it came to selecting lenses he liked using a wide collection of prime focal lengths from 27mm to 150mm. Unlike the recent trend of using wide angle lenses for close ups, Hall took a more traditional approach. He used wide angle lenses for wide shots and longer focal lengths for close ups that threw the background out of focus.

However, he did sometimes use long lenses, like Panavision’s 50-500mm zoom, creatively for wide shots, exaggerating the distortion of heat waves to make characters appear more wispy yet menacing.

He liked using a shallow depth of field, usually setting the aperture of his lenses between T/1.9 and T/2.5. This gave the photography an emotional dimension and clearly showed the focus (literally) of the shot.

Hall mainly used Panavision cameras and lenses. He shot on 35mm film before the advent of digital cinematography with cameras such as the Panavision Platinum. He especially liked using Panavision Primo lenses for their look, reliability and wide range of focal lengths.

To achieve the colour he liked, he used fine grain tungsten Kodak film stocks for interiors as well as exterior daylight scenes. For example, he used the more modern Kodak Vision 200T for his later work and Eastman EXR 100T for his older work. Much of the muted, neutral colour that gives his work its classic 60s and 70s feel came from his use of Eastman’s famous 100T 5254 colour negative film.

For some films, such as Butch Cassidy, he wanted the colour to be even more muted and to remove the clichéd blue often used in ‘western skies’. To do this he radically overexposed the film, then got the film laboratory to compensate for the overexposure in the print. This further washed out the colour and turned the blue a softer, lighter shade.

CONCLUSION

“Those are the kind of films that I like to get a hold of and don’t often get a chance to do. Stuff that, like, goes on forever about some basic and important human condition that is bigger than all of us and will go on forever no matter what era it’s set in.”

The films Conrad Hall chose to photograph are a reflection of his style as a whole: simple, natural stories that represent something bigger through his injection of magical moments.

One thing that he always tried to do was to tell stories so well that if the sound was turned off the audience would still understand the story just based on the images. His love for the medium, his powers of observation and ability to translate stories using whatever magic naturally occurs on set is what has made him one of the greatest of all time.

4 Reasons Movies Shouldn't Be Watched On Laptops

Let’s remind ourselves why going to the cinema is still superior to streaming movies at home by going over 4 reasons we should still make the effort to get out of the house and go to the cinema.

INTRODUCTION

According to a recent study, only 14% of adults stated they preferred viewing a new movie in the cinema, while 36% preferred streaming it from a device at home. While this shift may have been accelerated by the pandemic, I think it’s a trend that’s on the rise regardless. Fewer and fewer people are going to the cinema, and I think that’s kind of sad.

And I mean, look, I get it. I’m guilty of it too. Convenience outweighs viewing experience. 80% of my cinematic diet is probably consumed at home from a TV or a laptop rather than on the big screen.

Before this video becomes too much of a lamentation about the death of cinema, I’d like to flip it to remind us why going to the cinema is still the superior experience by going over 4 reasons we should still make the effort to get out of the house and go to the movies.

CONSISTENCY

One of the biggest issues with watching movies at home is the inconsistency of the image.

Filmmakers spend years developing their craft, putting blood, sweat and tears into lighting, testing for the perfect lenses and tweaking nuances of colour on a calibrated monitor in the grade, only to have 60% or so of the audience watch the final product on a smartphone with a cracked screen in a bright room full of reflections.

Cinema is a medium which is all about refining and tweaking the details in order to create a lasting artwork. A lot of this is undone by watching the final product in a sub-optimal viewing environment.

I mean yes, you get the gist of the visuals, but it’s kind of like listening to a carefully, meticulously written, recorded, mixed and mastered album on a noisy airplane, through the cheapest pair of headphones sold at 7-Eleven. You can kind of make out most of the lyrics and melody, but all the sonic nuance that the artists spent their time and energy creating is lost.

Most movie complexes use high end digital cinema projectors that are DCI compliant, tested to output a high standard of quality images, in a dark, light free environment. This means that the viewing experience at different cinemas around the world will be almost identical. Colour and contrast will be consistent and resolution is standardised to either 2K or 4K.

Viewing a movie at home is different. Screens made by different manufacturers have different resolutions, display colour and contrast inconsistently at varying levels of brightness, and don’t have to conform to any compliance standards.

Using different media players may also affect the colour and luminance information. For example, a pet peeve of mine is that Apple’s QuickTime Player shifts the gamma curve and plays video files with different contrast from the original file.

Also, unless you are viewing a movie at night with all the lights in the house off, there will be excess ambient light that may cause reflections or dilute the brightness of the image.

THE AUDIENCE EFFECT

One of the most noticeable effects of viewing a movie at home is that it shifts the experience from being a communal one to being an individual one.

The idea of cinema was born out of creating a medium which could be shared by an audience. I’d say that communal viewing heightens the effect that a film has on us. Whatever emotion the filmmakers impart to the audience is heightened when we share it as a group. For horror films you can hear the audience gasp, for comedies laughter rings out, and for compelling dramas you can almost feel a communal silent focus take hold.

I think part of this reaction comes from it being an uninterrupted viewing experience. Streaming sites are set up in a way to ease and encourage the process of watching films in little segments. Watch for 5 minutes. Pause and make something to eat. Watch for another 10 minutes while you simultaneously browse your phone. Skip forward past a scene you get bored of. Then come back the next day and find the movie paused right where you left off so that you can begin this fragmented viewing process again.

Filmmakers work extremely hard to design each film as a continuous, cohesive experience that suspends your disbelief and envelops you in the world of the story. Breaking down the medium by stopping and starting it destroys a movie’s ability to take hold of you.

The cinema is so important because it forces you to view a film as it was intended to be viewed, as a single, uninterrupted experience.

Sometimes filmmakers want to test your patience and use more drawn out scenes to support their point of view of the story. Sitting through a movie from beginning to end, even if you don’t care for the film, will at least give you a complete idea of what the filmmaker was intending to do.

Plus, in today’s world where everything is so sped up and our attention spans have become shorter than ever before, I think turning off your phone and watching a complete film from start to finish is an important mental exercise we should all regularly perform.

SOUND

The sound that you hear in a cinema is far more immersive than that from a laptop, phone or TV, largely because of surround sound. Consumer devices like laptops typically have small built-in speakers that emit sound from one source or direction.

Cinemas have surround sound which uses multiple speakers in multiple positions to provide sound that is more immersive and which surrounds you 360 degrees.

Like high end cinema projectors, cinema sound is standardised too. A widely used standard is Dolby Digital, which provides an audio mix with multiple channels, such as Dolby Digital 5.1. This provides 3 front channels which are sent to separate speakers, a centre, left and right, that carry clean dialogue and place on screen sounds. Two surround channels, typically sent to speakers at the sides and behind the audience, provide a fuller, 360 degree listening experience. A low frequency effects channel that carries only bass, with a fraction of the bandwidth of the other channels, makes up the final .1.

The cinema is therefore set up to provide a more captivating sonic experience that places you in the centre of the action and better draws you into the world of the movie.

THE ALTAR OF CINEMA

The final reason to go to the cinema is less of a practical one and more of a conceptual one, but is arguably the most important. This may seem a bit over the top, and hopefully no one takes offence, but I think a comparison can be made between cinema and religion in the way that they are presented.

In most religions it is of course possible to practise at home, without interacting with others, through meditation or prayer. However, all major religions have physical spaces which bring communities together: temples, churches, mosques. Often these spaces are large, impressively built and feature significant iconography.

I think as humans we are drawn to shared spaces, and get some kind of greater, more significant experience from coming together as a collective in a space that is designed and devoted to that experience.

Standing in the queue for popcorn, buying tickets, sitting amongst a group of people, watching the trailers - it’s almost ritualistic and builds up a level of excitement and reverence for the film we’re about to watch. An image which is projected onto a massive screen has to be taken more seriously than one on a smartphone.

The issue with having a continuous, never-ending supply of content to stream at home on a laptop is that it diminishes the importance of the medium. It makes movies more mundane and everyday. Taking the time to visit the cinema builds anticipation and makes it more of an experience and an event.

CONCLUSION

So much effort goes into making movies as works of art. I think they should be appreciated as such and not given the same gravity as, for example, this YouTube video. They are different mediums. The smaller the screen becomes, the more watching a film turns into an individual experience rather than the group experience that it was designed to be.

Going to the movies may be less practical than just bingeing the latest releases on a laptop, but the experience of going to a cinema elevates movies into the unique medium that they are.

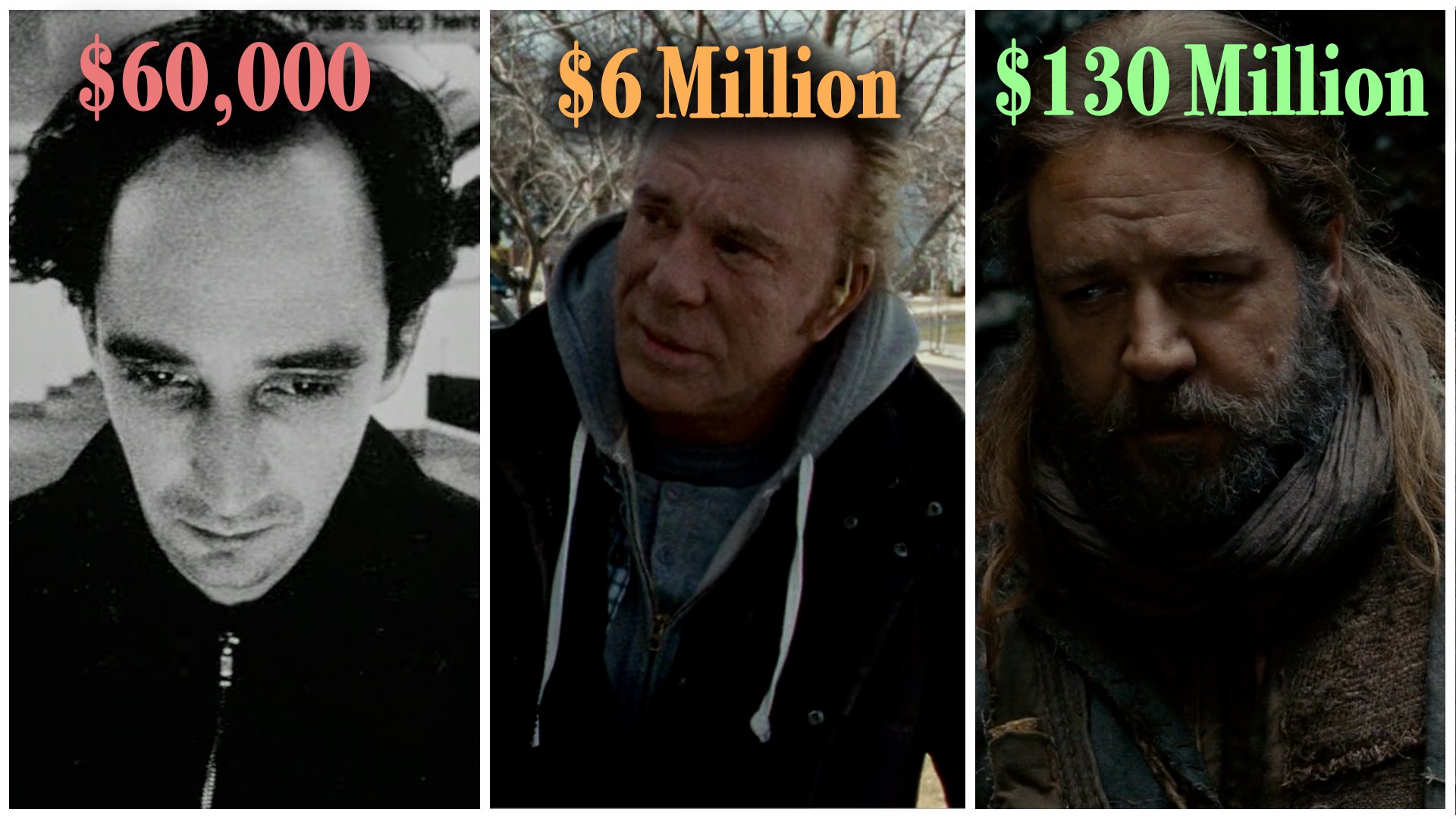

How Denis Villeneuve Shoots A Film At 3 Budget Levels

Great directors are capable of creating and maintaining very deliberate cinematic tones. This is true of Denis Villeneuve.

INTRODUCTION

Great directors are capable of creating and maintaining very deliberate cinematic tones. This is true of Denis Villeneuve. His films are thrilling, dramatic and at times epic in both tone and scope, yet also provoke subtle political, ethical and philosophical questions that provide substance to action.

His career has wound a path from lower budget productions all the way to directing some of the largest blockbusters in the world.

In this video I’ll look at three of his films at increasing budget levels: the low-budget August 32nd on Earth, the medium-budget Sicario and the high-budget Dune, to trace the formation of his style and identity as a director.

AUGUST 32ND ON EARTH

The Canadian filmmaker’s interest in movies was piqued when he was a child. He began making short films in high school, where he also developed an early love of science fiction. After leaving school he began studying science, but later changed his focus to film when he moved to the University of Quebec.

After winning some awards he began working with the National Film Board of Canada where he established a working relationship with producer Roger Frappier who developed films by emerging directors.

The NFB funded his first 30-minute short film, which showed a lot of promise. Frappier then produced Cosmos, a collection of six different shorts made by six young directors, including Villeneuve as well as his future collaborator André Turpin. It was a critical success.

Following this Villeneuve wrote a screenplay with a contained story about a woman who is thrust into an existential crisis after surviving a car accident. Frappier came on board to produce the film under his production company Max Films.

André Turpin was brought on board to serve as the cinematographer on the film. This collaboration established a trait which would continue throughout his later movies - an openness to letting DPs bring their own photographic sensibilities to the project, while at the same time always firmly maintaining his own strong perspective on the script.

To August 32nd on Earth, Turpin brought his preference for strong, saturated 35mm Kodak colour, very soft side light, character-focused framing and sharp lenses with a shallow depth of field. This was complemented by Villeneuve’s preference for subjective framing with lots of close ups and motivated, smooth camera moves from a tripod, dolly or Steadicam.

Although the film is a mature, cinematically grounded and more realistic production, it also has a dreamlike tone with moments of experimentation, some of which seem to have been inspired by his love of French New Wave films, such as Breathless.

From the philosophical walk-and-talks, to the numerous jump cuts and even the main character's short haircut, Breathless seems to be a clear influence. And if you think these are just coincidences, there’s even a shot with a poster of Jean Seberg, who starred in Breathless. While the influence of French New Wave filmmaking is strong, it’s not overpowering.

Villeneuve took parts of the style that worked effectively for a low budget film, such as a subjective focus on very few characters, and parts that suited his story, such as the experimental editing to visualise the character’s post-accident haze, and combined them with his own sensibilities for realism, mature drama, cinematic control and isolated desert locations (which would crop up in much of his later work).

August 32nd established his strong voice as a director, his ability to maintain a consistent cinematic tone, openness to collaboration and his stylistic sensibilities.

He made his first low budget film by writing a simple story with few moving parts, using experimental cutting to avoid showing expensive set pieces like the car accident, and instead devoted his budget to creating a deliberate, cinematic camera language.

SICARIO ($30 Million)

August 32nd on Earth was selected for the Cannes Film Festival, premiering in the Un Certain Regard section. Villeneuve followed it with a string of Canadian medium-budget films.

In 2013 it was announced that Villeneuve would direct Sicario, an action thriller on the Mexican border. He was drawn to the philosophical concept of the border, an imaginary line which divides two extremes, as well as examining the idea of western influence and how it is exerted by first world nations.

At a medium-high $30 million budget it was a step up from his prior Canadian films in the $6 million range. However, the script involved many large, expensive set pieces and complex action sequences, which meant the budget, relative to what needed to be shot, wasn’t huge. And whereas Villeneuve had written or co-written the screenplays for his early projects, Sicario was penned by Taylor Sheridan.

“The research I did after, as I was prepping the movie, just confirmed what was written in the script… I wanted to embrace Mexico. To see scenes from the victim’s point of view…try to create authenticity in front of the camera and not fall into cliches.”

To capture an authentic, naturalistic vision he turned to famed cinematographer Roger Deakins, whom he’d previously worked with on Prisoners.

They storyboarded many of the sequences as a team during the process of location scouting in pre-production. This nailed down the photographic style they wanted and also allowed them to work quickly and effectively when shooting complex action sequences that needed to be pieced together.

This helped decrease shooting time within the tight schedule. Villeneuve’s clear vision for the shots he needed also saved time. For example, after shooting a master of a confrontation scene, Deakins asked if he should move the camera closer to get singles of each character. Villeneuve declined, knowing that he would use the master shot as a single long take in the edit, which he did. Not shooting extraneous close ups saved the production around three hours.

In his trademark style, Deakins shot many of the scenes from an Aerocrane jib arm with a Power Pod Classic remote head, a combination he’s used for over 20 years.

This allows him to quickly and easily move the camera on any axis, making it useful not only for smooth moves but also for quickly repositioning the frame, allowing for a more organic working style and time-saving setups.

“I mean the challenge of the photography of any film is sustaining the look and the atmosphere and not breaking out of that.”

One challenge when shooting in Villeneuve’s favourite location, the desert, was controlling the natural light. Deakins did this by working with assistant director Don Sparks to break down the exterior shots and schedule each one for the specific time of day when the angle of the sunlight was right. If the sun went away or behind clouds, they had a separate list of shots they could get, such as car interiors or close ups, which were easier to light.

Another way of exercising control over the lighting and the location was shooting certain interiors in a studio. To free up space for camera moves and to keep the light as motivated and natural as possible, Deakins set up all his lights outside the set: six T12 Fresnels pushing hard, sourcey light through the windows and 65 2K space lights to provide ambience outside those windows.

He recorded ARRIRAW on the Alexa XT using Master Prime lenses, usually the 32mm, 35mm and 40mm, occasionally pulling out the 27mm for wides.

“The overall approach to the film was this personal perspective. We’re either with Emily, or with Benicio, you know. So we took all that to say well we’ll do this whole night sequence from the perspective of the night vision system.”

To do this, a special adapter was used on the Alexa to increase its sensitivity to light. Deakins then lit the scene with a single, low-power source bounced from high up to mimic realistic moonlight and keep the audience immersed.

Sicario’s much larger scope was therefore pulled off on a $30 million budget by carefully planning the complex action sequences in pre-production to save time and money; casting famous leads who drew audiences to the cinema; shooting some interiors in a studio for increased control and exteriors on location to wrap the audience up in a feeling of authenticity; and controlling the score, sound design and pacing in the edit to provide a consistently thrilling tone.

DUNE ($165 Million)

After Sicario’s critical and commercial success, and following Arrival and Blade Runner 2049, Villeneuve turned to a project he’d dreamt about making since he was a teenager - Dune - based on the sci-fi novel by Frank Herbert.

“I fell in love spontaneously with it…There’s something about the journey of the main character…This feeling of isolation. The way he was struggling with the burden of his heritage. Family heritage, genetic heritage, political heritage.”

With this thematic grounding, Villeneuve took on a sci-fi story of epic proportions with a large studio budget of around $165 million. Since a large part of the undertaking was creating his vision of the world of Dune, he teamed up with his regular production designer Patrice Vermette and experienced cinematographer Greig Fraser. Together they worked from extensive concept art and storyboards to bring the story to life. Since the way in which the sets were constructed would have an impact on the lighting, Fraser had many pre-production meetings with Vermette about light.

“The main character in the movie for me is nature. I wanted the movie to look as naturalistic and as real as possible. To do so we used most of the time natural light.”

On Arrakis, buildings are constructed from rock with few openings to protect their occupants from the oppressive heat. So instead of using direct light, the interior lighting is soft and bounced. To create this, Fraser and his gaffer rigged Chroma-Q Studio Force II LED light strips to simulate the ambient softness of bounced sunlight. For close ups where they needed more punch, he used Digital Sputnik DS6 LED fixtures.

To create depth, Fraser constantly broke up spaces with areas of light and shadow in different planes of the image. To bring out the incredible heat and harshness of the desert planet, he used hard natural light from the sun, which he cut into sections of sharp shadow in interesting ways.

Generally in cinematography, the larger a space is, the more expensive and labour-intensive it is to light. This sequence was no exception.

In a massive undertaking, Fraser’s grip and rigging team put up gigantic sections of fabric gobo over the set’s ceiling to creatively block the sunlight and create a sense of ominous depth in the space. They then had a precise window to shoot the scene, between 10:45 and 11:10am, when the angle of the sun would be perfect.

They photographed Dune in large format on the Alexa LF and Mini LF, using spherical Panavision H-Series lenses for the taller 1.43:1 IMAX sequences and Panavision Ultra Vista 1.65x anamorphic lenses for the 2.39:1 shots.

“I wanted the sky to be a vivid white. A very harsh sky. To bring kind of a violence to the desert - a harshness to it.”

To do this, Fraser had his colourist Dave Cole create a LUT for the camera in pre-production that pulled the blue out of the image and rolled off the overexposed highlights.